What if your tests could think for themselves – fix what breaks, predict where bugs will land, and evolve with your code? With accelerated DevOps pipelines, shrinking sprint cycles, and multi-platform complexity, QA teams are being asked to do the impossible: test more, test faster, and catch every bug before it hits production.

That’s why AI in software testing is more than essential: it is a transformative leap from reactive scripts to proactive intelligence. According to the World Quality Report, 75% of organizations consistently invest in AI and use it to optimize QA processes, with 41% citing faster defect detection and 38% reporting improved test coverage as measurable benefits.

But let’s be clear: AI is not here to replace testers; it’s here to help them work faster, reduce repetitive tasks, and uncover risks earlier in the development cycle.

Traditional testing tools follow strict rules and require manual updates whenever the application changes. AI, on the other hand, can adapt. It learns from historical test data, monitors user interactions, and evolves as your codebase evolves. It recommends test cases, predicts where bugs might appear, and updates test scripts automatically when the UI or API changes.

This blog will show you exactly how AI is redefining software testing, where it fits in your workflow, and how you can adopt it – without overhauling your entire tech stack.

What Is AI in Software Testing?

AI in software testing refers to the integration of artificial intelligence techniques – such as machine learning, natural language processing, and pattern recognition – into the software testing lifecycle. It transforms static, script-driven testing into an adaptive, intelligent process that evolves with your application.

Unlike traditional tools that break when the UI changes or a selector is renamed, AI-powered platforms self-heal, learn from historical data, and predict risk areas – helping testers move faster, cover more ground, and stay ahead of failure.

For example, instead of requiring you to write 200 regression tests by hand, AI can generate them based on code coverage gaps or user flows. It can also tell you which tests are worth running after a new commit, saving hours of CI time.

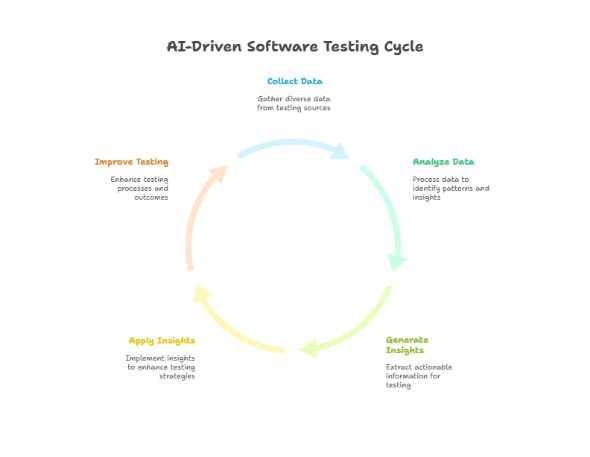

How Does AI Work in Software Testing?

AI leverages vast amounts of structured and unstructured data from your test ecosystem – like execution logs, bug history, UI snapshots, and telemetry. It then applies algorithms to identify patterns, detect anomalies, and make predictions.

Here’s what AI typically ingests:

- Test execution logs

- Code commit history

- Defect reports

- UI and API telemetry

- User behavior analytics

From that, it delivers:

- Self-healing test scripts that adapt when UI locators or flows change

- Risk-based prioritization of test suites

- Automated generation of missing or edge-case tests

- Anomaly detection in performance or visual changes

- Predictive insights into where bugs are most likely to appear

Some tools even turn user stories or requirement documents into executable test cases using NLP. Others use computer vision to analyze screenshots and detect subtle UI defects. The more the AI sees and learns, the more value it delivers.

6 Reasons AI Testing Is Essential for Modern QA Teams

Software testing today faces increasing demand. Teams are releasing more frequently across more platforms, with tighter deadlines and higher quality expectations. Manual testing and traditional automation alone cannot scale to meet this pressure. AI-powered testing introduces intelligent automation that not only speeds up testing but also makes it more targeted, adaptable, and efficient.

Here are the key reasons to adopt AI in your QA strategy:

1. Faster Feedback Loops

In fast-paced environments, speed matters. AI helps identify which test cases to run after each code change by analyzing commit history, code dependencies, and prior test outcomes. This enables:

- Faster identification of defects

- Reduced feedback cycles for developers

- Quicker validation of builds during CI/CD runs

Rather than running every test, AI ensures only the most relevant ones are executed, saving hours on every pipeline execution.
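To make the selection idea concrete, here is a minimal sketch that picks only the tests whose covered files overlap with the files changed in a commit. The coverage map, file names, and `select_tests` helper are hypothetical; real AI-driven tools also weigh code dependencies and prior test outcomes, which this sketch leaves out.

```python
from typing import Dict, List, Set


def select_tests(coverage_map: Dict[str, Set[str]], changed_files: Set[str]) -> List[str]:
    """Return the tests whose covered files intersect the changed files."""
    selected = [
        test for test, covered in coverage_map.items()
        if covered & changed_files
    ]
    return sorted(selected)


# Hypothetical coverage data: test name -> source files it exercises.
coverage_map = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"auth.py", "profile.py"},
}

print(select_tests(coverage_map, {"auth.py"}))
# prints ['test_login', 'test_profile']
```

A commit that touches only `auth.py` triggers two of the three tests here; the checkout test is skipped because nothing it exercises has changed.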

2. Smarter, More Complete Test Coverage

AI can detect gaps in your existing test suites by evaluating what has and hasn’t been tested. It uses behavior analytics, usage data, and historical bug trends to suggest:

- Missing edge cases

- Under-tested user flows

- Redundant or low-value test cases

This leads to more robust coverage with fewer tests – and higher confidence in the results.

3. Lower Test Maintenance Overhead

One of the biggest challenges in automated testing is script maintenance. Small UI changes can cause large test failures, resulting in hours of rework. AI addresses this with self-healing automation, which can:

- Automatically update locators and selectors

- Adjust test flows to accommodate minor UI modifications

- Flag outdated or irrelevant scripts for cleanup

This reduces manual intervention and keeps your test suite resilient over time.
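One simple way to picture self-healing is attribute matching: when the original locator no longer resolves, look for the element that still shares most of the attributes recorded on the last passing run. The sketch below assumes a page represented as a list of attribute dictionaries; the `heal_locator` helper, the attribute names, and the 0.5 threshold are illustrative assumptions, not any particular vendor's algorithm.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]


def heal_locator(last_known: Element, page: List[Element], min_score: float = 0.5) -> Optional[Element]:
    """Find the page element most similar to the last known attributes."""
    best, best_score = None, 0.0
    for candidate in page:
        # Score = share of recorded attributes that still match exactly.
        matches = sum(1 for key, value in last_known.items() if candidate.get(key) == value)
        score = matches / len(last_known)
        if score > best_score:
            best, best_score = candidate, score
    # Refuse to heal when even the best match is too weak to trust.
    return best if best_score >= min_score else None


# Attributes recorded the last time the test passed (hypothetical).
last_known = {"id": "submit-btn", "tag": "button", "text": "Submit", "class": "primary"}

# The id was renamed in a new build, but the other attributes survived.
page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
    {"id": "submit-button", "tag": "button", "text": "Submit", "class": "primary"},
]

healed = heal_locator(last_known, page)
print(healed["id"] if healed else "no confident match")
# prints submit-button
```

The threshold matters: returning nothing when the match is weak lets the test fail loudly instead of silently clicking the wrong element.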

4. Risk-Based Test Prioritization

AI tools assess which parts of your system are more likely to break based on past defects, usage intensity, and recent code changes. This enables:

- Smarter test planning based on real risk

- Focused exploratory testing in high-impact areas

- Fewer missed bugs in critical components

Instead of testing everything equally, AI directs your attention to where it matters most.
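As a rough sketch of how those three signals could be combined, the example below normalizes past defects and recent changes, adds usage share, and ranks components by a weighted score. The `Component` fields, the sample numbers, and the 0.4/0.3/0.3 weights are assumptions chosen for illustration; a trained model would learn such weights from your own history.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Component:
    name: str
    past_defects: int    # defects logged against the component
    usage_share: float   # fraction of user traffic, 0..1
    recent_changes: int  # commits touching it in the current release


def risk_score(c: Component, max_defects: int, max_changes: int) -> float:
    """Weighted sum of normalized signals; the weights are illustrative assumptions."""
    defects = c.past_defects / max_defects if max_defects else 0.0
    churn = c.recent_changes / max_changes if max_changes else 0.0
    return 0.4 * defects + 0.3 * c.usage_share + 0.3 * churn


def rank_by_risk(components: List[Component]) -> List[str]:
    """Return component names ordered from highest to lowest risk."""
    max_defects = max(c.past_defects for c in components)
    max_changes = max(c.recent_changes for c in components)
    ranked = sorted(components, key=lambda c: risk_score(c, max_defects, max_changes), reverse=True)
    return [c.name for c in ranked]


# Hypothetical components and signals.
components = [
    Component("payments", past_defects=12, usage_share=0.5, recent_changes=8),
    Component("search", past_defects=3, usage_share=0.3, recent_changes=10),
    Component("settings", past_defects=1, usage_share=0.05, recent_changes=1),
]

print(rank_by_risk(components))
# prints ['payments', 'search', 'settings']
```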

5. More Efficient Resource Allocation

Running all tests, all the time, is neither practical nor necessary. AI helps optimize resource usage by:

- Recommending the smallest possible set of tests for maximum confidence

- Identifying duplicate or low-impact test cases

- Enabling intelligent scheduling and parallel execution

This makes better use of infrastructure and team time, especially in large-scale projects or organizations with limited environments.
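Finding a small set of tests that still covers everything is a set-cover problem, and a greedy heuristic is a common first approximation. The sketch below repeatedly picks the test that covers the most not-yet-covered features. The test names and feature labels are hypothetical, and greedy selection gives a good but not guaranteed-minimal suite.

```python
from typing import Dict, List, Set


def minimize_suite(coverage: Dict[str, Set[str]]) -> List[str]:
    """Greedy set cover: repeatedly pick the test covering the most uncovered items."""
    uncovered = set().union(*coverage.values())
    chosen: List[str] = []
    while uncovered:
        # Iterate over sorted names so ties are broken deterministically.
        best = max(sorted(coverage), key=lambda t: len(coverage[t] & uncovered))
        chosen.append(best)
        uncovered -= coverage[best]
    return chosen


# Hypothetical mapping: test name -> features it exercises.
coverage = {
    "test_a": {"login", "logout"},
    "test_b": {"login"},
    "test_c": {"checkout", "refund", "logout"},
    "test_d": {"refund"},
}

print(minimize_suite(coverage))
# prints ['test_c', 'test_a']
```

Here `test_b` and `test_d` add nothing the other two do not already cover, which makes them candidates for the duplicate or low-impact list.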

6. Supports, Not Replaces, Human Testers

AI does not remove the need for human testers. It removes tedium. By handling repetitive or predictable tasks, AI frees up testers to:

- Explore edge cases

- Understand nuanced business logic

- Test for usability and accessibility

- Make informed decisions about go/no-go releases

Testers shift from being task executors to quality strategists – making AI a force multiplier, not a replacement.

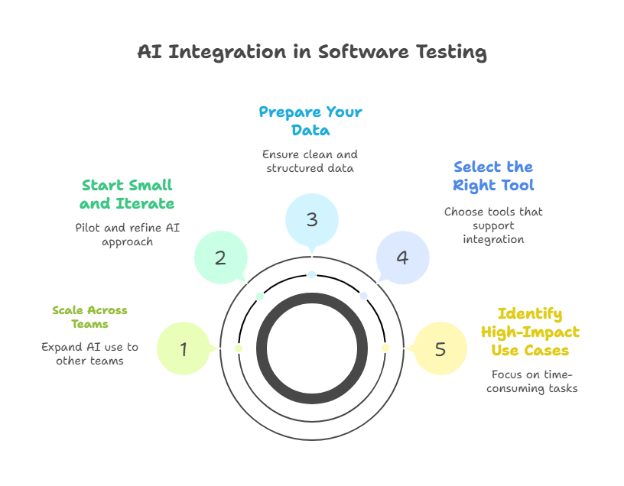

A Practical 5-Step Roadmap to Start Your AI Testing Journey

Getting started with AI doesn’t require a full tech overhaul. Here’s a practical 5-step roadmap:

1. Identify High-Impact Use Cases

Begin by focusing on areas where manual testing drains the most time – like regression testing, flaky scripts, or repetitive test case creation. These are ripe for automation and AI support. For example, regression testing often consumes over 40% of QA effort, yet much of it involves low-value, repetitive checks.

Worth noting: According to the World Quality Report, almost two-thirds (65%) of organizations say higher productivity is the primary quality outcome of using AI.

2. Select the Right Tool

Choose an AI tool that fits your ecosystem – tech stack, CI/CD setup, and testing needs. Look for platforms with NLP for auto-generating test cases, ML-driven test selection, and self-healing scripts that adapt to UI changes. For instance, a digital bank integrated Functionize into their pipeline and improved test coverage by 35% while cutting maintenance time by 40%. The key is finding tools that work with you, not just sound impressive.

3. Prepare Your Data

AI thrives on clean, structured data. Feed it logs, past defects, test results, and code coverage insights to help it learn what matters. Avoid noisy data – like inconsistent logs or untagged defects – which can reduce model accuracy.

4. Start Small and Iterate

Run a pilot with one project or module to test the waters. Track KPIs like defect detection, test run time, and coverage. A logistics startup applied AI only to API testing and saw a 3x faster CI/CD pipeline by running fewer but smarter tests. Starting small helps teams fine-tune their approach before scaling up.

5. Scale Across Teams

Once the model proves effective, extend it to other teams and apps. Provide training so testers can interpret AI insights and offer feedback. Successful teams embed AI into their culture – treating it as a co-pilot, not a black box. This helps scale intelligently while keeping quality front and center.

AI Testing Use Cases: From Test Generation to Self-Healing Scripts

AI in testing can touch nearly every part of the QA process. Here are the most common (and highest ROI) areas:

1. Test Case Generation from Requirements

AI can convert plain English requirements or user stories into test cases using Natural Language Processing (NLP). This drastically reduces the time QA teams spend manually creating tests. For example, Qyrus, Quinnox’s Intelligent Quality-as-a-software platform, can interpret Gherkin-style inputs and auto-generate functional test cases. This is particularly useful in Agile environments, where rapid story-to-test conversion accelerates sprint cycles.
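To show what "story-to-test conversion" produces, here is a deliberately simple, rule-based stand-in: it parses a Gherkin-style scenario into setup, action, and assertion steps. This is not how Qyrus or any NLP model works internally; the `parse_scenario` helper and the sample scenario are assumptions for illustration only.

```python
from typing import Dict, List


def parse_scenario(text: str) -> Dict[str, List[str]]:
    """Group Gherkin steps into setup, action, and assertion lists."""
    sections = {"Given": "setup", "When": "action", "Then": "assertion"}
    case: Dict[str, List[str]] = {"setup": [], "action": [], "assertion": []}
    current = None
    for raw in text.strip().splitlines():
        keyword, _, rest = raw.strip().partition(" ")
        if keyword in sections:
            current = sections[keyword]
        elif keyword != "And" or current is None:
            continue  # skip titles, blank lines, and orphaned "And" steps
        # "And" steps inherit the section of the step before them.
        case[current].append(rest)
    return case


# Hypothetical user story written in Gherkin style.
scenario = """
Scenario: Successful login
  Given a registered user
  When the user submits valid credentials
  Then the dashboard is displayed
  And a welcome message is shown
"""

print(parse_scenario(scenario)["assertion"])
# prints ['the dashboard is displayed', 'a welcome message is shown']
```

An NLP-based tool goes further by handling free-form requirements, but the output has the same shape: structured steps a test runner can execute.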

2. Test Case Prioritization

Instead of running every test with each code change, AI algorithms can analyze change history, code coverage, and defect density to prioritize the most relevant tests. This helps teams focus on high-risk areas first. Companies have reported up to 40% reduction in test execution time using AI-based test selection – especially useful for large-scale regression suites.

3. Defect Prediction

AI models trained on historical defect data can predict which modules are most likely to break in future releases. This helps QA teams focus their efforts where they matter most. For instance, an enterprise SaaS provider used AI to analyze commit history and bug patterns, leading to a 30% increase in early bug detection in critical areas.

4. Self-Healing Test Scripts

UI-based tests often break when minor front-end changes are introduced. AI-powered tools can automatically identify changes in the DOM or element properties and update test scripts without manual intervention. This reduces flaky tests and maintenance overhead. Companies using self-healing tools report up to 70% less test script rework.

5. Visual Testing with AI

AI can detect visual inconsistencies – like layout shifts, color mismatches, or misaligned elements – that traditional assertion-based testing might miss. Visual AI tools compare UI snapshots pixel by pixel, factoring in browser types and device responsiveness. This is especially valuable for UX-driven products like e-commerce or mobile apps.
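The pixel-by-pixel comparison can be sketched with nothing more than nested lists of RGB tuples. The example below reports the fraction of pixels that differ by more than a tolerance, so tiny rendering differences do not count as defects. The `diff_ratio` helper, the tolerance of 10, and the 2x2 "screenshots" are assumptions; this covers only the raw comparison, not browser or device handling.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def diff_ratio(baseline: Image, current: Image, tolerance: int = 10) -> float:
    """Fraction of pixels where any RGB channel differs by more than the tolerance."""
    if len(baseline) != len(current) or any(len(a) != len(b) for a, b in zip(baseline, current)):
        raise ValueError("images must have the same dimensions")
    total = changed = 0
    for row_a, row_b in zip(baseline, current):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += 1
            if max(abs(x - y) for x, y in zip(pixel_a, pixel_b)) > tolerance:
                changed += 1
    return changed / total if total else 0.0


WHITE = (255, 255, 255)

# Hypothetical 2x2 screenshots: one pixel shifts slightly, one changes color.
baseline = [[WHITE, WHITE], [WHITE, WHITE]]
current = [[WHITE, (255, 250, 255)], [(200, 0, 0), WHITE]]

print(diff_ratio(baseline, current))
# prints 0.25
```

Only the red pixel counts as a change; the faint shift in the top-right pixel stays inside the tolerance.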

6. Intelligent Test Data Generation

Generating diverse, production-like test data is critical for robust testing. AI can simulate real user behavior and create synthetic yet realistic test data that covers edge cases. This helps prevent data bias and improves test coverage. Financial services firms, for instance, use AI to mimic transaction patterns for more accurate fraud testing.

7. Anomaly Detection in Test Results

Instead of manually reviewing logs or dashboards, AI can flag abnormal patterns – like sudden spikes in response time or memory usage – before they escalate. It can learn from historical trends and alert teams proactively. This is particularly effective in performance testing and post-deployment monitoring.
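A basic version of "learning from historical trends" is a z-score check: compare each new measurement against the mean and standard deviation of past runs. The sketch below uses Python's standard `statistics` module; the response times and the threshold of 3 standard deviations are hypothetical, and production tools typically use richer models than this.

```python
from statistics import mean, stdev
from typing import List


def find_anomalies(history: List[float], latest: List[float], threshold: float = 3.0) -> List[float]:
    """Return latest values more than `threshold` standard deviations from the historical mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # A perfectly flat history: any deviation at all is unusual.
        return [x for x in latest if x != mu]
    return [x for x in latest if abs(x - mu) / sigma > threshold]


# Hypothetical response times in milliseconds.
history = [120, 118, 125, 122, 119, 121, 123, 120]
latest = [121, 124, 310]

print(find_anomalies(history, latest))
# prints [310]
```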

AI vs Manual Testing: What Testers Still Do Best

AI may be transforming testing, but it doesn’t replace the human brain – it amplifies it. While AI excels at automating repetitive tasks, mining patterns, and accelerating feedback loops, there are still critical areas where human testers are irreplaceable.

Here’s what AI can’t replicate – yet:

- Interpreting Ambiguous Requirements: Business logic isn’t always black and white. Testers bring context, domain knowledge, and the ability to ask the right questions when stories are vague or incomplete.

- Exploratory Testing & Creative Thinking: AI follows rules. Humans break them – intentionally. Exploratory testing thrives on intuition, curiosity, and unpredictability – traits AI hasn’t mastered.

- Evaluating User Experience (UX): A test case can verify that a button works, but only a human can judge whether it’s intuitive. Usability, emotional impact, and friction in the flow still require human empathy and design literacy.

- Making Go/No-Go Calls: When a release is on the line, AI can provide data – but the decision to ship often involves business trade-offs, user sentiment, and nuanced judgment calls that only humans can make.

The most effective QA teams don’t choose between AI and humans – they combine them. Let AI handle the scale and speed; let testers handle the strategy and subtlety. That’s the future of testing: augmented, not automated.

Best Practices for AI in Software Testing

AI testing can drive significant improvements in speed, accuracy, and scalability – but only when applied thoughtfully. To make the most of AI-powered tools and avoid wasted effort, follow these foundational best practices:

Start with Clear Goals

Before introducing any AI tool or capability, define what success looks like. Are you trying to reduce test cycle time? Improve coverage in critical flows? Eliminate maintenance overhead?

Clear goals help you:

- Choose the right use cases and tools

- Set measurable expectations for adoption

- Align stakeholders and development teams

Avoid vague ambitions like “add AI to testing.” Instead, aim for objectives such as “reduce regression execution time by 50 percent in the next quarter” or “automate visual validation for core user journeys.”

Feed Clean, Relevant Data

AI models are only as effective as the data they are trained on. If your test logs are outdated or your defect records inconsistent, the AI’s recommendations will be unreliable.

To get the best results:

- Clean up old or redundant test cases

- Tag test results and bugs consistently

- Provide clear test execution histories and coverage metrics

- Remove noise or irrelevant data from your pipelines

Think of data as fuel. High-quality fuel produces better performance, fewer false alarms, and smarter prioritization.
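Two of the cleanup steps above, dropping untagged records and removing duplicates, are easy to automate before any data reaches an AI tool. The sketch below assumes defect records exported as dictionaries with `id` and `component` fields; the field names and sample records are hypothetical.

```python
from typing import Dict, List

Record = Dict[str, str]


def clean_defects(records: List[Record]) -> List[Record]:
    """Drop records without a component tag and remove duplicates by defect id."""
    seen = set()
    cleaned = []
    for record in records:
        if not record.get("component", "").strip():
            continue  # untagged defects give the model nothing to learn from
        if record["id"] in seen:
            continue  # duplicate export of the same defect
        seen.add(record["id"])
        cleaned.append(record)
    return cleaned


# Hypothetical defect export with one duplicate and one untagged entry.
records = [
    {"id": "BUG-1", "component": "payments", "severity": "high"},
    {"id": "BUG-1", "component": "payments", "severity": "high"},
    {"id": "BUG-2", "component": "", "severity": "low"},
    {"id": "BUG-3", "component": "search", "severity": "medium"},
]

print([r["id"] for r in clean_defects(records)])
# prints ['BUG-1', 'BUG-3']
```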

Involve QA Early and Often

AI is not meant to replace QA teams. It is meant to augment them. But to do that effectively, testers need to play an active role – not just as tool users, but as decision-makers.

- Bring QA into the tool selection and setup process

- Train them to interpret and refine AI suggestions

- Encourage testers to provide feedback loops into the system

- Make their domain knowledge part of how the AI learns

When testers guide AI instead of reacting to it, the outcomes are far more useful and trustworthy.

Track ROI with Real Metrics

To justify the investment and refine your strategy, measure the actual impact AI is having on your testing process. Monitor KPIs such as:

- Time saved on test execution or maintenance

- Improvements in defect detection rates

- Reduction in test suite bloat or redundancies

- Increases in coverage across critical flows

Regular ROI tracking helps you validate progress, identify gaps, and demonstrate value to leadership.
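Tracking these KPIs can start as a simple before-and-after comparison. The sketch below computes the percent change for each metric between a baseline and a pilot run. The metric names and numbers are made up for illustration and are not results from any real project.

```python
from typing import Dict


def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline, in percent (negative means a reduction)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return round((current - baseline) / baseline * 100, 1)


def kpi_report(baseline: Dict[str, float], current: Dict[str, float]) -> Dict[str, float]:
    """Percent change per KPI, for every metric present in the baseline."""
    return {name: percent_change(baseline[name], current[name]) for name in baseline}


# Hypothetical measurements before and after an AI testing pilot.
baseline = {"regression_minutes": 120, "defects_found": 40, "maintenance_hours": 20}
pilot = {"regression_minutes": 60, "defects_found": 46, "maintenance_hours": 15}

print(kpi_report(baseline, pilot))
# prints {'regression_minutes': -50.0, 'defects_found': 15.0, 'maintenance_hours': -25.0}
```

A report like this maps directly onto goals such as "reduce regression execution time by 50 percent": the target is either met in the numbers or it is not.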

Recalibrate Regularly

AI models adapt and learn, but they can also drift or degrade over time – especially if your systems or workflows change. Make periodic recalibration part of your routine:

- Review AI-generated test cases and recommendations

- Audit false positives and irrelevant alerts

- Fine-tune the model with updated test data and parameters

- Involve testers in regular quality reviews of AI output

This keeps the AI aligned with your goals and responsive to evolving project needs.

Common Pitfalls to Avoid in AI-Powered Testing

AI can be a game-changer in software testing, but only when implemented with clear intent, clean data, and team alignment. Without these, AI initiatives risk falling short or even creating new problems. Here are some of the most common pitfalls – and how to avoid them:

Treating AI as a Silver Bullet

AI is powerful, but it is not a plug-and-play miracle. It will not instantly fix your test bottlenecks, flaky scripts, or incomplete coverage unless you apply it with a clear strategy.

What to avoid:

- Blindly automating everything

- Assuming AI will figure it out without human input

- Skipping test design and relying fully on machine-generated cases

What to do instead: Treat AI as a tool in your toolbox. Pair it with thoughtful planning, experienced QA insight, and measurable goals.

Ignoring Data Hygiene

Poor data leads to poor decisions. If your test logs are inconsistent, your bug reports lack detail, or your automation results are full of false positives, AI will learn the wrong lessons.

What to avoid:

- Feeding outdated or irrelevant test cases into AI models

- Using untagged or duplicated test results

- Relying on sparse or biased defect histories

What to do instead: Curate your data. Clean up noisy logs, label your defects clearly, and ensure your test history reflects current application behavior. AI thrives on quality, not quantity.

Automating the Wrong Things

Just because you can automate a test doesn’t mean you should. Some scenarios are better left to human intuition – especially those involving exploratory testing, user experience validation, or highly dynamic content.

What to avoid:

- Trying to automate tests with unpredictable outcomes

- Creating AI-driven scripts for low-priority, one-time scenarios

- Replacing critical manual testing with brittle automation

What to do instead: Use AI to support repeatable, high-volume test cases where efficiency matters. Let humans focus on logic, ambiguity, and creativity – things machines still struggle with.

Excluding Your QA Team

AI is not a black box. If testers are not involved in configuring, interpreting, and fine-tuning the tool, it will not gain trust or traction.

What to avoid:

- Handing AI tools to QA teams without training or context

- Making decisions based solely on engineering or leadership input

- Keeping testers in a passive, observer role

What to do instead: Involve your QA team from day one. Train them to collaborate with AI, provide feedback, and take ownership of its outputs. AI works best when paired with experienced human testers who know the system inside and out.

How Quinnox’s Intelligent Quality (IQ) Approach Helps You Leverage AI in Testing

Quinnox’s Intelligent Quality (IQ) is a holistic approach for enterprises that require speed, precision, and scalability across fast-evolving digital environments. It brings together AI-driven automation, intelligent agents, and a no-code testing experience to simplify and strengthen every phase of the testing lifecycle.

Key capabilities include:

- Agentic AI that autonomously generates, explores, and adapts test cases using contextual understanding

- No-code automation that allows business users and testers to create and manage test scenarios without writing scripts

- Autonomous test generation from business requirements, enabling faster coverage with minimal manual input

- Real-device and browser farm execution for testing at scale across operating systems, browsers, and mobile platforms

- Built-in observability offering live insights into performance, coverage, and quality risks

- Continuous learning through test-cycle feedback, enhancing accuracy and reducing future defects

- Native CI/CD integrations for seamless adoption in DevOps pipelines and enterprise environments

These features make Quinnox IQ ideal for complex, high-impact sectors like banking, insurance, and retail, where quality at speed is non-negotiable.

At the heart of IQ is our Shift SMART framework, which helps enterprises detect defects early, reduce test maintenance, and accelerate releases without compromising quality.

Here’s what sets Shift SMART apart:

- 50% reduction in testing costs.

- 50% increase in test coverage.

- 45% fewer production defects.

- 25% faster testing cycles.

Curious how this actually works in real-world enterprise testing? We bottled the strategy, stats, insights, and real-world success stories into this one unmissable report. [Download the full report] – Get the inside look at how this framework is helping enterprises shift from traditional testing to true quality engineering.

So, ready to future-proof your QA? Book a 1:1 consultation and explore how Quinnox can help you scale intelligent testing across your enterprise.

FAQs about AI in Testing

AI in software testing refers to the use of artificial intelligence technologies – such as machine learning (ML), natural language processing (NLP), and pattern recognition – to enhance and automate the software testing process. Rather than relying on rigid test scripts, AI learns from historical data, user behavior, code changes, and test results to improve test coverage, prioritize tests, and detect defects more intelligently. It allows testing to become faster, smarter, and more adaptive.

AI boosts accuracy and speed by prioritizing critical tests, predicting defects, and auto-healing scripts when the UI changes. It also helps generate tests from user stories and detects anomalies that manual testing might miss – leading to faster cycles and fewer false positives.

AI testing delivers faster feedback, better coverage, and lower maintenance. It reduces redundant runs, improves accuracy, and even enables non-technical team members to contribute – resulting in higher quality with less effort.

AI depends on quality data and proper setup. It lacks domain intuition and needs human oversight to avoid irrelevant tests. Legacy environments may pose integration challenges, and overreliance can risk missing bugs. AI works best when paired with human expertise and an iterative, feedback-driven approach.

Start small – target regression or flaky tests. Choose tools with AI features like NLP and self-healing. Clean your test data, run a pilot, measure impact, and train your team to collaborate with AI insights. With the right foundation, most teams can adopt AI gradually and see early wins within weeks.