Data migration is a strategic imperative, whether you’re modernizing legacy systems, moving to the cloud, or consolidating platforms. But its success depends on one thing above all: a robust and reliable validation process.

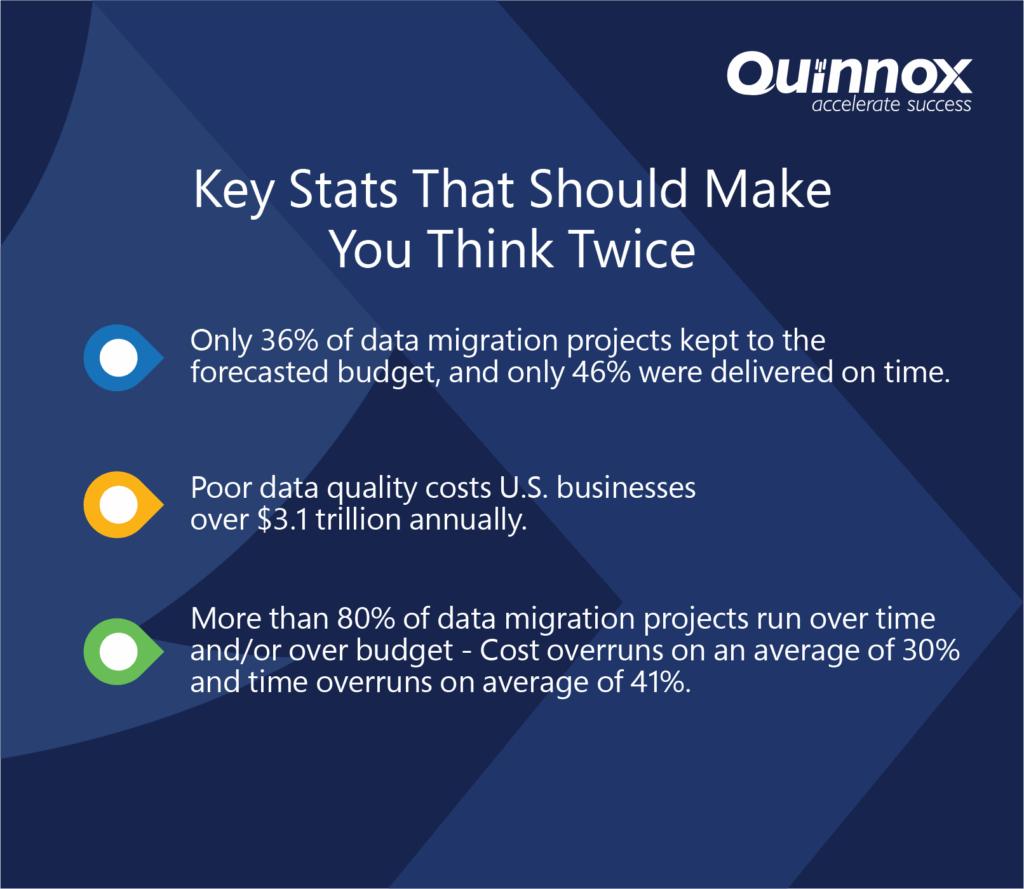

According to Oracle, 83% of data migration projects either fail or exceed their budgets and timelines, primarily due to poor planning and inadequate validation mechanisms. Another study by Experian reveals that 95% of businesses suspect their data might be inaccurate, yet only 44% have a consistent approach to data quality checks across their systems.

This lack of data confidence is expensive: Gartner estimates that bad data costs organizations an average of $12.9 million a year in lost productivity and missed opportunities. And in sectors like banking, healthcare, and telecom, a single data mismatch during migration can result in compliance failures, customer churn, and even legal liabilities.

“Data migration without validation is like deploying code without testing — it’s a risk you can’t afford.”

Despite being a critical phase, data migration validation is often under-prioritized, poorly executed, or done too late in the process. Yet getting it right isn’t just about preventing failures. It’s about building trust in your systems, maintaining business continuity, and setting a strong foundation for future growth.

In this blog, we’ll demystify what data migration validation really entails, explore techniques to do it effectively, unpack common challenges, and share industry best practices backed by real-world examples — so you can migrate with confidence and precision.

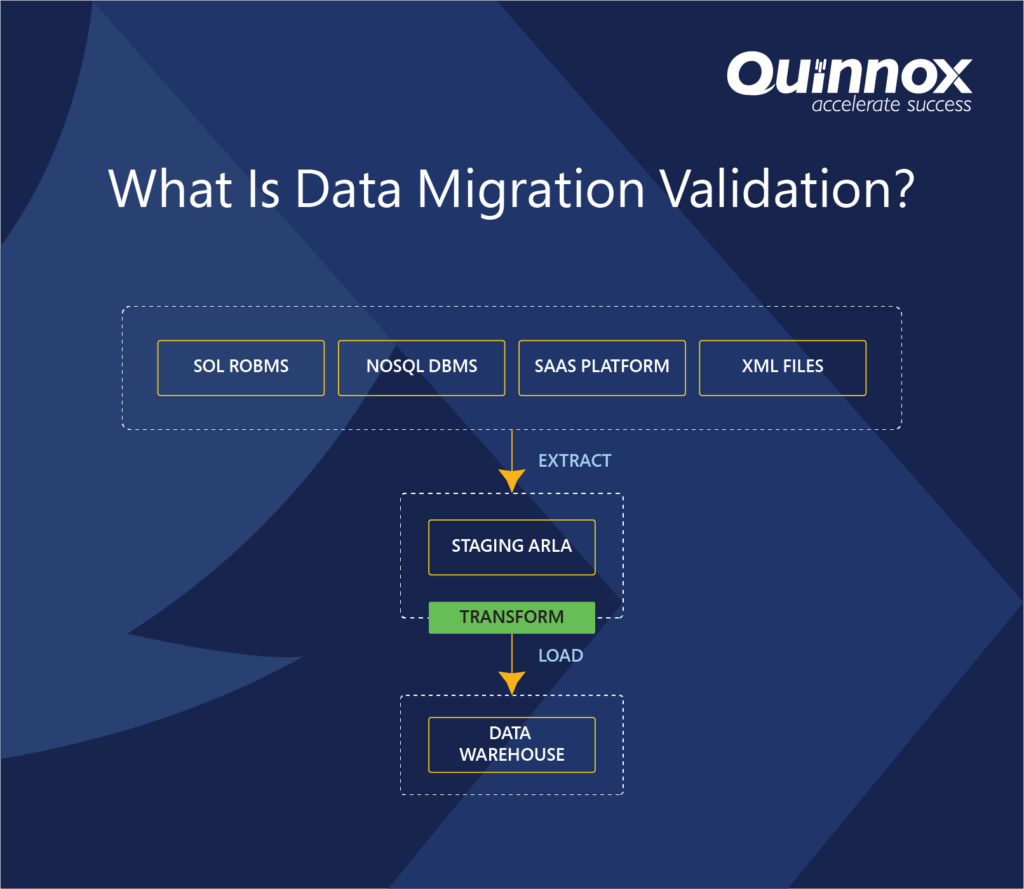

What Is Data Migration Validation?

Data migration validation is the process of ensuring that data has been accurately, completely, and securely transferred from a source system to a destination system during a migration project. It involves verifying that:

- All required data has been moved

- The data remains consistent and uncorrupted

- The structure, format, and relationships are preserved

- The destination system can use the data as expected

Think of it like moving houses. It’s not enough to just load the boxes onto the truck. You have to make sure everything arrives at the new home intact, organized, and ready to be used.

Why Data Migration Validation Is a Business Imperative

Data migration validation is essential because it ensures the integrity, accuracy, and usability of your data when moving from one system to another. Without it, you’re essentially gambling with one of your most valuable assets—your data.

When migrations aren’t properly validated:

- Critical data can get lost, corrupted, or mismatched, leading to faulty analytics, broken applications, and incorrect business decisions.

- Regulatory compliance may be compromised, exposing your organization to legal penalties, especially under data privacy laws like GDPR or HIPAA.

- Operations can be disrupted, as systems relying on clean, accurate data may fail or behave unpredictably.

- Customer trust and brand reputation can suffer, particularly if data issues affect user experience, billing, or communication.

Given that a high percentage of migration projects fail or go over budget—and many do so due to data quality issues—validating data throughout the migration lifecycle is not optional. It’s a must for protecting business continuity, ensuring compliance, and preserving stakeholder trust.

In short: Data migration validation isn’t just a technical checkpoint—it’s a strategic safeguard for your business.

The Three Phases of Data Migration Validation

Validating data effectively means embedding validation into every stage of the migration lifecycle. Here’s how:

1. Pre-Migration Validation (Plan & Profile)

Before any data moves, validate the source data:

- Data profiling: Identify anomalies, missing values, duplicates, and inconsistencies.

- Schema validation: Ensure source schema is compatible with the target system.

- Data mapping rules: Define and test transformation logic.

Best Practice: Use profiling tools to automatically detect data quality issues before migration begins.
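As a rough illustration of what profiling looks for, the sketch below counts missing values and duplicate keys in a handful of in-memory records. The function name `profile_records`, the field names, and the sample data are all hypothetical; a real project would typically run equivalent checks with a dedicated profiling tool or directly against the source database.

```python
from collections import Counter

def profile_records(records, key_field, required_fields):
    """Summarize missing values and duplicate keys in a list of dict records."""
    missing = Counter()
    for row in records:
        for field in required_fields:
            value = row.get(field)
            # Treat None and blank strings as missing.
            if value is None or str(value).strip() == "":
                missing[field] += 1
    key_counts = Counter(row.get(key_field) for row in records)
    duplicates = sorted(k for k, n in key_counts.items() if k is not None and n > 1)
    return {
        "row_count": len(records),
        "missing_by_field": dict(missing),
        "duplicate_keys": duplicates,
    }

# Hypothetical source extract.
sample = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 2, "email": "b@example.com"},
    {"customer_id": 3, "email": None},
]
report = profile_records(sample, key_field="customer_id",
                         required_fields=["customer_id", "email"])
print(report)
```

On this sample the report flags two missing emails and one duplicated key (customer 2), which is the kind of finding you want before any data moves.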

2. In-Migration Validation (Test & Track)

While data is being migrated, continuously verify:

- Record-level checks: Ensure every record is moved and transformed correctly.

- Row counts and checksum validation: Use hash totals to verify data integrity.

- ETL testing: Validate Extract, Transform, Load (ETL) pipelines for accuracy.

Best Practice: Implement parallel run testing—run both old and new systems simultaneously and compare outputs for a sample period.
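To make the row count and checksum idea concrete, here is a minimal sketch that fingerprints a set of rows so source and target can be compared without caring about row order. The helper `table_fingerprint` and the sample rows are assumptions for illustration. The approach also assumes both systems return values in the same types and formats; if they don't, normalize first.

```python
import hashlib

def table_fingerprint(rows):
    """Return (row_count, digest) for an iterable of rows, independent of row order."""
    count = 0
    combined = 0
    for row in rows:
        # Canonical text form of the row; repr keeps types distinct (1 vs "1").
        encoded = repr(tuple(row)).encode("utf-8")
        digest = hashlib.sha256(encoded).digest()
        # Summing per-row hashes modulo 2**256 makes the result order-independent.
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, format(combined, "064x")

source_rows = [(1, "Alice", 100.0), (2, "Bob", 250.5)]
target_rows = [(2, "Bob", 250.5), (1, "Alice", 100.0)]     # same data, different order
corrupted_rows = [(1, "Alice", 100.0), (2, "Bob", 250.0)]  # one value changed

print(table_fingerprint(source_rows) == table_fingerprint(target_rows))     # True
print(table_fingerprint(source_rows) == table_fingerprint(corrupted_rows))  # False
```

A matching fingerprint is strong evidence the data is identical, while a mismatch only tells you that something differs, not where. That is why record-level checks are still needed.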

3. Post-Migration Validation (Audit & Confirm)

After the migration:

- Reconciliation reports: Match source and target records.

- Functional testing: Ensure business applications behave as expected with new data.

- User acceptance testing (UAT): Involve business users to confirm operational continuity.

Best Practice: Use automation frameworks for regression testing to accelerate post-migration validation.
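A reconciliation report can be as simple as three lists: records missing from the target, records that appeared unexpectedly, and records whose contents differ. The sketch below assumes both datasets fit in memory and are keyed by primary key; the function name `reconcile` and the sample data are hypothetical.

```python
def reconcile(source, target):
    """Compare two dicts keyed by primary key; values are the row contents."""
    source_keys, target_keys = set(source), set(target)
    return {
        "missing_in_target": sorted(source_keys - target_keys),
        "unexpected_in_target": sorted(target_keys - source_keys),
        "mismatched": sorted(
            key for key in source_keys & target_keys if source[key] != target[key]
        ),
    }

source = {1: ("Alice", "alice@example.com"), 2: ("Bob", "bob@example.com"), 3: ("Cara", None)}
target = {1: ("Alice", "alice@example.com"), 2: ("Bob", "BOB@example.com"), 4: ("Dan", None)}

recon = reconcile(source, target)
print(recon)
```

Here record 3 never arrived, record 4 has no source counterpart, and record 2 changed in transit. For large tables the same comparison is usually pushed down into SQL or a dedicated comparison tool.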

Techniques Used for Data Migration Validation:

- Record Count Matching: Ensures that the number of records in the source matches the target.

- Data Sampling: Random sampling and validation of records (statistical approach).

- Checksums/Hashing: Compares checksums of data files pre- and post-migration.

- Schema Validation: Ensures that fields, data types, and constraints are intact.

- Referential Integrity Checks: Ensures relationships (e.g., foreign keys) are preserved.

- Automated Regression Testing: Validates that business processes using data still work correctly.
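As one example from the list above, a referential integrity check can be written as a query for child rows whose parent is missing. The sketch uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables and their contents are invented for illustration, and the same query pattern would need adapting to your target database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO orders VALUES (10, 1, 99.0), (11, 2, 15.5), (12, 3, 42.0);
""")

# Orphaned orders: child rows whose parent key is absent from the parent table.
orphans = conn.execute("""
    SELECT o.id, o.customer_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.id = o.customer_id
    WHERE c.id IS NULL
""").fetchall()

print(orphans)  # [(12, 3)]
```

An empty result means every order still points at a customer that exists in the target.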

Key Stats That Should Make You Think Twice

If you’re not validating data effectively, you’re essentially gambling with your organization’s operational integrity.

Common Challenges in Data Migration Validation

Despite planning, organizations face significant hurdles during validation. Understanding these early on helps prevent downstream risks.

- Volume and Complexity of Data: Large-scale migrations (e.g., terabytes) make full validation time-consuming and resource-intensive.

- Data Quality Issues in Source System: Legacy systems often have inconsistent, duplicate, or incomplete data.

- Lack of Automated Validation Tools: Manual validation is error-prone and not scalable.

- Inconsistent Data Formats: Source and target may use different date/time formats, character sets, or units.

- Schema Mismatches: Even minor changes like altered column names or added constraints can cause mismatches.

- Insufficient Testing Resources: Lack of skilled QA personnel for data testing.

- Tight Migration Timelines: Businesses often expect minimal downtime, reducing time for thorough validation.
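The format challenge is worth a quick illustration: two values can represent the same date and still fail a naive string comparison. The sketch below normalizes both sides to ISO 8601 before comparing. The two formats shown are assumptions; in practice you would take them from each system's documentation rather than guessing.

```python
from datetime import datetime

def to_iso_date(value, fmt):
    """Parse a date string in a known format and return it as YYYY-MM-DD."""
    return datetime.strptime(value.strip(), fmt).date().isoformat()

SOURCE_FMT = "%d/%m/%Y"  # assumed legacy format, day first
TARGET_FMT = "%Y-%m-%d"  # assumed target format

source_value = "07/03/2021"
target_value = "2021-03-07"

raw_match = source_value == target_value
normalized_match = (
    to_iso_date(source_value, SOURCE_FMT) == to_iso_date(target_value, TARGET_FMT)
)

print(raw_match)         # False
print(normalized_match)  # True
```

Without the normalization step, this record would be reported as a mismatch even though nothing was lost.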

Best Practices for Successful Data Migration Validation

Step 1: Start Validation Early and Integrate It Across the Lifecycle

Validation isn’t something you save for the end — it starts well before migration begins. Early profiling can uncover gaps like missing IDs, duplicates, or non-standard formats that could derail your project later. For example, a global bank identified that 20% of its customer records lacked valid identifiers during the planning phase — fixing it upfront helped them avoid regulatory issues down the line.

By validating across every stage — from planning through to post-migration — you ensure issues are caught in real time, not after the damage is done.

Step 2: Automate Wherever Possible to Boost Speed and Accuracy

Manual validation is time-intensive, error-prone, and unsustainable at scale. Automation introduces consistency, speed, and repeatability across validation tasks. Automated tools can perform large-scale data comparisons, verify transformation logic, generate discrepancy reports, and streamline testing cycles. This not only improves efficiency but also strengthens audit readiness and reduces dependency on manual interventions.

Step 3: Validate Business Logic, Not Just Fields

Beyond checking for structural accuracy, validation must ensure that business logic and operational rules remain intact after migration. This includes verifying that calculations, relationships, conditions, and decision logic encoded within the data function as expected in the target environment. Functional alignment is essential to ensure the new system delivers consistent, business-relevant outcomes.
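One way to picture a business-logic check: every field in an order can migrate correctly while the order total no longer equals the sum of its line items. The sketch below tests that rule. The rule itself, the function `find_total_mismatches`, and the data are hypothetical stand-ins for whatever calculations matter in your domain.

```python
from decimal import Decimal

def find_total_mismatches(orders, line_items):
    """Return IDs of orders whose stored total differs from the sum of their line items."""
    sums = {}
    for item in line_items:
        order_id = item["order_id"]
        sums[order_id] = sums.get(order_id, Decimal("0")) + item["amount"]
    return sorted(
        order["id"]
        for order in orders
        if sums.get(order["id"], Decimal("0")) != order["total"]
    )

orders = [
    {"id": 1, "total": Decimal("30.00")},
    {"id": 2, "total": Decimal("12.50")},
]
line_items = [
    {"order_id": 1, "amount": Decimal("10.00")},
    {"order_id": 1, "amount": Decimal("20.00")},
    {"order_id": 2, "amount": Decimal("12.00")},  # a line item was lost or altered
]

mismatches = find_total_mismatches(orders, line_items)
print(mismatches)  # [2]
```

`Decimal` is used instead of floats so that monetary comparisons are exact.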

Step 4: Make It a Cross-Functional Effort

Effective data validation requires collaboration between technical teams and business stakeholders. While IT manages structural and transformation accuracy, business users validate the data’s usability and relevance. Involving data governance, compliance, and subject matter experts ensures that validation is comprehensive and aligned with both technical standards and regulatory requirements.

Step 5: Establish Measurable Validation KPIs and Monitor Them

You can’t manage what you don’t measure. Define measurable KPIs for data validation — such as error rates, reconciliation percentages, audit findings, and time-to-resolution — to track progress and identify bottlenecks. Regularly monitoring these metrics ensures early detection of issues and provides transparency for leadership and audit teams. Establishing a KPI-driven validation framework not only improves quality assurance but also builds confidence across the organization that migration outcomes are reliable and audit-ready.
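Two of these KPIs are simple ratios, sketched below. The definitions used here (matched records over source records, errored records over source records) are one reasonable interpretation rather than a standard, and the function name and figures are made up for illustration.

```python
def validation_kpis(source_count, matched_count, error_count):
    """Compute reconciliation percentage and error rate relative to the source row count."""
    if source_count <= 0:
        raise ValueError("source_count must be positive")
    return {
        "reconciliation_pct": round(100 * matched_count / source_count, 2),
        "error_rate_pct": round(100 * error_count / source_count, 2),
    }

kpis = validation_kpis(source_count=10_000, matched_count=9_950, error_count=50)
print(kpis)  # {'reconciliation_pct': 99.5, 'error_rate_pct': 0.5}
```

Tracking these numbers per migration batch makes it easier to spot a run that drifts away from the agreed threshold.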

Step 6: Document Everything for Traceability and Compliance

Comprehensive documentation is essential for traceability, compliance, and rollback preparedness. This includes records of test plans, validation results, data mappings, error logs, and sign-off approvals. Well-maintained documentation supports audits, facilitates troubleshooting, and ensures accountability throughout the migration process.

Step 7: Prepare for the Worst — Design Rollback and Recovery Options

No matter how thorough the validation, there must be a contingency plan in place. A rollback and recovery strategy enables swift restoration of data or systems in case of validation failure or unforeseen issues post-migration. This includes predefined rollback procedures, backup plans, and clear ownership to ensure minimal disruption and data loss.
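At the smallest scale, a rollback can be a database transaction that is only committed once a validation check passes. The sketch below shows that pattern with Python's built-in `sqlite3` module. The `customers` table, the `load_with_rollback` helper, and the row-count check are assumptions for illustration; a production rollback plan would also cover backups, cutover procedures, and ownership.

```python
import sqlite3

def load_with_rollback(conn, rows, expected_count):
    """Insert rows in one transaction; keep them only if the post-load check passes."""
    try:
        conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", rows)
        (loaded,) = conn.execute("SELECT COUNT(*) FROM customers").fetchone()
        if loaded != expected_count:
            raise ValueError(f"expected {expected_count} rows, found {loaded}")
        conn.commit()
        return True
    except (sqlite3.Error, ValueError):
        # Undo the uncommitted inserts so the target returns to its previous state.
        conn.rollback()
        return False

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

batch = [(1, "Alice"), (2, "Bob")]
failed_load = load_with_rollback(conn, batch, expected_count=3)  # check fails, rolled back
rows_after_failure = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
good_load = load_with_rollback(conn, batch, expected_count=2)    # check passes, committed
rows_after_success = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

print(failed_load, rows_after_failure, good_load, rows_after_success)
```

The first load is discarded because the row count does not match what was expected, leaving the table empty; the second load passes the check and is committed.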

Need Help with Data Migration Validation?

In the era of cloud transformation, real-time decisioning, and AI-powered experiences, data is the fuel that keeps your business running. But just like contaminated fuel can stall an engine, unvalidated or mishandled data can paralyze operations, mislead strategy, and shatter customer trust.

Data migration validation is your safeguard. It ensures the journey from legacy to modern, on-prem to cloud, or siloed to unified isn’t just about moving bytes—but about preserving the value, integrity, and usability of your most critical asset.

At Quinnox, we embed intelligent validation capabilities into every step of your migration journey. Powered by our intelligent application management platform, Qinfinite, we automate validation at scale, apply AI for anomaly detection, and ensure compliance from day one. Our approach blends speed with precision, enabling you to migrate confidently without compromising data quality or governance.

Because in today’s world, it’s not just about moving fast—it’s about moving smart. And with the right validation strategy, powered by the right partner, your next migration project won’t just succeed—it’ll set a new standard.

Dynamic Risk Assessment: In telecom, data privacy regulations (like GDPR) are crucial. AI assesses the impact of privacy regulations on customer data handling practices, ensuring compliance without compromising service.

Example: AI helps telecom providers audit data storage practices to align with GDPR, ensuring customer privacy and regulatory adherence.

5. Retail

Automated Policy and Document Updates: Retailers must adapt to consumer protection and employee rights regulations. AI updates internal policies based on regulatory changes, keeping customer interactions and employee practices compliant.

Example: AI generates new training material for customer service teams when consumer rights regulations are updated, ensuring compliance with minimal manual effort.

With iAM, every application becomes a node within a larger, interconnected system. The “intelligent” part isn’t merely about using AI to automate processes but about leveraging data insights to understand, predict, and improve the entire ecosystem’s functionality.

Consider the practical applications:

Real-World Example: Enhancing Customer Service in Finance

Consider a large financial institution seeking to improve its customer service experience. By leveraging Agent Management Services, the institution can:

- Deploy a network of Al-powered agents capable of handling a wide range of customer inquiries, such as account balance inquiries, transaction history checks, and basic support requests.

- Train and optimize these agents to accurately understand customer intent, provide timely and helpful responses, and even anticipate customer needs proactively.

- Ensure the security and compliance of these agents, safeguarding sensitive customer data and adhering to strict financial regulations.

- Continuously monitor and refine agent performance, identifying areas for improvement and making necessary adjustments to optimize the customer experience.

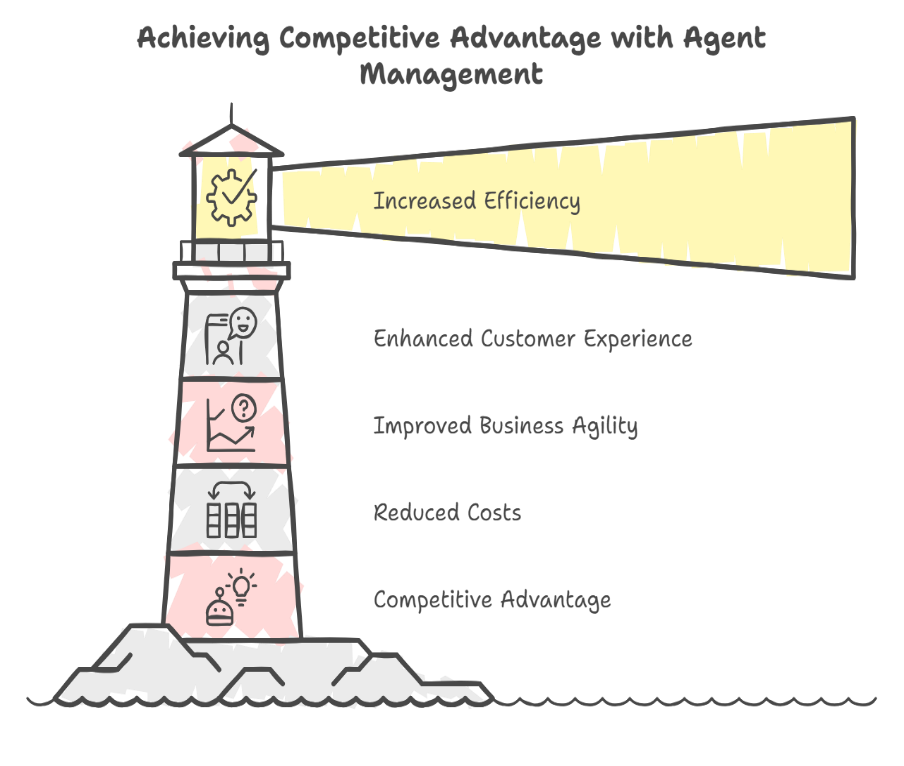

- Increased Efficiency and Productivity: Automating routine tasks and streamlining workflows, freeing up human resources for more strategic initiatives.

- Enhanced Customer Experience: Delivering personalized and efficient customer service, improving customer satisfaction and loyalty.

- Improved Business Agility: Enabling faster response times to changing market conditions and customer demands.

- Reduced Costs: Optimizing resource allocation and minimizing operational expenses.

- Competitive Advantage: Gaining a competitive edge by leveraging the power of Al and automation to innovate and deliver superior products and services.

Conclusion:

The rise of agent-driven systems marks a significant shift in the technology landscape. By embracing Agent Management Services, organizations can navigate this new era with confidence, unlock the full potential of AI, and gain a competitive advantage in the digital age.

Qinfinite’s Auto Discovery continuously scans and maps your entire enterprise IT landscape, building a real-time topology of systems, applications, and their dependencies across business and IT domains. This rich understanding of the environment is captured in a Knowledge Graph, which serves as the foundation for making sense of observability data by providing vital context about upstream and downstream impacts.

2. Deep Data Analysis for Actionable Insights:

Qinfinite’s Deep Data Analysis goes beyond simply aggregating observability data. Using sophisticated AI/ML algorithms, it analyzes metrics, logs, traces, and events to detect patterns, anomalies, and correlations. By correlating this telemetry data with the Knowledge Graph, Qinfinite provides actionable insights into how incidents affect not only individual systems but also business outcomes. For example, it can pinpoint how an issue in one microservice may ripple through to other systems or impact critical business services.

3. Intelligent Incident Management: Turning Insights into Actions:

Qinfinite’s Intelligent Incident Management takes observability a step further by converting these actionable insights into automated actions. Once Deep Data Analysis surfaces insights and potential root causes, the platform offers AI-driven recommendations for remediation. But it doesn’t stop there, Qinfinite can automate the entire remediation process. From restarting services to adjusting resource allocations or reconfiguring infrastructure, the platform acts on insights autonomously, reducing the need for manual intervention and significantly speeding up recovery times.

By automating routine incident responses, Qinfinite not only shortens Mean Time to Resolution (MTTR) but also frees up IT teams to focus on strategic tasks, moving from reactive firefighting to proactive system optimization.

Did you know? According to a report by Forrester, companies using cloud-based testing environments have reduced their testing costs by up to 45% while improving test coverage by 30%.

FAQs Related to Data Migration Validation

Data migration validation is the process of checking that data has been accurately, completely, and securely transferred from the source system to the target system during a migration.

Validation ensures data integrity, prevents errors, supports compliance, and helps avoid costly business disruptions caused by missing, corrupt, or misaligned data.

Validation should occur throughout the migration lifecycle—before, during, and after migration—to catch issues early and ensure consistent data quality at every step.

- Start early and validate continuously

- Automate wherever possible

- Involve business and IT stakeholders

- Validate both data structure and business logic

- Document every step for traceability and compliance

- Validation checks if data is accurate, complete, and usable in the new system.

- Verification confirms that data was correctly transferred or processed, often by comparing source and target records.