In the modern digital landscape, data has emerged as a cornerstone of innovation, decision-making, and competitive advantage. However, the value of data lies not in its volume, but in its quality. According to a Gartner report, poor quality data costs organizations an average of $12.9 million per year.

As organizations increasingly integrate Artificial Intelligence (AI) into their core operations, the importance of data quality has reached new heights. No matter how advanced an AI system may be, its output is only as good as the information it’s built on. Without data that is accurate, consistent, timely, and relevant, even the most promising AI initiatives are bound to fail. Gartner (via VentureBeat) reports that 85% of AI projects fail due to poor or insufficient data, an alarming statistic that emphasizes the urgent need for smarter data management strategies.

This is where the use of AI for data quality becomes significant for efficient data management. Unlike traditional methods, AI-powered solutions can automatically detect anomalies, correct errors, and harmonize disparate datasets at scale to make them AI-ready.

This blog explores how AI itself is revolutionizing the way businesses manage data quality, turning traditional challenges into opportunities for smarter, faster, and more reliable data governance that lays the foundation for the long-term success of AI initiatives.

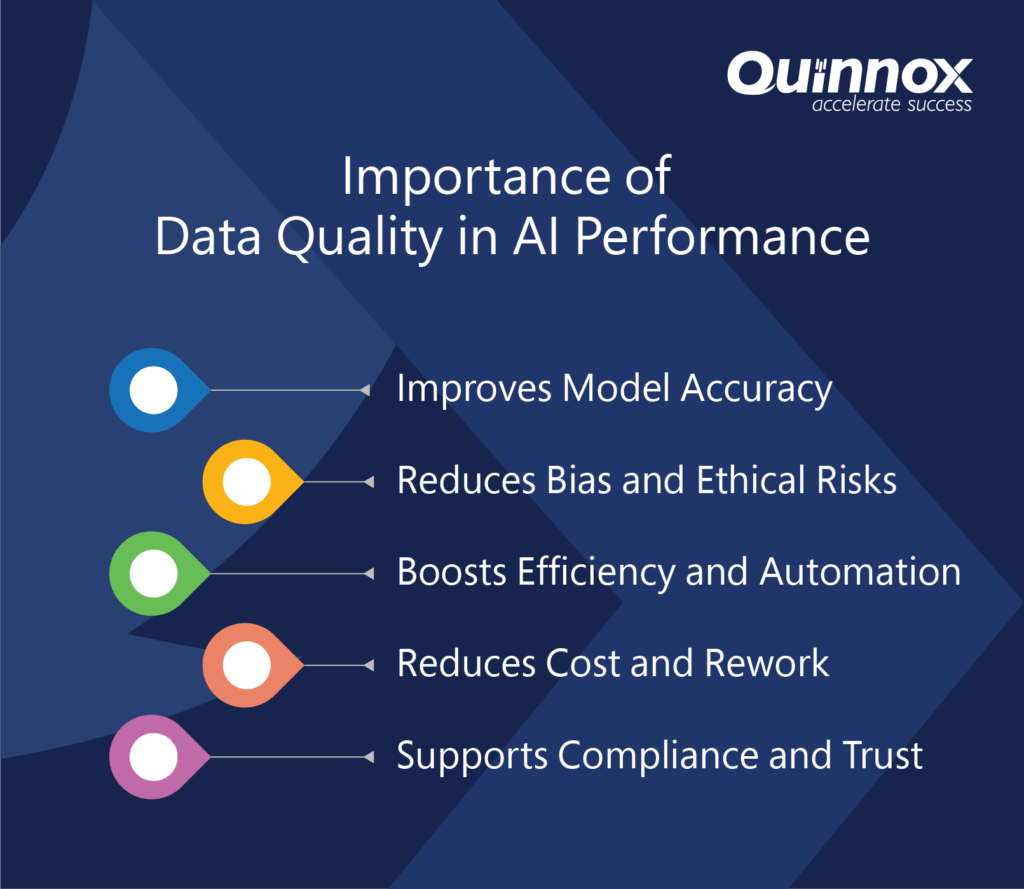

The Imperative of Data Quality in AI-Driven Systems

AI systems rely heavily on data for training models, making predictions, and automating decisions. However, even the most sophisticated AI algorithms cannot compensate for poor-quality data. Incomplete, erroneous, or biased data leads to flawed insights, reduced trust, and costly errors.

Consider a healthcare AI model designed to predict patient outcomes. If the input data contains inaccuracies or missing values, the predictions might fail, putting lives at risk. Similarly, in finance, AI models fed with inconsistent transaction data may miss fraudulent activities or flag false positives, damaging both customer trust and compliance efforts.

As AI becomes pervasive across industries, ensuring high-quality data becomes an essential foundation for AI’s success. The question then becomes: How can organizations effectively maintain data quality in an era where data is growing exponentially in volume, velocity, and variety?

Traditional Data Quality Management: Limits and Challenges

Before AI took center stage in data management, organizations relied on manual processes, rule-based systems, and basic statistical methods to monitor and improve data quality. These approaches, while valuable, often struggle with scale, complexity, and speed. Some key limitations include:

- Manual Effort and Expertise: Data cleansing, validation, and enrichment require significant human intervention and specialized knowledge, making the process slow and prone to error.

- Static Rule Sets: Rule-based quality checks are inflexible. They cannot easily adapt to evolving data types or detect subtle anomalies outside predefined patterns.

- Fragmented Tools: Many organizations use disparate tools that don’t integrate seamlessly, leading to data silos and inconsistent quality standards.

- Volume and Velocity: Rapid data inflow, especially real-time streaming data, often overwhelms traditional methods, which cannot detect and correct issues quickly enough.

Given these challenges, a new paradigm was necessary: one where the capabilities of AI are harnessed to enhance the management of data quality itself.

AI as a Catalyst for Enhanced Data Quality Management

Artificial intelligence, by its very design, thrives on data. Yet, paradoxically, it is AI that now empowers organizations to manage data more intelligently. Through advanced algorithms, machine learning models, natural language processing (NLP), and automation, AI addresses the core pain points of data quality management with unprecedented effectiveness.

1. Intelligent Data Profiling and Anomaly Detection

AI-driven data profiling moves beyond simple statistics like averages or missing counts. Machine learning models analyze data patterns to understand the normal distribution of values across different dimensions, enabling the detection of subtle inconsistencies that traditional tools might miss.

For instance, unsupervised learning algorithms can flag anomalies such as sudden spikes, drops, or unusual combinations of attributes in datasets. These anomalies might indicate data corruption, entry errors, or emerging issues in data collection processes. Unlike rule-based systems, AI models continuously learn and adapt, improving detection over time without explicit reprogramming.
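To make the idea of flagging spikes and drops concrete, here is a minimal sketch. It uses a robust statistical score (median and median absolute deviation) as a simple stand-in for the unsupervised models described above; it is not a learned model. The function names, the sample data, and the 3.5 cutoff are illustrative assumptions.

```python
from statistics import median

def robust_z_scores(values):
    """Return modified z-scores based on the median and the MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        # All values are (nearly) identical, so nothing stands out.
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]

def flag_anomalies(values, threshold=3.5):
    """Return the indices whose absolute modified z-score exceeds the threshold."""
    scores = robust_z_scores(values)
    return [i for i, s in enumerate(scores) if abs(s) > threshold]

# Hypothetical daily order counts with one spike and one drop.
daily_order_counts = [102, 98, 105, 99, 101, 97, 640, 103, 100, 3]
print(flag_anomalies(daily_order_counts))  # -> [6, 9]
```

Because the score is built on the median rather than the mean, a single extreme value does not distort the baseline it is compared against. A production system would more likely use a trained model and per-dimension baselines.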

2. Automated Data Cleansing and Enrichment

AI excels at automating repetitive and complex tasks. Data cleansing, which includes removing duplicates, correcting errors, and standardizing formats, often consumes a disproportionate amount of time. AI-powered tools can automatically identify duplicates using fuzzy matching techniques, fill missing values by learning from historical data, and correct typographical errors with natural language understanding.

Moreover, AI can enrich datasets by integrating external data sources, extracting relevant entities from unstructured text, or categorizing information contextually. This enrichment ensures that datasets not only become cleaner but also more informative and actionable.
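As a rough illustration of fuzzy duplicate detection, the sketch below compares normalized names with the standard library's `difflib.SequenceMatcher`. The helper names, the sample customer list, and the 0.85 threshold are assumptions for this example; real tools typically add blocking, multiple fields, and learned similarity models.

```python
from difflib import SequenceMatcher

def normalize(name):
    """Lowercase, drop simple punctuation, and collapse whitespace."""
    cleaned = name.lower().replace(".", "").replace(",", "")
    return " ".join(cleaned.split())

def similarity(a, b):
    """Return a similarity ratio between 0.0 and 1.0 for two names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def find_likely_duplicates(records, threshold=0.85):
    """Return index pairs (i, j) whose similarity meets the threshold."""
    pairs = []
    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            if similarity(records[i], records[j]) >= threshold:
                pairs.append((i, j))
    return pairs

# Hypothetical customer names.
customers = ["Acme Corp.", "ACME Corp", "Globex Inc", "Acme Corporation", "Initech"]
print(find_likely_duplicates(customers))  # -> [(0, 1)]
```

Lowering the threshold to 0.7 would also pair both "Acme Corp" variants with "Acme Corporation", which shows why the cutoff is usually tuned against reviewed examples. The pairwise loop is quadratic, so it only suits small record sets.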

3. Contextual Data Quality Assessment

Data quality is rarely absolute; it depends heavily on context. For example, acceptable data thresholds differ across the healthcare, finance, and retail sectors. AI enables contextual understanding through semantic analysis and domain-specific models. By interpreting the meaning of data fields and their relationships, AI systems can tailor quality checks to fit specific business rules and regulatory requirements.

This dynamic adaptability ensures that data quality standards remain relevant and robust even as organizational needs evolve.
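The point that thresholds depend on context can be sketched with a per-domain policy table. This only shows the policy lookup, not the semantic analysis that would choose or adapt the policies. The class name, field names, and every threshold value here are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class QualityPolicy:
    max_missing_ratio: float  # highest tolerated share of missing values
    max_age_hours: float      # how stale a dataset may be

# Hypothetical thresholds, for illustration only.
POLICIES = {
    "healthcare": QualityPolicy(max_missing_ratio=0.0, max_age_hours=1),
    "finance": QualityPolicy(max_missing_ratio=0.01, max_age_hours=24),
    "retail": QualityPolicy(max_missing_ratio=0.05, max_age_hours=72),
}

def assess(domain, missing_ratio, age_hours):
    """Return the list of policy violations for a dataset in the given domain."""
    policy = POLICIES[domain]
    issues = []
    if missing_ratio > policy.max_missing_ratio:
        issues.append("too_many_missing_values")
    if age_hours > policy.max_age_hours:
        issues.append("stale_data")
    return issues

# The same dataset passes in one context and fails in another.
print(assess("retail", missing_ratio=0.02, age_hours=12))      # -> []
print(assess("healthcare", missing_ratio=0.02, age_hours=12))  # -> ['too_many_missing_values', 'stale_data']
```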

4. Real-Time Monitoring and Alerts

In fast-paced environments, timely detection of data quality issues is critical. AI-powered streaming analytics monitor data flows in real time, instantly identifying deviations from expected patterns. When anomalies arise, automated alerts notify relevant stakeholders, enabling rapid investigation and remediation.

Such proactive monitoring helps prevent the downstream impact of bad data, minimizing disruption to AI models and business processes.
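A minimal sketch of this kind of monitoring compares each incoming value with a rolling window of recent values and returns an alert message on a large deviation. The class name, window size, and threshold are assumptions; a real deployment would sit on a streaming platform and route alerts to a notification system.

```python
from collections import deque
from statistics import mean, stdev

class StreamMonitor:
    """Flag values that deviate strongly from a rolling window of recent values."""

    def __init__(self, window=20, threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value):
        """Return an alert message if the value looks anomalous, else None."""
        alert = None
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) > self.threshold * sigma:
                alert = f"value {value} deviates from recent mean {mu:.1f}"
        if alert is None:
            # Only normal values update the baseline, so one bad reading
            # does not mask the readings that follow it.
            self.history.append(value)
        return alert

# Hypothetical sensor readings with one bad value.
monitor = StreamMonitor()
for reading in [10, 11, 9, 10, 12, 10, 95, 11]:
    message = monitor.observe(reading)
    if message:
        print("ALERT:", message)
```

Keeping flagged values out of the window is a design choice: it keeps the baseline clean, but it also means a genuine, lasting shift in the data will keep raising alerts until someone resets or retrains the monitor.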

5. Root Cause Analysis and Predictive Insights

Beyond flagging errors, AI can help uncover underlying causes of data quality problems. By correlating various data attributes and operational metrics, machine learning models can pinpoint sources of inconsistency, whether it’s a faulty data entry point, integration failure, or system malfunction.

Furthermore, predictive analytics forecast potential quality degradations before they happen, allowing organizations to take preventive action rather than reactive fixes.
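One simple way to approximate this correlation step is to compare error rates across attribute values and surface the value with the highest rate. The sketch below is a basic group-by, not a machine learning model, and the record layout (`source`, `region`, `valid`) is a hypothetical schema.

```python
from collections import Counter

def error_rate_by(records, attribute):
    """Return {attribute value: share of invalid records} for one attribute."""
    totals, errors = Counter(), Counter()
    for rec in records:
        key = rec[attribute]
        totals[key] += 1
        if not rec["valid"]:
            errors[key] += 1
    return {key: errors[key] / totals[key] for key in totals}

def likely_root_cause(records, attributes):
    """Return (attribute, value, error_rate) for the highest error rate found."""
    best = None
    for attr in attributes:
        for value, rate in error_rate_by(records, attr).items():
            if best is None or rate > best[2]:
                best = (attr, value, rate)
    return best

# Hypothetical validation results tagged with where each record came from.
records = [
    {"source": "web_form", "region": "EU", "valid": True},
    {"source": "web_form", "region": "US", "valid": True},
    {"source": "batch_import", "region": "EU", "valid": False},
    {"source": "batch_import", "region": "US", "valid": False},
    {"source": "batch_import", "region": "US", "valid": True},
    {"source": "api", "region": "EU", "valid": True},
]
attribute, value, rate = likely_root_cause(records, ["source", "region"])
print(attribute, value, round(rate, 2))  # -> source batch_import 0.67
```

Here the errors spread evenly across regions but concentrate in one source, which points the investigation at the batch import rather than at a regional issue.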

Transforming Data Quality Governance with AI

Data quality governance traditionally revolves around setting policies, assigning responsibilities, and establishing standards to ensure data is accurate, complete, and reliable. However, integrating AI into this governance ecosystem fundamentally reshapes how organizations oversee, maintain, and improve data quality. AI does more than automate tasks; it fosters a dynamic, collaborative, and outcome-driven governance culture that scales with organizational growth and complexity.

Let’s talk about the different ways in which AI transforms data quality governance:

Enhancing Collaboration across Teams

One of the biggest hurdles in data quality governance is the siloed nature of data roles. Data engineers, analysts, and business users often operate with different tools, priorities, and understandings of what constitutes “quality” data. This disconnect can slow down issue resolution, breed misunderstandings, and dilute accountability.

How AI bridges this gap:

- Unified Visibility: AI-powered platforms consolidate data quality metrics and insights into accessible dashboards that update in real time. These dashboards aren’t just technical summaries; they translate raw data signals into understandable quality scores and flags.

- Shared Language: By providing explanatory insights such as why a dataset was flagged or how a particular anomaly affects a business outcome, AI tools help non-technical stakeholders grasp data issues clearly.

- Collaborative Workflows: Some AI tools enable commenting, issue tracking, and automated notifications within the data platform. This streamlines communication, making it easier for data engineers to prioritize fixes, analysts to validate data, and business teams to provide context.

- Fostering Accountability: When everyone can see the state of data quality transparently and track the status of remediation efforts, it encourages ownership and timely responses. As a result, teams shift from assigning blame to collective problem-solving.

Result: A more cohesive governance model emerges, where technical and business teams work hand-in-hand, continuously monitoring and improving data quality with shared goals and trust.

Scaling with Data Growth

Enterprises today handle diverse data sources: cloud databases, IoT sensor streams, customer interactions, social media, and more. The volume, velocity, and variety of data create unprecedented challenges for traditional ways of managing data quality.

AI’s role in scaling governance:

- Automation at Scale: AI automates routine quality checks and corrections across massive datasets, performing tasks that would be impossible or cost-prohibitive to do manually.

- Adaptive Learning: AI models learn evolving data patterns, automatically adjusting quality rules as new data types and sources are integrated without requiring manual reprogramming.

- Real-Time Processing: For streaming data from IoT devices or live customer systems, AI enables near-instant quality monitoring and anomaly detection, helping prevent poor-quality data from contaminating downstream systems.

- Resource Efficiency: By handling large-scale data quality operations autonomously, AI allows human experts to focus on strategic issues rather than firefighting, effectively scaling governance without a linear increase in staffing.

Result: As organizations embrace cloud, edge computing, and diverse data ecosystems, AI-driven governance ensures data quality management keeps pace seamlessly, enabling scalable, sustainable control.

Aligning Quality with Business Outcomes

The ultimate goal of data governance is to ensure that high-quality data drives better business decisions, compliance, customer experiences, and operational efficiency. Traditional data quality metrics tend to focus narrowly on technical dimensions like completeness or consistency, which don’t always correlate directly with business impact.

How AI brings business context into governance:

- Outcome-Oriented Metrics: AI platforms can link data quality issues to key business performance indicators. For example, they might correlate poor customer data quality with increased churn rates or connect inaccurate inventory data to stockouts and lost sales.

- Prioritization Frameworks: By quantifying the financial or operational impact of specific data issues, AI helps governance teams prioritize remediation efforts that deliver the highest return on investment.

- Compliance and Risk Management: AI models can identify data quality risks that threaten regulatory compliance, such as missing consent information or inaccurate financial reporting data, allowing proactive mitigation.

- Continuous Feedback Loops: AI-driven governance integrates feedback from business outcomes back into data quality strategies, fostering ongoing alignment and refinement.

Result: Data quality governance evolves from a checkbox exercise into a strategic business enabler. Organizations no longer fix data problems reactively but proactively align data integrity with measurable business value, making governance more relevant and impactful.
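The prioritization idea above can be sketched as a simple ranking: estimate the impact of each issue, divide by the effort to fix it, and sort. The issue names, record counts, costs, and effort figures are all hypothetical, and a real platform would derive impact from observed business metrics rather than fixed inputs.

```python
def prioritize(issues):
    """Sort issues by estimated impact per hour of remediation effort, highest first."""
    def score(issue):
        impact = issue["affected_records"] * issue["cost_per_record"]
        return impact / issue["effort_hours"]
    return sorted(issues, key=score, reverse=True)

# Hypothetical issues with made-up impact and effort estimates.
issues = [
    {"name": "missing_consent_flags", "affected_records": 2000,
     "cost_per_record": 5.0, "effort_hours": 40},
    {"name": "duplicate_customers", "affected_records": 10000,
     "cost_per_record": 0.5, "effort_hours": 10},
    {"name": "stale_inventory_counts", "affected_records": 500,
     "cost_per_record": 8.0, "effort_hours": 20},
]
for issue in prioritize(issues):
    print(issue["name"])
# -> duplicate_customers, missing_consent_flags, stale_inventory_counts
```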

Real-World Examples of AI-Powered Data Quality Management

AI-driven data quality management is rapidly transforming how diverse industries handle, validate, and optimize their data, leading to improved operational efficiencies and business outcomes. Below are concrete examples of how AI is applied to create AI-ready data in key sectors:

Retail: Personalization and Inventory Accuracy

In retail, maintaining clean, accurate customer and product data is crucial for targeted marketing and supply chain efficiency. AI systems automate the removal of duplicate customer records, unify fragmented purchase histories, and standardize data formats from multiple channels, both online and offline. This results in more personalized marketing efforts and prevents the costly mistake of sending redundant offers to the same customer. Additionally, AI analyzes point-of-sale data alongside external trends (such as holidays or weather) to continuously update inventory databases, ensuring retailers stock the right products in the right quantities to meet fluctuating demand.

Manufacturing: Enhancing Production Data Integrity

Manufacturers rely heavily on sensor data and operational logs to optimize production lines. AI models continuously monitor this data to detect anomalies such as sensor malfunctions, inconsistent measurements, or gaps in data streams that can compromise quality control and maintenance schedules. By flagging inaccurate or missing data, AI helps ensure that production reports reflect true operational conditions, enabling proactive maintenance and reducing downtime. Furthermore, AI helps reconcile supplier data and materials inventories to ensure components are accurately tracked, avoiding costly errors or delays.

Logistics & Transportation: Streamlining Fleet and Shipment Data

In logistics, data quality directly affects delivery performance and customer satisfaction. AI-powered tools cleanse and validate fleet data such as vehicle maintenance records, driver logs, and GPS tracking information to detect errors or outdated entries. For shipment tracking, AI algorithms harmonize data coming from disparate carriers and tracking systems, correcting inconsistencies in delivery status or location information. This results in more reliable tracking updates and fewer customer complaints.

Banking: Ensuring Compliance and Fraud Prevention

Banks handle massive volumes of sensitive data that must be accurate and secure. With AI-driven data quality solutions, banks can continuously audit this vast amount of transactional data to identify inconsistencies, missing information, or duplicated records. Simultaneously, sophisticated anomaly detection models scan transactions in real time to detect suspicious activities that may indicate fraud or money laundering. By maintaining high-quality data and enabling early detection of risks, AI helps banks comply with regulatory mandates and protect customer assets more effectively.

Energy & Utility: Improving Asset and Usage Data Reliability

In the energy and utilities sector, reliable data about infrastructure, consumption patterns, and grid performance is essential for operational efficiency and regulatory reporting. AI tools validate and reconcile data from smart meters, sensor networks, and maintenance logs, identifying anomalies like incorrect meter readings or delayed data uploads. This ensures billing accuracy and helps detect energy theft or equipment malfunctions early. Furthermore, AI integrates data from diverse sources such as weather forecasts and consumption trends to improve demand forecasting and grid management, enabling better resource allocation and sustainability initiatives.

Across industries, AI-powered data quality management is enhancing the accuracy, consistency, and completeness of data, turning raw information into a reliable asset. By automating error detection, standardization, and data enrichment, AI enables organizations to make informed decisions, meet compliance requirements, and deliver superior customer experiences in a data-driven world.

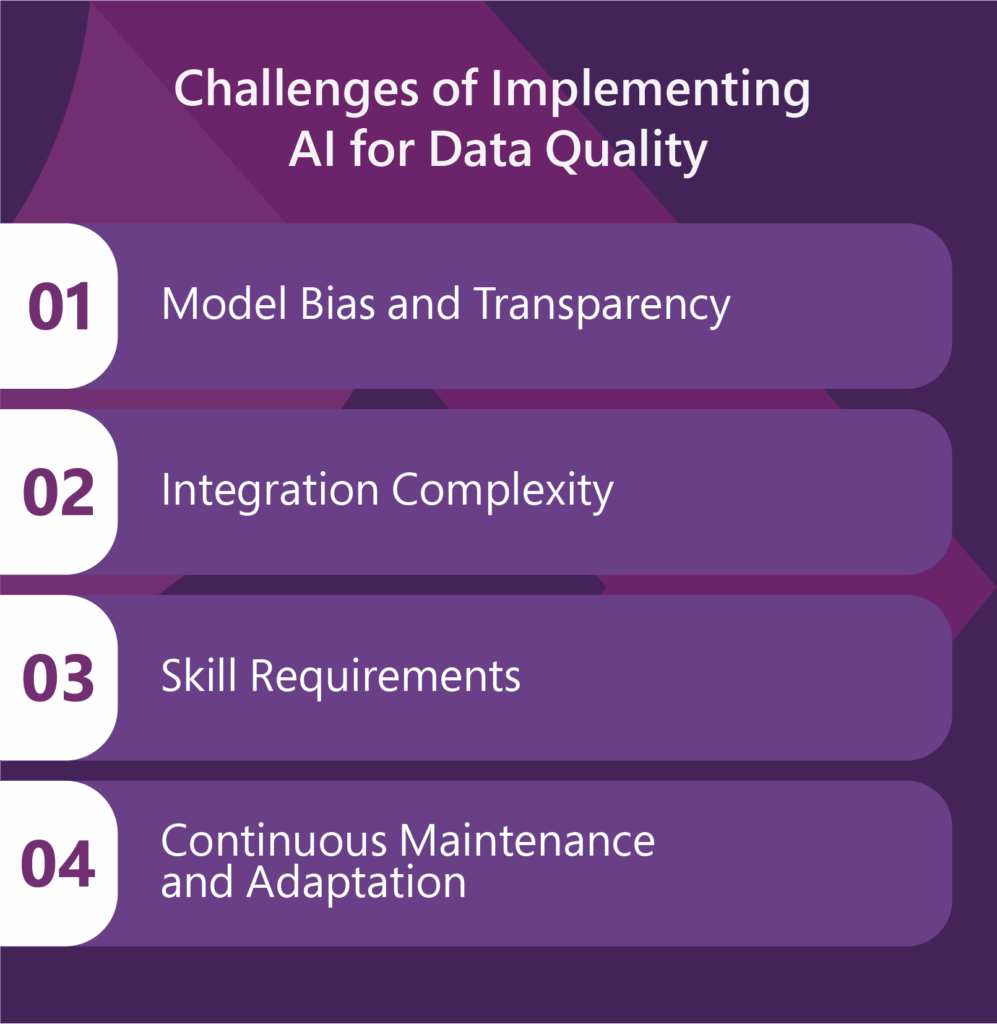

Challenges and Considerations in Implementing AI for Data Quality

While AI offers transformative potential in managing and enhancing data quality, organizations face several critical challenges when integrating AI-driven solutions. Addressing these challenges thoughtfully is essential to harness AI’s full benefits without unintended consequences.

1. Model Bias and Transparency

AI systems depend heavily on the data they are trained on. If the training datasets are incomplete, unbalanced, or skewed toward certain populations or scenarios, the models may develop biases that can compromise data quality decisions. For instance, an AI system trained primarily on data from one region may misclassify or mishandle data from another region, leading to inaccuracies. Hence, it becomes necessary for enterprises to ensure that their training data is diverse, representative, and carefully curated.

Many AI models, such as deep learning models, operate as “black boxes,” producing results without clear reasoning. For data quality management, where decisions can affect compliance, reporting, or operational workflows, stakeholders need to understand why AI flagged certain data points or made specific corrections. Explainable AI techniques help build confidence among users and decision-makers by providing interpretable insights into the AI’s logic and behavior.

2. Integration Complexity

Most organizations have complex, heterogeneous data infrastructures, often consisting of legacy systems, multiple databases, and various data formats. Integrating AI for data quality into an existing data ecosystem requires careful architectural planning to ensure these tools can communicate and operate seamlessly with current workflows.

Without proper integration, AI models may face challenges in accessing real-time data or delivering outputs in usable formats, which can hinder their effectiveness. Additionally, poorly managed integration efforts risk disrupting ongoing business processes, causing downtime, or creating data silos. To mitigate these risks, organizations should adopt modular AI solutions designed for interoperability and plan gradual, well-tested deployments.

3. Skill Requirements

Successful AI adoption in data quality management demands a unique blend of skills. While data scientists and machine learning engineers bring the technical expertise to build, train, and deploy AI models, deep domain knowledge is equally vital. Understanding the specific nuances, rules, and critical quality indicators of the business domain allows AI to be tailored effectively.

For example, an AI system designed for financial data quality must incorporate domain-specific validation rules and regulatory considerations that a generalist data scientist might overlook. Bridging the gap between technical and business teams is a common challenge; organizations often need to invest in cross-functional training and foster collaboration to ensure the AI solutions truly meet operational needs.

4. Continuous Maintenance and Adaptation

AI models are not “set-and-forget” solutions. Data environments are dynamic: new data sources emerge, business processes evolve, and external conditions change. Such changes can degrade model performance over time. To maintain accuracy and relevance, organizations need to monitor, retrain, and fine-tune their AI models regularly.

This continuous maintenance ensures that the models remain aligned with current data characteristics and quality standards. It also includes updating training datasets with new examples and periodically reassessing the model’s assumptions. Organizations need to establish governance frameworks and allocate resources for sustained model lifecycle management to prevent performance deterioration and maintain trust in AI-powered data quality initiatives.

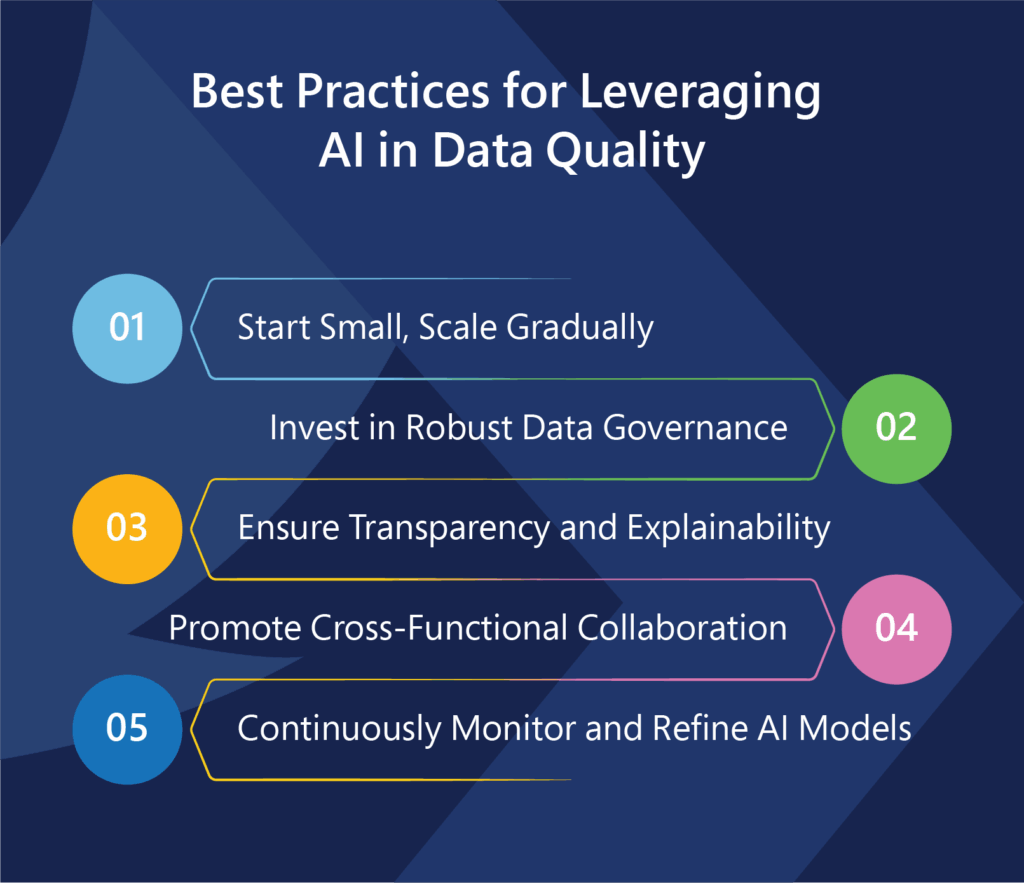

Best Practices for Harnessing AI in Data Quality Management

Successfully enhancing data quality for AI demands a strategic and disciplined approach. Organizations can unlock AI’s full potential while minimizing risks by adopting a set of proven best practices tailored to their unique environments and objectives.

1. Start Small, Scale Gradually

Rather than rushing into enterprise-wide AI deployments, it is wise to begin with targeted pilot projects focused on the most critical or high-impact datasets. This phased approach allows organizations to validate AI models’ effectiveness on manageable data volumes and identify potential pitfalls early.

Pilot tests provide valuable lessons about data readiness, model behavior, and integration challenges. Once these initial tests demonstrate clear improvements in data quality and operational efficiency, organizations can confidently expand AI initiatives incrementally, scaling up with refined processes and greater stakeholder buy-in.

2. Invest in Robust Data Governance

AI-powered data quality efforts thrive in environments with strong data governance frameworks. Establishing clear ownership of data assets, along with well-defined policies and quality standards, creates a solid foundation for AI tools to operate effectively.

Governance ensures consistency in data definitions, formats, and access controls, which enhances the AI’s ability to detect anomalies and automate corrections accurately. Moreover, governance structures promote accountability, enabling swift resolution when AI flags data issues and facilitating collaboration between technical teams and business units.

3. Ensure Transparency and Explainability

Given that AI decisions can directly influence business outcomes and regulatory compliance, transparency is essential. Organizations should prioritize AI systems that offer interpretable outputs, where the rationale behind data quality assessments or corrections is clear and accessible.

Explainable AI techniques, such as decision trees, rule-based models, or post-hoc interpretability methods, help demystify AI reasoning. Additionally, maintaining comprehensive audit trails of AI-driven changes fosters trust among stakeholders and provides traceability for compliance reviews or internal investigations.

4. Promote Cross-Functional Collaboration

Effective AI deployment in data quality management requires input from diverse perspectives. Engaging business users, data stewards, and subject matter experts early in the process ensures that the AI models align with real-world quality criteria and business priorities.

Further, collaborative efforts help define relevant validation rules, establish acceptable error thresholds, and interpret AI findings correctly. This involvement not only improves model accuracy but also facilitates user acceptance and empowers teams to act swiftly on AI-generated insights.

5. Continuously Monitor and Refine AI Models

AI models are dynamic assets that demand ongoing attention. Organizations must:

- Implement processes for continuous monitoring to detect when models begin to drift or underperform due to evolving data patterns.

- Run routine evaluations against fresh datasets and key performance indicators to identify issues in a timely manner.

- Retrain or fine-tune AI models with updated data to maintain their precision and relevance.
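One common way to detect the drift mentioned in the first step is the population stability index (PSI), which compares how a feature is distributed in fresh data versus a baseline. The sketch below is a simplified version with equal-width bins; the function name, bin count, and sample data are assumptions, and the 0.25 cutoff in the usage note is a widely quoted rule of thumb rather than a fixed standard.

```python
import math

def population_stability_index(expected, actual, bins=5):
    """Compare two samples of a numeric feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor avoids taking the logarithm of zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: a baseline and a shifted fresh sample.
baseline = list(range(100))
fresh = [v + 50 for v in baseline]
psi = population_stability_index(baseline, fresh)
if psi > 0.25:
    print("Significant drift detected; consider retraining.")
```

A check like this can run on a schedule against each model input, with the retraining step triggered only when the index crosses the agreed threshold.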

Conclusion: The Road Ahead for AI and Data Quality

As AI systems become an integral part of business operations, the demand for high-quality data has become imperative. Fortunately, AI itself offers the means to meet this demand, transforming data quality management from a manual, reactive chore into an intelligent, proactive discipline.

By harnessing AI’s ability to learn, adapt, and automate, organizations can unlock the true value of their data, building AI-driven systems that are not only powerful but also trustworthy and resilient. The journey is complex, requiring thoughtful implementation and ongoing governance, but the payoff is undeniable: superior data quality fueling superior AI performance and, ultimately, better outcomes for businesses and society alike.

At Quinnox, we help organizations navigate this journey with precision and purpose. Our AI and data services are designed to elevate data quality at every stage, ensuring that your data is not just AI-ready but future-ready, paving the way for intelligent operations, actionable insights, and measurable impact.

Let us help you transform your data into a true competitive asset, one that powers resilient, scalable, and responsible AI. Reach out to our experienced AI team today!

FAQs Related to AI for Data Quality

What role does AI play in data quality management?

AI plays a transformative role in data quality management by automating the detection, correction, and prevention of data issues at scale. Traditional data management relies heavily on manual checks and static rules, which are not only time-consuming but also prone to oversight.

AI introduces intelligent algorithms that learn from patterns within the data, enabling them to flag anomalies, fill in missing information, deduplicate records, and adapt to evolving data landscapes. Rather than being a one-time fix, AI supports continuous monitoring and improvement of data quality, making it a dynamic and proactive process rather than a reactive one.

How does AI improve data accuracy and consistency?

AI improves data accuracy by automatically identifying incorrect or suspicious entries, such as out-of-range values, typos, or mismatches across datasets. It goes beyond static validation rules by using machine learning models that understand context and patterns, such as recognizing when a transaction amount seems unusually high for a particular customer profile. For consistency, AI can cross-reference and reconcile data from multiple sources, ensuring that records align across systems. It can also standardize formats (e.g., dates, addresses, naming conventions) in real time, reducing inconsistencies that often arise in large, decentralized data environments.

Can AI help identify the root causes of recurring data quality problems?

Yes, AI can play a key role in uncovering the root causes behind recurring data quality problems. By analyzing historical trends, metadata, and the flow of data across systems, AI can pinpoint where breakdowns are happening, whether it’s a faulty data entry process, a misconfigured integration, or a recurring error from a specific source.

What are the benefits of AI-driven data quality management?

AI-driven data quality management brings a range of strategic and operational benefits. First, it dramatically reduces the time and effort needed to clean and prepare data, which speeds up decision-making and lowers operational costs. Second, it improves the reliability of data used in analytics, reporting, and automation, leading to more accurate insights and better business outcomes. Third, AI makes it easier to scale data quality efforts across growing data volumes and complex systems. Ultimately, organizations that invest in AI for data quality gain a competitive edge by turning clean, trusted data into a driver of innovation, agility, and customer trust.