The corporate world has witnessed a sobering reminder of what can go wrong when artificial intelligence (AI) is used without adequate oversight. A recent incident, in which a leading consulting firm faced financial penalties for using AI-generated content riddled with factual inaccuracies, fabricated citations, and even a misattributed legal quote, serves as a wake-up call that exposes the fragile balance between innovation and integrity.

As tools like ChatGPT and other generative AI platforms become woven into the fabric of modern business operations, their appeal is easy to understand. They promise efficiency, speed, and scale, capabilities that were once unimaginable. Yet with this growing dependence comes an equally pressing responsibility: ensuring that these tools are used ethically, transparently, and in compliance with established standards.

Enterprises today stand at a crossroads where the unchecked use of AI could lead to reputational and regulatory fallout, while thoughtful governance could turn AI into a true strategic advantage. The challenge lies not in limiting AI’s potential but in embedding the right controls, accountability, and ethical considerations at every stage of its lifecycle.

This blog explores the critical need for AI governance in the wake of this recent AI fallout, outlining the key components of effective governance, the risks of neglecting it, and actionable steps enterprises can take to ensure responsible use of AI.

The Recent AI Fallout: A Wake-Up Call for the Industry

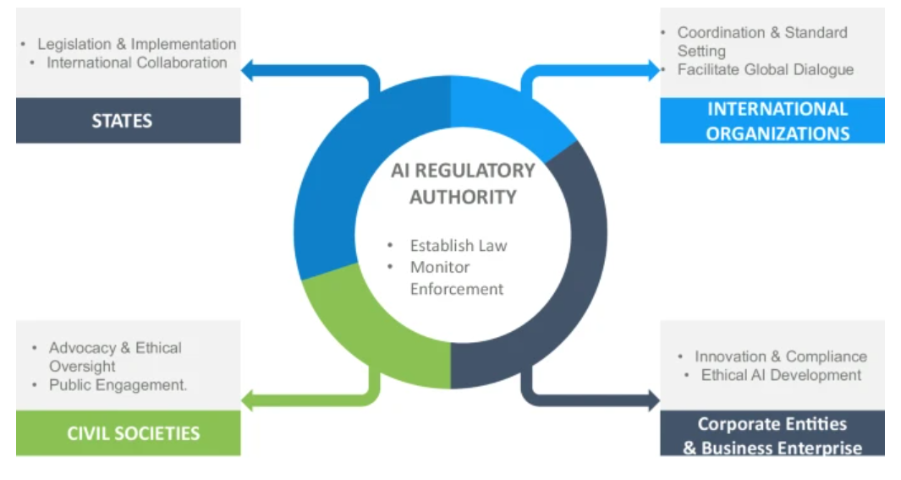

The fallout underscores a broader issue: enterprises must approach AI with a governance-first mindset, ensuring that every step of the AI lifecycle, from design to deployment, is closely monitored and controlled. The incident highlighted several core governance issues:

- Data Privacy Concerns: AI systems often require vast amounts of data to function effectively. Without proper safeguards, this data can be misused, leading to breaches of privacy or the inadvertent violation of regulations.

- Bias in AI Models: The AI system in question was found to exhibit biases that impacted decision-making processes. This issue underscored the challenges of ensuring that AI models are trained with diverse and representative data to avoid discriminatory outcomes.

- Lack of Transparency and Accountability: AI systems lacked sufficient transparency, with stakeholders unable to fully understand how decisions were being made. This led to calls for greater accountability and the establishment of clear guidelines for AI deployment.

The Case for AI Governance: Managing Risk and Unlocking Value

While AI holds tremendous potential to enhance business processes, boost efficiency, and drive innovation, the risks of unchecked use are equally significant. The recent incident clearly demonstrates that improper implementation can invite regulatory scrutiny, erode customer trust, and cause lasting reputational harm.

The role of AI governance is twofold:

- Mitigating Risks: Enterprises must manage the legal, ethical, and operational risks associated with AI. These include ensuring compliance with regulations, safeguarding against biases, protecting data privacy, and preventing misuse of AI technology.

- Optimizing AI Performance: Governance frameworks help businesses maximize the benefits of AI by ensuring the technology is applied in ways that align with business goals, ethical standards, and stakeholder expectations.

To achieve both of these objectives, organizations must establish clear guidelines and processes around AI usage. Governance must go beyond compliance checks, focusing on establishing a culture of responsible AI development and use.

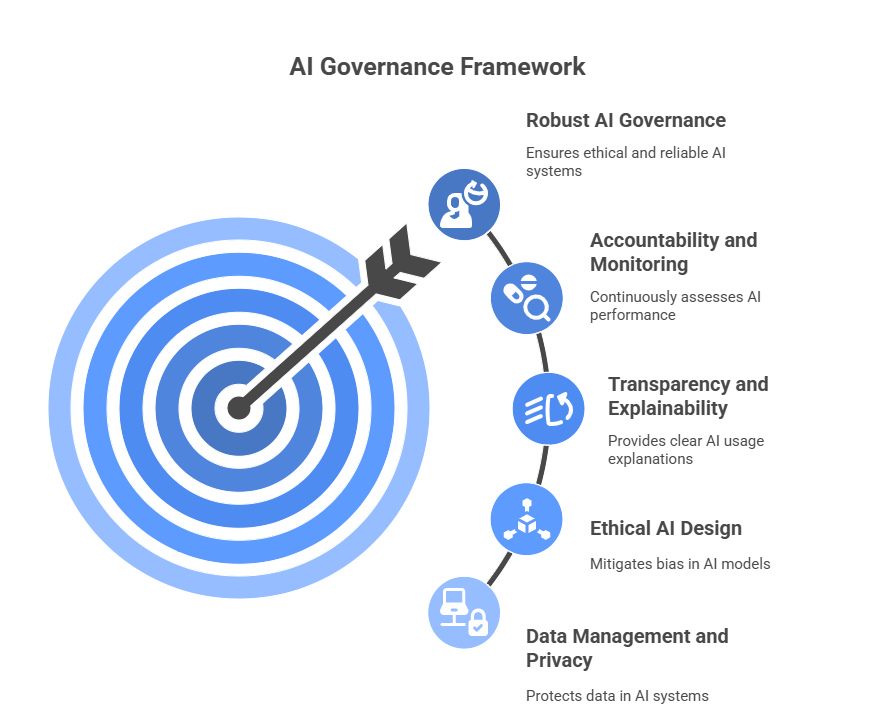

Key Components of a Robust AI Governance Framework

A robust AI governance framework typically rests on four key components:

1. Data Management and Privacy Protection

At the heart of AI governance lies effective data management. AI systems rely on vast datasets, and these datasets often include sensitive information. Enterprises must ensure that data used to train AI models is accurate, comprehensive, and representative, and that it is collected and processed in compliance with privacy laws.

Key principles for managing data include:

- Data Minimization: Only collect data that is necessary for the purpose of AI deployment. Reducing unnecessary data collection mitigates privacy risks.

- Data Anonymization: Where possible, anonymize sensitive data to ensure individuals’ privacy is protected even if the data is compromised.

- Data Auditing: Establish regular auditing mechanisms to monitor how data is collected, stored, and used in AI systems. This helps maintain transparency and accountability.
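The minimization and anonymization principles above can be illustrated with a minimal Python sketch. The field names, the allow-list, and the helper functions are hypothetical, chosen only for illustration. Note that keyed hashing is strictly pseudonymization rather than full anonymization, since anyone holding the key can re-link records, so the key itself needs protecting.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the model actually needs.
ALLOWED_FIELDS = {"customer_id", "age_band", "region", "tenure_months"}
# Hypothetical set of direct identifiers to replace with keyed hashes.
IDENTIFIER_FIELDS = {"customer_id"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Drop fields outside the allow-list and pseudonymize identifiers."""
    cleaned = {}
    for field, value in record.items():
        if field not in ALLOWED_FIELDS:
            continue  # data minimization: discard what is not needed
        if field in IDENTIFIER_FIELDS:
            value = pseudonymize(str(value), secret_key)
        cleaned[field] = value
    return cleaned
```

Applied to a raw record containing an email address, for example, `minimize_record` would drop the email entirely and return the customer identifier only as a 64-character digest.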

2. Ethical AI Design and Bias Mitigation

Bias in AI models is one of the most pressing challenges facing businesses today. AI systems learn from historical data, and if that data contains biased patterns, the AI will replicate and amplify those biases. To ensure fairness, organizations must:

- Diversify Training Data: Ensure that AI models are trained on diverse, representative datasets. This helps mitigate the risk of biases that could affect marginalized or underrepresented groups.

- Bias Testing and Audits: Regularly audit AI models for signs of bias, ensuring that decision-making processes do not disproportionately impact any group based on gender, race, or other characteristics.

- Ethical Oversight: Implement ethical review boards or committees that provide oversight during AI development to assess the fairness and inclusivity of AI solutions.
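One simple form a bias audit could take is comparing the rate of favorable decisions across groups. The sketch below is an illustration only, not a complete fairness assessment: the function names and the `(group, decision)` input shape are assumptions. A commonly cited rule of thumb treats a ratio below 0.8 as worth investigating, but the right threshold depends on context and applicable regulation.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return the share of favorable decisions per group.

    `outcomes` is an iterable of (group, decision) pairs,
    where decision is 1 (favorable) or 0 (unfavorable).
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        favorable[group] += decision
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest
```

Running such a check on every retrained model, and recording the result, gives the ethics committee something concrete to review.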

3. Transparency and Explainability

Transparency and explainability are fundamental to building trust in AI systems. If stakeholders, including employees, customers, and regulators, cannot understand how an AI system makes decisions, the system will be seen as a “black box,” and that opacity breeds distrust.

To ensure transparency, enterprises should:

- Develop Explainable AI Models: Use AI models that offer clear, interpretable outputs. This might include decision trees or simpler models that can be easily explained to non-technical stakeholders.

- Document AI Decision-Making: Maintain detailed records of how decisions are made within AI systems. This includes documenting the data sources, algorithms, and rationale behind AI-generated outcomes.

- Communicate Clearly: Provide users with clear, accessible explanations of how AI is being used and how decisions are made, particularly in industries like finance, healthcare, and public services where AI decisions can have significant consequences.
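Documenting AI decision-making could be as simple as writing one structured record per decision. The following is a minimal sketch under stated assumptions: the `DecisionRecord` fields and helper names are hypothetical, and a real deployment would also need secure, append-only storage and a retention policy.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable entry describing a single AI-generated outcome."""
    model_name: str
    model_version: str
    data_sources: list
    input_digest: str
    output: str
    rationale: str
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def digest_inputs(inputs: dict) -> str:
    """Hash the inputs so the record can be matched without storing raw data."""
    canonical = json.dumps(inputs, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def to_log_line(record: DecisionRecord) -> str:
    """Serialize the record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Storing a digest rather than the raw inputs keeps the audit trail useful for reconciliation while limiting the sensitive data held in logs.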

4. Accountability and Continuous Monitoring

AI governance does not end with the deployment of a system. Enterprises must continuously monitor AI performance to ensure that it operates as expected, adheres to ethical guidelines, and complies with regulations.

Key actions include:

- Establishing Accountability Structures: Assign accountability for AI governance within the organization, whether through a dedicated AI governance officer, compliance officer, or an AI ethics committee.

- Continuous Monitoring and Feedback Loops: Set up mechanisms to track AI behavior post-deployment, gathering feedback from stakeholders and adjusting the AI model as necessary to correct issues.

- Incident Management Protocols: Establish protocols for quickly addressing and mitigating any problems that arise with AI systems, including unintended consequences or operational failures.
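As an illustration of a post-deployment feedback loop, the sketch below tracks accuracy over a rolling window of recent predictions and flags the model for review when it falls below a threshold. The class name, window size, and threshold are assumptions chosen for the example. Production monitoring would typically also track data drift, fairness metrics, and latency.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent predictions and flag degradation."""

    def __init__(self, window_size: int = 100, min_accuracy: float = 0.9):
        self.window = deque(maxlen=window_size)  # oldest results drop off automatically
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual) -> None:
        """Store whether a prediction matched the observed outcome."""
        self.window.append(prediction == actual)

    def accuracy(self) -> float:
        """Accuracy over the current window (1.0 if nothing is recorded yet)."""
        if not self.window:
            return 1.0
        return sum(self.window) / len(self.window)

    def needs_review(self) -> bool:
        """True once the window is full and accuracy is below the threshold."""
        window_full = len(self.window) == self.window.maxlen
        return window_full and self.accuracy() < self.min_accuracy
```

A positive `needs_review()` result would be the trigger for the incident management protocol, routing the issue to whoever holds accountability for the model.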

Practical Steps for Enterprises to Strengthen AI Governance

- Develop a Comprehensive AI Governance Policy: A well-documented AI governance policy should outline the principles, processes, and responsibilities surrounding AI use within the organization. This policy should be reviewed and updated regularly.

- Engage in Ethical AI Training: Conduct regular training for AI developers, business leaders, and stakeholders on ethical AI practices, data privacy regulations, and the importance of bias mitigation.

- Implement a Risk Management Framework: Enterprises should adopt a comprehensive risk management framework for AI, assessing potential risks at every stage of the AI lifecycle, from data collection to model deployment.

- Foster Collaboration Between AI and Compliance Teams: AI teams should work closely with compliance, legal, and ethics teams to ensure alignment on regulatory requirements and ethical standards.

- Ensure External Audits and Third-Party Reviews: Independent audits can provide an external perspective on AI systems, identifying potential issues with data handling, algorithmic fairness, and compliance.

Conclusion: Moving Forward with Responsible AI

The recent AI fallout serves as a potent reminder that AI is not without its risks, and enterprises must be proactive in managing those risks through strong governance frameworks. By embedding governance at every stage of the AI lifecycle, businesses can not only mitigate potential pitfalls but also unlock the true value of AI in an ethical and responsible manner.

By prioritizing data management, ethical design, transparency, accountability, and continuous monitoring, enterprises can navigate the complexities of AI deployment and ensure that they are using this powerful technology for the benefit of all stakeholders.

Still unsure or worried about your AI initiatives? You can explore Quinnox AI (QAI) Studio, a one-stop AI innovation hub that supports responsible AI development and deployment. QAI Studio enables enterprises to design, test, and govern AI models with built-in compliance, auditability, and ethical safeguards. Its robust governance tools, transparency features, and lifecycle management capabilities help organizations operationalize AI responsibly, ensuring innovation aligns with regulatory expectations and corporate values.

Krishna Kumar is an AI innovation leader with over 24 years of deep expertise in applying artificial intelligence to solve real-world business challenges. His insights bridge the gap between cutting-edge innovation and practical enterprise value, offering readers a forward-looking perspective on the evolving AI landscape. Through his blogs, he brings a wealth of strategic knowledge shaped by decades of experience. Beyond technology, Krishna finds creative expression in photography.
