Accelerate IT operations with AI-driven Automation

Automation in IT operations enable agility, resilience, and operational excellence, paving the way for organizations to adapt swiftly to changing environments, deliver superior services, and achieve sustainable success in today's dynamic digital landscape.

Driving Innovation with Next-gen Application Management

Next-generation application management fueled by AIOps is revolutionizing how organizations monitor performance, modernize applications, and manage the entire application lifecycle.

AI-powered Analytics: Transforming Data into Actionable Insights

AIOps and analytics foster a culture of continuous improvement by providing organizations with actionable intelligence to optimize workflows, enhance service quality, and align IT operations with business goals.

It’s 2025, and AI is everywhere. From executive suites to customer service centers, manufacturing plants to financial trading floors, artificial intelligence has stopped being just an experiment; it’s become the driving force behind business innovation. It detects fraud in real time, automates complex decision-making, tailors customer experiences down to the individual, anticipates supply chain disruptions before they happen, and even guides strategic leadership at the highest levels.

In fact, 84% of global organizations are either using or planning to adopt AI within the next 12 months, but amid the rapid deployment, here’s what’s quietly being overlooked: the data feeding these models.

Behind every sophisticated AI model lies an ocean of data – and if that data is biased, outdated, or poorly handled, no amount of model brilliance will save you. We’re already seeing the consequences – misleading outputs, customer backlash, and regulatory red flags.

This is where Data Governance for AI steps in – not as an afterthought or compliance tick-box, but as a mission-critical enabler of trustworthy, scalable, and future-ready AI. If your data isn’t governed, it isn’t AI-ready. It’s that simple. And while some organizations are still figuring this out, others are already putting strong governance in place; quietly building smarter, safer systems that won’t fall apart at scale.

So, before you plug in that next LLM or launch your AI assistant, pause and ask: Are we governing the data behind our decisions? If not, this is the moment to start. So, Let’s dive in.

What is Data Governance for AI?

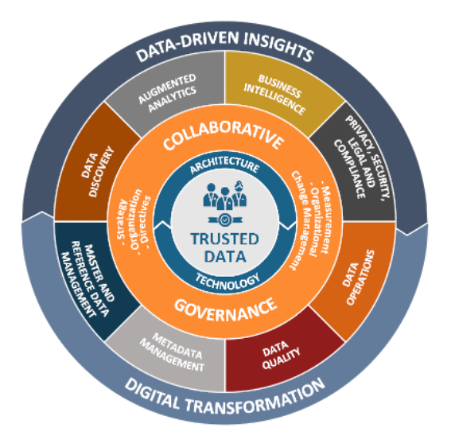

Source: https://www.firstsanfranciscopartners.com/

Data governance for AI refers to the application of governance principles to the unique demands of AI development and deployment. It includes policies, controls, technologies, and workflows that ensure AI systems are built on high-quality, secure, traceable, and ethically sourced data.

Unlike traditional data governance, which mostly addresses structured data for business intelligence or reporting, AI data governance must handle a broader variety of data types – unstructured text, real-time streams, synthetic data, and third-party datasets. It must also account for how data is collected, labeled, processed, stored, and reused throughout the AI lifecycle.

At its core, it is about building transparency and accountability into both the data pipeline and the AI models themselves. Without this foundation, AI outcomes cannot be trusted.

Traditional Data Governance vs AI-Driven Governance

| Feature | Traditional Data Governance | AI-Driven Data Governance |

|---|---|---|

| Focus | Structured data, reporting, compliance | Unstructured & real-time data, model training, explainability |

| Goal | Accuracy, compliance, data reuse | Model fairness, trust, regulatory readiness |

| Scope | Data quality, cataloging, access control | Data lineage, annotation standards, AI ethics, risk monitoring |

| Stakeholders | IT, compliance, data stewards | Data scientists, ML engineers, legal, ethics teams |

AI’s Rapid Growth and the Need for Governance

The pace of AI adoption in enterprises is nothing short of explosive. According to McKinsey’s State of AI report, 79% of companies have now integrated AI into at least one function. Meanwhile, generative AI has seen a breakout year: usage jumped 12 percentage points year-over-year, with over 55% of organizations actively experimenting or scaling GenAI solutions across departments.

Large language models (LLMs) are leading this charge. What began as pilot projects in 2023 have now evolved into production-level deployments powering customer service, code generation, marketing content, and decision intelligence. IDC projects global spending on AI systems to reach $500 billion by 2027, reflecting AI’s growing role in business-critical operations.

But while adoption surges, governance lags. Nearly 1 in 2 companies admit they lack a clear AI strategy or implementation roadmap, and only 1% say their generative AI initiatives are fully mature (BCG x MIT Sloan 2023 Report).

The root issue? Data. Despite AI’s hunger for data, many organizations struggle to source, clean, and label high-quality datasets. In fact, data bottlenecks have increased by 10% year-over-year, while data accuracy has declined by 9% since 2021 (Global Newswire). Without reliable data foundations, even the most advanced models falter.

The cost of poor data governance is staggering. Gartner estimates that bad data costs organizations an average of $12.9 million annually in wasted resources, failed projects, and reputational damage. It also reduces workforce productivity by up to 20% and inflates operational costs by as much as 30% (Harvard Business Review).

In short, AI’s growth story is being held back by a silent bottleneck – data governance. Without it, organizations risk building powerful systems on unstable ground. With it, they unlock scalable, ethical, and value-driven AI daily.

For a deeper look into how regulations are evolving globally, check out our blog on navigating the evolving landscape of AI regulations.

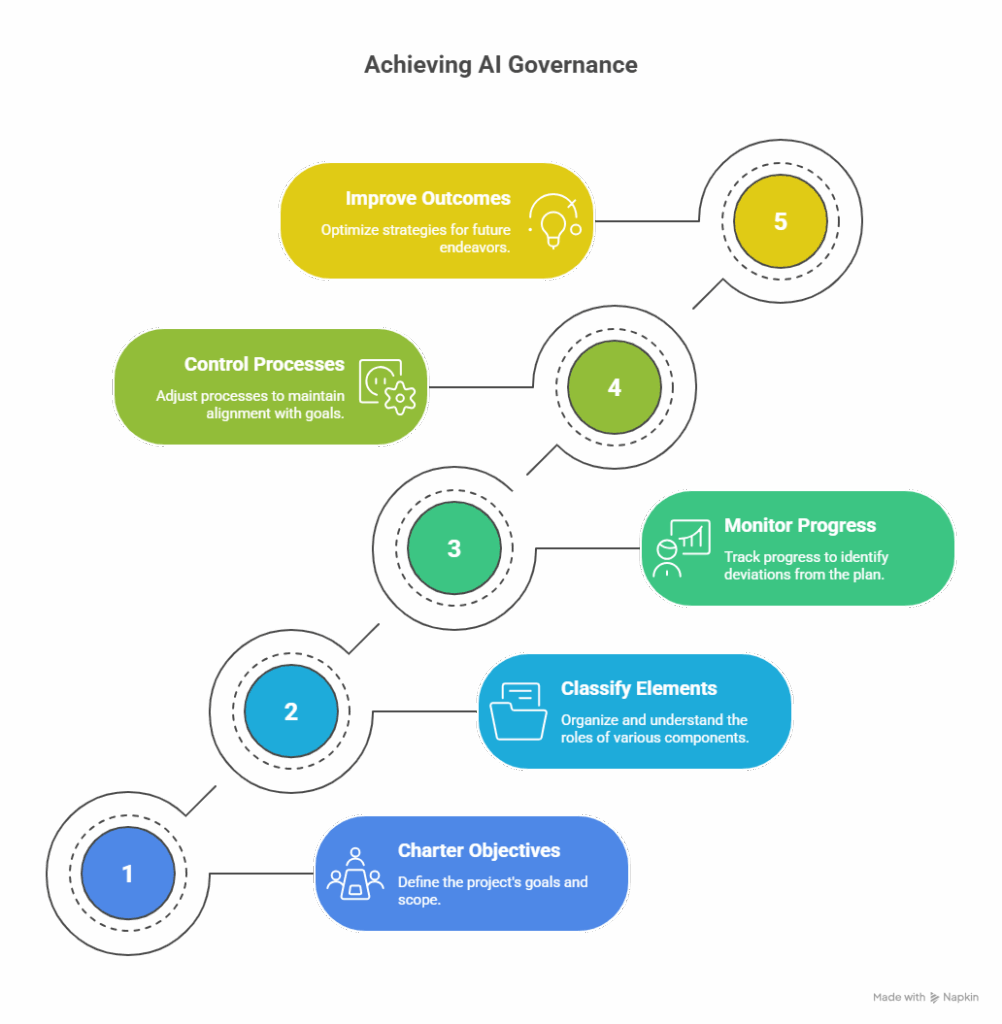

A Compact 5-Step Framework for Data Governance in AI

As AI models become increasingly central to business and decision-making, the data feeding them needs to be governed with more than just traditional policies.

Here’s a streamlined, future-ready framework that enterprises can adopt to bring clarity, compliance, and control to their AI data lifecycle.

1. Charter: Establish Governance with AI in Mind

Begin with a clear governance charter that defines responsibilities across teams – from data science to legal and compliance. This charter should address AI-specific risks like model hallucinations, bias, and input manipulation (e.g., prompt injection in GenAI). Everyone touching AI data must be accountable for its integrity and ethical use.

2. Classify: Know Your Data Before You Use It

Data classification is foundational. Use metadata tagging and automated tools to identify PII, sensitive financial data, or unregulated third-party inputs. For GenAI, this also means vetting training sources to avoid copyright issues or harmful content. Only 23% of organizations have full visibility into their AI training data, according to McKinsey.

3. Control: Apply Guardrails to Who Uses What and How

Implement AI-specific access controls, including role-based permissions and prompt filters. Prevent misuse through input sanitization, data minimization, and secure handling of training pipelines and logs. According to Gartner, 70% of AI data leaks stem from weak access governance –a reminder that control must extend beyond storage.

4. Monitor: Make AI Data Transparent and Traceable

Track how data flows, how models perform, and where bias or drift creeps in. Use audit trails and explainability tools to ensure accountability. With the EU AI Act and similar regulations on the rise, real-time monitoring and event logging are fast becoming non-negotiable compliance requirements.

5. Improve: Adapt as Risks and Regulations Evolve

AI doesn’t stand still – neither should your governance. Use audits, incident reports, and regulatory updates to continually refine policies and tooling. Deloitte study found that enterprises with iterative AI governance models are 2.3x more likely to meet regulatory compliance efficiently.

Top Challenges in Data Governance for AI

1. Bias and Fairness in Training Data

AI models learn patterns from the data they’re trained on. If that data contains historical biases – based on race, gender, geography, or socioeconomic status – the AI will not only replicate but often amplify them.

A IBM study found that 68% of business leaders are concerned about bias in AI outputs, yet only 35% have mechanisms in place to actively detect or mitigate it.

How to Fix It:

- Use diverse and representative training datasets

- Apply pre-processing de-biasing techniques (like reweighting or resampling)

- Conduct regular fairness audits using tools

- Establish an AI Ethics Review Board to assess use cases from multiple perspectives

2. Lack of Data Lineage and Traceability

In AI systems, data flows through many hands – sourced from multiple locations, transformed in pipelines, and used in training, testing, and deployment. If you can’t trace how data evolved, you can’t explain or trust the outcome. Only 30% of organizations have full visibility into their AI data pipelines and lack of lineage is one of the top reasons AI audits fail.

For Instance, a financial institution couldn’t explain why its AI model denied a loan. Turns out, an outdated third-party dataset had been silently introduced weeks earlier, skewing credit scoring.

How to Fix It:

- Implement automated data lineage tools

- Maintain versioned datasets and keep change logs of transformation scripts

- Adopt model cards and data datasheets to capture metadata and source details

- Ensure traceability is auditable and reportable – especially under laws like GDPR and the EU AI Act

3. Siloed Data Across Systems

AI thrives on integrated data, but most enterprises are still working with fragmented systems. CRM data in one silo, IoT data in another, and unstructured support tickets somewhere else. This lack of a unified data layer leads to inconsistencies, governance gaps, and poor model performance.

For Instance, a retail company building a customer behavior model missed 40% of relevant interactions because chat and email data were stored in isolated systems, outside of the main customer data platform.

How to Fix It:

- Invest in a centralized data lakehouse or fabric architecture

- Use ETL/ELT pipelines to consolidate structured and unstructured data

- Apply enterprise-wide governance policies to every integrated source

- Introduce data stewards for cross-functional coordination

4. Governance of Generative AI Models

LLMs and other generative models require massive, often opaque datasets for training. These models can inadvertently produce toxic, plagiarized, or even harmful content if not properly governed. If a company’s GenAI-powered support bot began suggesting incorrect medical advice due to unfiltered training data scraped from the web.

How to Fix It:

- Curate training data sources – exclude forums, unverified content, or datasets with harmful speech

- Use content moderation filters and toxicity classifiers

- Monitor prompts and outputs using prompt injection detection tools

- Implement output logging and usage throttling to prevent misuse

Related Article: 5 best practices to ensure AI compliance

5. Rapidly Evolving Compliance and AI Regulations

AI governance is a legal moving target. From GDPR to the EU AI Act, India’s Digital Personal Data Protection Act, and the US AI Bill of Rights – enterprises are juggling multiple frameworks that evolve constantly. Gartner predicts that by 2026, 50% of companies will have formal AI risk management programs, up from just 10% in 2023.

How to Fix It:

- Align governance programs with global standards like ISO/IEC 42001 and NIST AI RMF

- Automate consent management and data subject rights workflows

- Maintain a compliance dashboard to track data usage across jurisdictions

- Establish a regulatory watch team for horizon scanning and policy updates

6. Transparency and Explainability Gaps

Many AI models, especially deep learning or transformer-based ones are black boxes. Business leaders, users, and regulators all want to know: Why did the model make that decision? If you can’t answer, trust and adoption plummet.

How to Fix It:

- Use explainable AI (XAI) techniques or counterfactual explanations

- Create model documentation (model cards, data statements, ethics assessments)

- Embed human-in-the-loop review steps for high-impact decisions

- Include business context and confidence scores in model outputs

Best Practices in AI Data Governance

To operationalize trustworthy and scalable AI, organizations must move from ad-hoc rules to structured, enterprise-wide governance.

The following six best practices represent a modern, forward-looking governance playbook for 2025 and beyond:

1. Adopt a Unified Governance Framework

The first step is to consolidate data quality, privacy, compliance, ethics, and model risk in one enterprise-wide policy. Many organizations continue to treat these domains in silos, creating fragmented oversight and operational friction.

A consolidated framework ensures that governance isn’t just reactive but embedded into design. This involves collaboration between legal, compliance, data science, and business leadership to define clear responsibilities and thresholds for acceptable AI behavior.

Forward-thinking companies align their governance models with global standards such as the NIST AI Risk Management Framework or the newly formalized ISO/IEC 42001:2023, which provides a structured approach for AI management systems.

Discover why AI data quality is the key to unlocking AI success and how poor data can silently derail even the most advanced AI systems.

2. Automate Metadata and Lineage Tracking

In the AI lifecycle, where data flows across distributed architectures, cloud platforms, and hybrid environments, manual tracking becomes quickly outdated. Automated lineage solutions not only capture the origins, transformations, and destinations of datasets but also enhance auditability and compliance readiness.

According to a Gartner report, by 2026, 60% of large enterprises will have deployed data lineage tools to address regulatory and operational risk – up from just 20% in 2023. Platforms like Qinfinite are being adopted to enable dynamic, real-time visibility across data pipelines and AI models. Define Access Controls and Permissions

In 2024 alone, over 30% of reported data breaches stemmed from insider threats or accidental leaks, according to IBM’s “Cost of a Data Breach” report.

To mitigate this, enterprises are implementing strict, role-based access policies across their AI training pipelines and datasets. These policies ensure only authorized personnel can interact with sensitive data or initiate model retraining. Moreover, access logs and periodic audits help enforce accountability and flag unusual behavior.

3. Govern Generative AI Use Cases

Generative AI adds a new layer of complexity to data governance. These models are trained on massive, often opaque datasets scraped from the open web – raising risks around misinformation, toxicity, and intellectual property violations. Enterprises must now put rigorous safeguards in place to vet training sources, apply content moderation, and prevent harmful outputs.

For example, organizations are increasingly using prompt filtering, toxicity detection APIs and even proprietary guardrails for LLM applications. Failure to do so can result in reputational damage, as seen in multiple cases where chatbots generated offensive or misleading content. A 2024 McKinsey study found that 42% of enterprises deploying GenAI cited “content integrity and governance” as one of their top three operational risks.

4. Continuously Monitor AI Outcome

Unlike static software, AI models degrade over time – a phenomenon known as model drift. If not detected early, drift can lead to inaccurate predictions or unfair outcomes, especially in regulated sectors like finance or healthcare. Enterprises are now embedding tools for real-time monitoring of model behavior, bias, and performance deviation.

According to a State of AI Governance survey, 57% of respondents have implemented some form of bias detection, while 45% use drift monitoring tools integrated into MLOps pipelines. For high-impact decisions, organizations also include human-in-the-loop oversight, where experts validate AI outputs before they are acted upon.

5. Educate Stakeholders on Responsible AI

Perhaps the most overlooked – but vital – best practice is educating stakeholders on responsible AI. Governance is not just about tools and policies; it’s about people. From developers and data scientists to product managers and C-suite executives, everyone must understand their role in stewarding AI responsibly. Leading organizations are institutionalizing this through continuous education programs, scenario-based workshops, and published guidelines that reinforce ethical practices.

Salesforce, for example, launched an internal “AI Ethics Bootcamp” for its employees in 2024 to promote responsible development practices – a move that has since been emulated by others in the industry.

Together, these best practices form the bedrock of a resilient and agile governance strategy – one that not only mitigates risks but builds stakeholder trust, regulatory alignment, and long-term AI sustainability.

A Look Ahead: What’s Next?

With the EU AI Act going live and regulations expected to tighten globally, we will see data governance evolve into a default capability. AI governance will soon be treated on par with cybersecurity and financial auditing. In fact, Gartner predicts that by 2026, 50% of large enterprises will have formal AI risk management programs in place, up from less than 10% in 2023. Similarly, IDC forecasts the global AI governance software market to cross $5 billion in value by 2027.

Governments in the US, UK, India, and Australia are also drafting AI-specific regulatory frameworks. Proactive organizations are already aligning with international standards such as ISO/IEC 42001 and the NIST AI Risk Management Framework to get ahead of compliance demands.

For businesses, this is both a challenge and an opportunity. Those who embed governance into their AI strategy now will:

- Innovate with confidence

- Meet regulatory expectations

- Reduce reputational and legal risks

- Improve stakeholder trust and adoption

- Differentiate their brand as a responsible AI leader

Final Thoughts

AI might move at the speed of innovation but trust still moves at the speed of governance.

In 2025 and beyond, the real differentiator in AI isn’t just speed or scale – it’s accountability. And that starts with the data. Strong data governance is no longer a backend compliance task; it’s the frontline enabler of ethical, explainable, and enterprise-grade AI.

Done right, it doesn’t slow you down – it clears the runway for faster, safer innovation. It ensures that every insight generated, every model deployed, and every decision made with AI is backed by quality, fairness, and transparency.

And that’s exactly where Quinnox’s intelligent application management platform, Qinfinite comes in. Qinfinite embeds governance into the very DNA of your AI workflows – automating lineage, securing access, monitoring bias, and ensuring compliance at scale. It’s governance that doesn’t just protect your data – it powers your AI advantage. Because when your data is trusted, your AI can be too. Ready to lead with confidence?

Talk to our experts and see how Quinnox can help govern your AI, responsibly.

FAQs About Application Testing

Data governance for AI refers to the policies, processes, and technologies that ensure data used in AI systems is accurate, secure, ethical, and compliant. It’s critical because AI outcomes are only as trustworthy as the data that powers them.

AI can automate data classification, detect anomalies, monitor compliance, and track data lineage in real-time—making governance more scalable and adaptive across large, complex data ecosystems.

Key challenges include managing bias in training data, tracking data provenance, integrating siloed systems, ensuring regulatory compliance, and maintaining transparency in black-box AI models.

Start with a unified governance framework, automate metadata tracking, enforce access controls, vet training data, monitor model outcomes, and educate stakeholders on responsible AI use.