Imagine a world where businesses operate at lightning speed, decisions are made in milliseconds, and machines predict customer needs before they even arise. This is the reality AI infrastructure is unlocking today. With 81% of executives prioritizing AI adoption (Flexential Report), AI is clearly no longer a futuristic vision; it is the backbone of modern enterprises.

Take Amazon, for example: its AI-powered supply chain optimizes inventory management, reducing delivery delays by 30% and saving billions annually. Meanwhile, JPMorgan Chase uses AI-driven fraud detection to analyze more than 5,000 variables per transaction, cutting fraud losses by 40%.

But here’s the challenge – 44% of organizations struggle with outdated IT infrastructure, limiting their ability to scale up AI solutions. Without robust computing power, seamless networking, and scalable storage, AI initiatives face bottlenecks and inefficiencies.

So, how can businesses build an AI infrastructure that delivers speed, agility, and accuracy? In this blog, we explore key components, best practices, and implementation strategies to help companies harness AI’s full potential.

A recent survey found that 48% of M&A professionals are now using AI in their due diligence processes, a substantial increase from just 20% in 2018, highlighting the growing recognition of AI’s potential to transform M&A practices.

A Deep Dive into AI Infrastructure

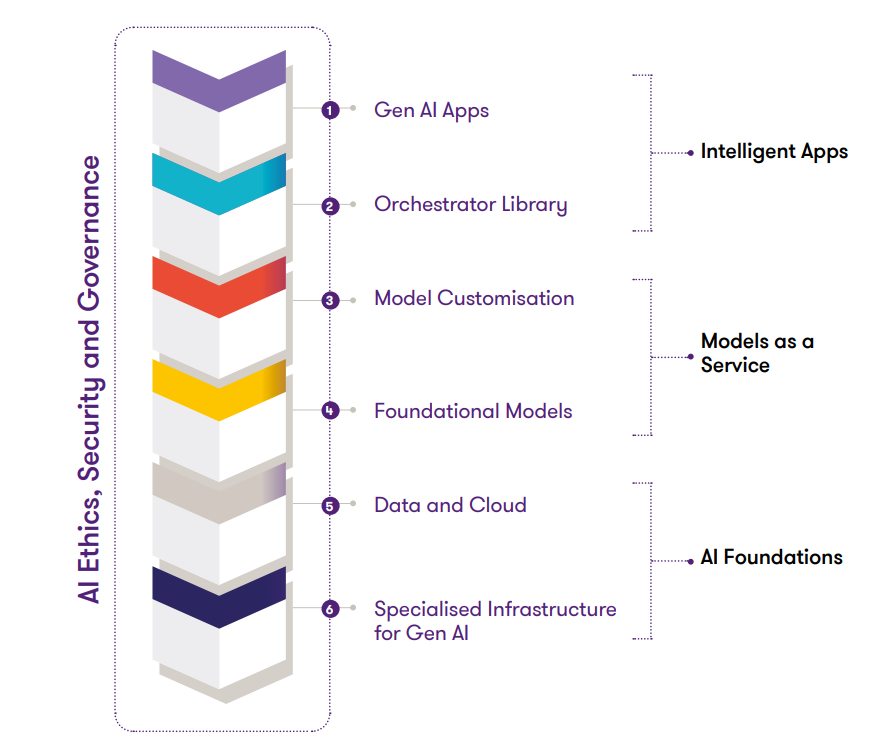

AI infrastructure is the foundation that supports artificial intelligence applications, enabling them to process vast amounts of data efficiently. It integrates hardware, software, networking, and data management solutions to optimize AI workloads, ensuring scalability, speed, and compliance.

A well-structured AI infrastructure provides seamless data flow to AI models, enough computing power to process complex algorithms, room to scale as AI demand grows, and secure, compliant frameworks for AI governance.

With 90% of enterprises deploying generative AI, the demand for reliable AI infrastructure is skyrocketing, pushing organizations to upgrade their capabilities.

How AI Infrastructure Works

AI infrastructure supports the full lifecycle of AI models, from data acquisition and training through deployment. Here’s how it works:

1. Data Acquisition & Storage

- AI models require diverse datasets, stored in structured or unstructured formats using databases, data lakes, and cloud storage.

- High-performance storage solutions ensure rapid access to large datasets, reducing latency in model training.

2. Preprocessing & Transformation

- Raw data undergoes cleaning, feature extraction, and transformation to enhance usability.

- Automated data pipelines run these preprocessing steps consistently, so every training run sees data prepared the same way.

3. Computational Processing

- AI workloads require high computational power, often relying on GPUs, TPUs, or distributed computing environments.

- Parallel processing enables efficient handling of deep learning models.

4. Model Training & Optimization

- Models are trained by iteratively adjusting their parameters (for example, neural network weights) to minimize prediction error.

- Ongoing evaluation and tuning refine model performance, reducing bias and improving accuracy.

5. Deployment & Inference

- Once trained, models are deployed in production environments, integrated into applications or APIs.

- AI infrastructure ensures real-time inference capabilities, making intelligent decisions on incoming data.

6. Security & Compliance

- AI frameworks adhere to industry regulations (GDPR, HIPAA) and implement encryption, access controls, and ethical AI guidelines to prevent data breaches and bias.
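
To make the stages above concrete, here is a deliberately tiny, pure-Python sketch of the lifecycle. It assumes a hypothetical in-memory dataset standing in for a data lake and a one-feature linear model trained with gradient descent; the function names are illustrative only. Hardware acceleration (step 3) and security controls (step 6) are outside its scope.

```python
from dataclasses import dataclass

# 1. Data acquisition: a hypothetical in-memory dataset standing in for a data lake.
RAW_RECORDS = [
    {"x": "1.0", "y": "3.1"},
    {"x": "2.0", "y": "4.9"},
    {"x": None, "y": "6.0"},   # incomplete record, dropped during preprocessing
    {"x": "3.0", "y": "7.2"},
    {"x": "4.0", "y": "8.8"},
]

def preprocess(records):
    """2. Preprocessing: drop incomplete rows and convert strings to floats."""
    clean = []
    for r in records:
        if r["x"] is None or r["y"] is None:
            continue
        clean.append((float(r["x"]), float(r["y"])))
    return clean

@dataclass
class LinearModel:
    w: float = 0.0
    b: float = 0.0

    def predict(self, x: float) -> float:
        return self.w * x + self.b

def train(data, lr=0.02, epochs=3000) -> LinearModel:
    """4. Training: full-batch gradient descent on mean squared error."""
    model = LinearModel()
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (model.predict(x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (model.predict(x) - y) for x, y in data) / n
        model.w -= lr * grad_w
        model.b -= lr * grad_b
    return model

def mse(model, data) -> float:
    """Mean squared error, used here as a simple evaluation metric."""
    return sum((model.predict(x) - y) ** 2 for x, y in data) / len(data)

if __name__ == "__main__":
    data = preprocess(RAW_RECORDS)
    model = train(data)
    print(f"w={model.w:.2f} b={model.b:.2f} mse={mse(model, data):.3f}")
    # 5. Inference: serve a prediction for a new input.
    print(f"prediction for x=5: {model.predict(5.0):.2f}")
```

In a real deployment each stage would be a separate service or job, but the hand-offs (raw records, cleaned features, trained parameters, predictions) are the same.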

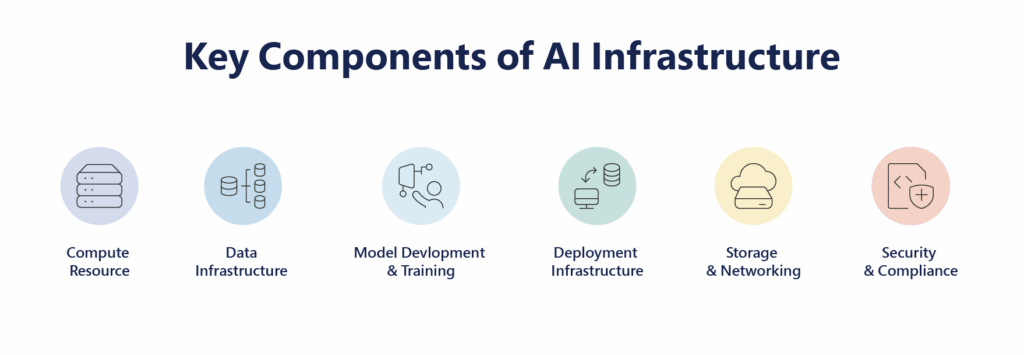

Key Components of Modern AI Infrastructure

Building a high-performance AI infrastructure is like assembling a symphony of specialized tools and technologies—each playing a distinct role to ensure data flows seamlessly, models train faster, and predictions are served reliably.

Here’s a deep dive into the foundational components that power enterprise-grade AI systems:

1. Compute Resources (CPUs, GPUs, TPUs)

AI workloads, especially deep learning, demand high computational power to process vast datasets efficiently. The right compute architecture can reduce model training time from weeks to hours, enabling faster AI innovation.

- GPUs (Graphics Processing Units) are the gold standard for AI training due to their parallel computing ability. NVIDIA rates a single A100 GPU at up to 20x the performance of its previous GPU generation, and GPUs in general outpace CPUs by a wide margin on the matrix math that deep learning relies on.

- TPUs (Tensor Processing Units), developed by Google, are designed specifically for machine learning and excel at matrix-heavy operations. Google uses TPUs to power products like Google Translate and Gmail’s smart reply.

- Edge processors are compact compute units embedded in IoT devices or autonomous systems. For example, Tesla’s Full Self-Driving computer leverages edge AI to make real-time driving decisions without depending on cloud latency.

2. Data Infrastructure (Lakes, Pipelines, Warehouses)

Without a robust data foundation, AI models lack context and accuracy. AI infrastructure must support scalable data storage, processing, and accessibility.

- Data Lakes for storing unstructured and semi-structured data.

- Data Warehouses for structured, analytics-ready data.

- ETL/ELT Pipelines for data transformation and enrichment.

- Real-Time Streaming for time-sensitive data.

- Metadata & Lineage Tools for data tracking and governance.

According to McKinsey, companies investing in AI-powered data infrastructure see 2.5x higher returns on AI initiatives.

For example, a retail company using AI to recommend products needs its customer purchase history, browsing behavior, and inventory data all flowing smoothly into its model. Without a solid data infrastructure, AI insights are often inaccurate or delayed.
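
As a minimal illustration of an ETL pipeline for the retail example, the sketch below extracts hypothetical purchase events, transforms them (validation, type casting, enrichment with an inventory category), and loads them into an in-memory SQLite table standing in for a data warehouse. All table names, field names, and sample records are assumptions for illustration; production pipelines would typically use dedicated orchestration and warehouse tooling.

```python
import sqlite3

# Hypothetical source data: raw purchase events and an inventory lookup table.
RAW_EVENTS = [
    {"customer_id": "c1", "sku": "A100", "qty": "2"},
    {"customer_id": "c2", "sku": "B200", "qty": "1"},
    {"customer_id": "", "sku": "A100", "qty": "5"},    # missing customer: rejected
    {"customer_id": "c1", "sku": "C300", "qty": "x"},  # malformed quantity: rejected
]
INVENTORY = {"A100": "electronics", "B200": "books", "C300": "toys"}

def extract():
    """Extract: in practice this would read from logs, APIs, or a data lake."""
    return list(RAW_EVENTS)

def transform(events):
    """Transform: validate, cast types, and enrich each event with a category."""
    rows = []
    for e in events:
        if not e["customer_id"] or not e["qty"].isdigit():
            continue  # reject records that would corrupt downstream features
        rows.append((e["customer_id"], e["sku"], int(e["qty"]),
                     INVENTORY.get(e["sku"], "unknown")))
    return rows

def load(conn, rows):
    """Load: write analytics-ready rows into a warehouse-style table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS purchases "
        "(customer_id TEXT, sku TEXT, qty INTEGER, category TEXT)"
    )
    conn.executemany("INSERT INTO purchases VALUES (?, ?, ?, ?)", rows)
    conn.commit()

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract()))
    query = "SELECT category, SUM(qty) FROM purchases GROUP BY category ORDER BY category"
    for row in conn.execute(query):
        print(row)  # ('books', 1) then ('electronics', 2)
```

Rejecting bad records in the transform step, rather than at query time, is what keeps the warehouse "analytics-ready" for the model.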

3. Model Development & Training Environments

Building and training AI models requires sophisticated development environments that enable collaboration, experimentation, and performance tracking. Machine learning frameworks such as TensorFlow and PyTorch provide libraries and modules for creating a wide range of AI models. Development tools such as Jupyter notebooks complement these frameworks with interactive environments where data scientists can iterate quickly and visualize results in real time.

As model complexity grows, so does the need for distributed training environments. For instance, OpenAI trained GPT-4 using distributed compute clusters running thousands of GPUs in parallel, an approach that would be infeasible without optimized training orchestration.

According to Stanford AI Index Research, AI model training time has been reduced by 80% in the last five years due to advancements in distributed computing.
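
A common pattern behind distributed training is data parallelism: each worker computes gradients on its own shard of the data, the gradients are averaged (an "all-reduce"), and every replica applies the same update. The sketch below simulates this in a single process with a toy one-weight model; the worker count, learning rate, and synthetic data are assumptions, and it is not a description of how any particular lab trains its models. Averaging per-shard mean gradients equals the global mean gradient here only because the shards are equal in size.

```python
from statistics import fmean

def shard(data, num_workers):
    """Split the dataset into interleaved shards, one per worker."""
    return [data[i::num_workers] for i in range(num_workers)]

def local_gradient(w, shard_data):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return fmean(2 * (w * x - y) * x for x, y in shard_data)

def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker ends up with the average."""
    return fmean(values)

def data_parallel_train(data, num_workers=4, lr=0.01, steps=200):
    w = 0.0  # every replica starts with identical weights
    shards = shard(data, num_workers)
    for _ in range(steps):
        grads = [local_gradient(w, s) for s in shards]  # would run in parallel on real workers
        w -= lr * all_reduce_mean(grads)                # identical update on every replica
    return w

if __name__ == "__main__":
    # Synthetic data drawn from y = 3x, so the learned weight should approach 3.
    data = [(x / 10, 3 * x / 10) for x in range(1, 41)]
    print(f"learned w = {data_parallel_train(data):.3f}")
```

On real clusters the all-reduce is handled by communication libraries over fast interconnects, which is why networking features prominently in AI infrastructure.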

4. Deployment Infrastructure (Inference Engines + CI/CD for AI)

After a model is built, it needs to be deployed, meaning it must be made available to real users or systems so it can make decisions in real time. This is where deployment infrastructure comes in: it lets teams embed their models into applications or devices that generate predictions or insights on demand.

Core components include:

- Model Serving Platforms that host trained models and answer prediction requests

- Model Versioning & Rollback, so teams can track every release and revert to a known-good version if a new model underperforms

- API Gateways that expose inference endpoints to applications

- CI/CD Pipelines for MLOps that automate testing and release of new model versions

According to Gartner, AI inference workloads account for 60% of cloud computing costs for enterprises.
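
Versioning and rollback can be illustrated with a small in-memory registry. The `ModelRegistry` class below is hypothetical (it is not the API of any serving platform) and treats models as plain Python callables; it records deployment order so that a faulty release can be reverted and inference traffic routed back to the previous version.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Predictor = Callable[[float], float]

@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry that tracks model versions for serving."""
    versions: Dict[str, Predictor] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # deployment order, live version last

    def register(self, version: str, model: Predictor) -> None:
        if version in self.versions:
            raise ValueError(f"version {version} already registered")
        self.versions[version] = model

    def deploy(self, version: str) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        self.history.append(version)

    def rollback(self) -> str:
        """Revert serving to the previously deployed version and return its name."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]

    @property
    def live(self) -> str:
        return self.history[-1]

    def predict(self, x: float) -> float:
        """Route an inference request to whichever version is live."""
        return self.versions[self.live](x)

if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("v1", lambda x: 2 * x)
    registry.register("v2", lambda x: 2 * x + 100)  # a faulty release
    registry.deploy("v1")
    registry.deploy("v2")
    print(registry.live, registry.predict(1.0))  # v2 102.0
    registry.rollback()
    print(registry.live, registry.predict(1.0))  # v1 2.0
```

Keeping old versions registered, rather than overwriting them, is what makes rollback a fast routing change instead of a retraining job.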

5. Storage & Networking

AI workloads demand high I/O throughput and reliable data movement – especially during model training and inference.

- High-Performance Storage: NVMe SSDs and distributed file systems ensure low latency and high bandwidth.

- High-Speed Networking: Technologies like InfiniBand and 5G (for edge use cases) reduce latency and shorten model training times.

- Hybrid/Multi-Cloud Architecture: Flexibility to move and access data across on-prem, cloud, and edge environments. This is especially critical for multinational enterprises with data residency laws.

For instance, AI-powered content recommendation systems (such as those at Netflix and YouTube) rely on real-time data pipelines and high-throughput storage.

6. Governance, Security & Compliance

AI systems often touch sensitive or regulated data. Ensuring secure access, fairness, and compliance is essential to avoid reputational or legal risks.

Key governance capabilities:

- Encryption: Both at-rest and in-transit to protect data integrity

- Access Control: Role-based access (RBAC), audit logs, and authentication

- Bias & Fairness Audits: Regular evaluation of models for bias (gender, race, etc.)

- Explainability Tools: To provide transparency and traceability in model decisions

- Compliance Frameworks: GDPR, HIPAA, ISO 27001 must be embedded into infrastructure design
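
Role-based access control and audit logging work together: every request is checked against the caller's role, and every decision, allowed or denied, is recorded. The sketch below assumes a hypothetical role-to-permission mapping and an in-memory list as the audit log; real systems would load policies from configuration and write to tamper-evident storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real systems load this from policy config.
ROLE_PERMISSIONS = {
    "data_scientist": {"read:features", "train:model"},
    "ml_engineer": {"read:features", "deploy:model"},
    "auditor": {"read:audit_log"},
}

AUDIT_LOG = []  # append-only record of every access decision

def check_access(user: str, role: str, permission: str) -> bool:
    """Allow the action only if the role grants it; unknown roles are denied by default."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed

if __name__ == "__main__":
    print(check_access("alice", "data_scientist", "train:model"))   # True
    print(check_access("alice", "data_scientist", "deploy:model"))  # False
    print(len(AUDIT_LOG))  # 2
```

Logging denied requests as well as granted ones is the point: the audit trail is what lets compliance teams reconstruct who tried to touch a model or dataset.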

Together, these components create a scalable, flexible, and resilient environment capable of supporting sophisticated AI applications across industries.

Challenges in Scaling AI Infrastructure

AI infrastructure faces multiple hurdles as businesses attempt to scale AI solutions efficiently. Some key challenges include:

- Computational Power Constraints: AI workloads demand high-performance hardware, often requiring specialized GPUs and TPUs.

- Infrastructure Costs: Expanding AI infrastructure involves significant investment in cloud computing, storage, and networking.

- Talent Shortage: A lack of experienced AI engineers and data scientists remains a major barrier for enterprises.

- Leadership Support: AI adoption requires strategic alignment and executive buy-in to drive innovation.

The image below visually represents these challenges, offering insights into how organizations navigate AI infrastructure scalability. (Source: ClearML Research)

Comparison Table: AI Infrastructure vs. Traditional IT Infrastructure

| Feature | AI Infrastructure | Traditional IT Infrastructure |
|---|---|---|
| Computational Power | GPUs, TPUs, AI accelerators | CPUs |
| Data Processing | Real-time streaming, parallel processing | Batch processing, sequential execution |
| Scalability | Elastic cloud computing, distributed systems | Fixed resources, on-premises servers |
| Networking | Low-latency, high-bandwidth (InfiniBand, RDMA) | Standard Ethernet, moderate bandwidth |
| Storage | Distributed, scalable storage (data lakes, object storage) | Centralized databases, structured storage |
| Security & Compliance | AI-specific governance, ethical AI frameworks | Standard IT security protocols |
| Energy Efficiency | Optimized for AI workloads, high power consumption | General-purpose computing, lower power needs |

Benefits of AI Infrastructure

AI infrastructure is transforming industries by enhancing efficiency, scalability, and decision-making. Businesses investing in AI infrastructure experience higher productivity, cost savings, and competitive advantages.

1. Increased Computational Efficiency

AI models require high-performance computing (HPC) to process vast datasets. With AI workloads consuming 10x more computing power than traditional IT applications, enterprises are shifting to GPUs, TPUs, and AI accelerators for faster processing.

2. Cost Reduction & Operational Efficiency

AI-driven automation reduces manual labor costs and streamlines operations. According to Grant Thornton Research, AI-powered automation can cut operational expenses by 30-50%, improving overall efficiency.

3. Enhanced Scalability

According to the AI Infrastructure Alliance, 90% of enterprises are deploying AI-specific infrastructure. Cloud-based AI solutions let organizations expand computing power on demand, removing infrastructure bottlenecks as AI applications scale.

4. Improved Decision-Making

AI infrastructure enables real-time analytics, helping businesses make data-driven decisions. Companies using AI-powered analytics report a 25% increase in decision-making speed, leading to better strategic outcomes.

5. Faster Innovation

AI infrastructure fosters innovation by enabling advanced AI models for predictive analytics, automation, and personalization. According to the AI Infrastructure Alliance, 78% of organizations now use AI, with adoption in tech (85%), retail (68%), and finance (61%) driving competitive growth.

Implementation Strategies for AI Infrastructure

To successfully deploy AI infrastructure, businesses must follow structured implementation strategies that ensure scalability, security, and efficiency. AI infrastructure is not a one-size-fits-all solution; it must be tailored to an organization’s unique operational needs, available resources, and long-term AI objectives.

Companies must invest in the right computing power, optimized data pipelines, and security frameworks to fully leverage AI capabilities.

1. Assess AI Readiness

Organizations must evaluate their current IT ecosystem, available data assets, and AI maturity level before implementing infrastructure upgrades. This ensures businesses identify technology gaps and resource limitations, allowing them to make informed decisions.

2. Invest in AI Talent

Deploying AI infrastructure requires skilled professionals in data science, cloud architecture, and machine learning. Companies should focus on training existing employees, partnering with AI research institutes, and hiring specialized AI engineers to ensure smooth execution.

3. Choose the Right AI Stack

Selecting the right AI tools, frameworks, and computing resources is crucial for achieving optimal model performance. Businesses must assess their hardware needs (GPUs, TPUs), cloud storage capabilities, and model development platforms to align AI infrastructure with their goals.

4. Optimize Data Management

AI models rely on structured, clean, and high-quality data for accurate predictions. Organizations should implement automated data pipelines, streamline data governance policies, and ensure data integrity before feeding AI algorithms.

5. Prioritize Security & Compliance

Because AI systems handle sensitive business data, organizations must implement robust cybersecurity measures and comply with applicable AI regulations and ethical guidelines. Encryption, access controls, and privacy compliance should be key priorities in AI infrastructure planning.

6. Monitor AI Performance & Continuous Improvement

Deploying AI infrastructure is not a one-time task—it requires constant performance tracking, model refinement, and proactive troubleshooting. Using MLOps frameworks, businesses can identify efficiency bottlenecks and ensure continuous AI optimization.
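
One widely used monitoring signal is data drift: whether the inputs a model sees in production still resemble its training data. The sketch below computes the Population Stability Index (PSI) between a baseline sample and a live sample over fixed bins. The bin edges, the smoothing constant, and the 0.1 / 0.25 thresholds are assumptions (the thresholds are a common rule of thumb, not a standard), and the sample data is synthetic.

```python
import bisect
import math

def histogram(values, edges):
    """Fraction of values falling in each bin defined by ascending edges."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue  # out-of-range values are ignored in this sketch
        i = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[i] += 1
    total = sum(counts) or 1
    return [c / total for c in counts]

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    e = histogram(expected, edges)
    a = histogram(actual, edges)
    return sum((ai - ei) * math.log((ai + eps) / (ei + eps)) for ei, ai in zip(e, a))

def drift_status(score):
    """Rule-of-thumb interpretation; tune thresholds per feature in practice."""
    if score < 0.1:
        return "stable"
    return "moderate drift" if score < 0.25 else "significant drift"

if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    baseline = [i / 100 for i in range(100)]     # training-time feature values
    live = [0.5 + i / 200 for i in range(100)]   # production values, shifted upward
    print(drift_status(psi(baseline, baseline, edges)))  # stable
    print(drift_status(psi(baseline, live, edges)))      # significant drift
```

A check like this can run on a schedule inside an MLOps pipeline and trigger an alert or a retraining job when a feature drifts.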

Best Practices for Successful AI Infrastructure Deployment

To ensure AI infrastructure operates effectively, businesses should adhere to the following best practices:

1. Design a Scalable Architecture

AI workloads will evolve over time, demanding elastic computing power and flexible infrastructure scaling. Organizations should choose cloud-native solutions that provide on-demand scalability and resource allocation flexibility.
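
On-demand scaling usually comes down to a proportional rule. The Kubernetes Horizontal Pod Autoscaler, for example, documents the formula desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below applies that rule to a pool of inference replicas; using GPU utilization as the metric and the replica bounds are assumptions for illustration.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Proportional scaling rule in the style of the Kubernetes HPA."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

if __name__ == "__main__":
    # 4 inference replicas, target 60% average GPU utilization (hypothetical numbers).
    print(desired_replicas(4, 90, 60))  # 6: overloaded, scale out
    print(desired_replicas(4, 30, 60))  # 2: underused, scale in
    print(desired_replicas(4, 65, 60))  # 4: within tolerance, no change
```

The tolerance band matters for AI serving in particular, because spinning GPU-backed replicas up and down repeatedly is slow and expensive.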

2. Standardize AI Governance & Ethical AI Policies

AI systems must be transparent, compliant, and ethically aligned with business goals. Companies should develop AI governance frameworks that outline data usage policies, bias mitigation strategies, and ethical decision-making standards.

3. Implement Cost-Efficient AI Infrastructure

AI infrastructure can be resource-intensive, making cost optimization essential. Businesses should evaluate hybrid cloud solutions, GPU/TPU cost efficiencies, and open-source AI tools to reduce overall expenditure.

4. Foster Cross-Team Collaboration

AI infrastructure deployment requires collaboration between IT, data science, and business strategy teams. Organizations should encourage knowledge sharing, interdepartmental training, and AI adoption workshops to align goals.

5. Build Resilient AI Models

Ensuring model reliability is key to successful AI applications. Businesses should implement fault-tolerant AI infrastructure, leverage edge computing for real-time analysis, and integrate disaster recovery plans.
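
Fault tolerance at the application level often means retrying a failing inference call with exponential backoff and, if it still fails, degrading gracefully to a simpler fallback model. The sketch below assumes the primary model signals outages by raising `ConnectionError`; the retry count, delays, and both models are hypothetical. The `sleep` parameter is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable

def resilient_predict(x: float,
                      primary: Callable[[float], float],
                      fallback: Callable[[float], float],
                      retries: int = 3,
                      base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> float:
    """Try the primary model with exponential backoff, then use the fallback."""
    for attempt in range(retries):
        try:
            return primary(x)
        except ConnectionError:
            if attempt < retries - 1:
                sleep(base_delay * 2 ** attempt)  # waits 0.1s, then 0.2s, ...
    return fallback(x)

if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_model(x: float) -> float:
        """Hypothetical primary model that fails twice before recovering."""
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("model server unavailable")
        return 2 * x

    print(resilient_predict(5.0, flaky_model, lambda x: 0.0, sleep=lambda s: None))  # 10.0
```

A cheap fallback (a cached result, a smaller model, or a rules-based default) keeps the application responsive while the primary model recovers.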

Give Wings to Your AI Dreams with the Right AI Infrastructure

From compute resources to data pipelines, secure deployments, and compliance, AI infrastructure forms the invisible engine driving today’s most intelligent enterprises. But as powerful as AI can be, its success depends entirely on the strength of the infrastructure behind it.

And that’s where most organizations hit a wall: costly configurations, slow deployments, talent gaps, and fragmented tools stall progress.

That’s where Quinnox AI (QAI) Studio comes in—your launchpad for AI success.

With more than 250 AI and data experts, 70+ real-world use cases, and 50+ pre-built accelerators, QAI Studio helps organizations leap over infrastructure hurdles. Whether you’re testing AI at a small scale or deploying enterprise-wide initiatives, its pre-configured, scalable environments eliminate the heavy lifting, so your teams can focus on building value, not just systems.

Because the future of AI isn’t just about algorithms—it’s about empowering people with the right infrastructure to create, innovate, and lead.

Get in touch with QAI Studio today and turn your AI ambitions into reality!

FAQs on AI Infrastructure

What is AI infrastructure?

AI infrastructure refers to the hardware, software, data systems, and networking tools required to support AI applications. It enables efficient data processing, model training, and real-time predictions at scale.

How is AI infrastructure used in enterprises?

In enterprises, AI infrastructure powers everything from data collection and storage to model development, deployment, and monitoring. It ensures AI systems run smoothly, securely, and with high performance to support business goals.

What are the core components of AI infrastructure?

Core components include high-performance computing (GPUs/TPUs), scalable data storage (data lakes/warehouses), development tools (ML frameworks), model deployment platforms, and governance tools for security and compliance.

What are the benefits of AI infrastructure?

It boosts productivity, speeds up innovation, improves decision-making, lowers operational costs, and provides scalable AI capabilities to meet growing business demands.

How does AI infrastructure differ from traditional IT infrastructure?

AI infrastructure is designed for high-speed data processing and complex model training, using tools like GPUs, real-time data streams, and AI-specific governance. Traditional IT focuses more on general-purpose computing with slower, sequential processing.