Introduction

Businesses are drowning in a sea of information from countless sources, including customer databases, sales records, social media, IoT devices, and more. But raw data alone isn’t enough. Without consistency and clarity, data becomes fragmented, unreliable, and difficult to harness effectively. This is where data standardization steps in as a critical foundation for turning chaos into clarity.

The impact of data standardization resonates across industries, from healthcare (more reliable patient records) to finance (sharper risk assessments) and retail (personalized customer journeys). When done right, it unlocks the true value of data by reducing errors, boosting analytics accuracy, and accelerating innovation.

In this blog, we’ll explore best practices for data standardization, real-world use cases demonstrating its power, and examples that highlight how companies are transforming their data into a competitive advantage. Whether you’re just starting your data journey or looking to refine your processes, understanding data standardization is key to thriving in a digital-first world.

What is Data Standardization?

Data standardization is the process of converting disparate data into a consistent format so that all elements across various sources have the same structure and meaning. In practical terms, this means ensuring that units, formats, and labels are unified across the dataset.

Example: If you’re evaluating the impact of commuting on employee satisfaction, and some people report distances in kilometers while others in miles, the analytics system might misinterpret the data unless a common unit is used. By converting all entries to kilometers (or miles), you enable meaningful comparisons and accurate insights.
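The commuting example can be sketched in a few lines of Python. This is a minimal illustration, assuming the survey stores each answer as a (distance, unit) pair; the accepted unit spellings are hypothetical. The conversion factor is exact, since the international mile is defined as 1.609344 km.

```python
# The international mile is defined as exactly 1.609344 km.
KM_PER_MILE = 1.609344

# Hypothetical unit spellings observed in the survey responses.
KM_UNITS = {"km", "kilometer", "kilometers", "kilometre", "kilometres"}
MILE_UNITS = {"mi", "mile", "miles"}


def to_km(distance: float, unit: str) -> float:
    """Convert a commuting distance to kilometers, the chosen standard unit."""
    unit = unit.strip().lower()
    if unit in KM_UNITS:
        return float(distance)
    if unit in MILE_UNITS:
        return distance * KM_PER_MILE
    # Unknown units are rejected rather than guessed.
    raise ValueError(f"unknown distance unit: {unit!r}")


responses = [(12, "km"), (5, "miles"), (8.5, "Kilometers")]
standardized = [round(to_km(d, u), 2) for d, u in responses]
print(standardized)  # [12.0, 8.05, 8.5]
```

Rejecting unrecognized units, instead of passing them through, keeps a single bad entry from silently skewing the comparison.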

Standardization not only avoids confusion but also helps ensure that conclusions drawn from the data are valid. The agreed units, formats, and labels work much like metadata (data about data), giving structure and interpretability to otherwise inconsistent information.

How to Standardize Data

Standardizing data is not just about converting formats. It requires a systematic approach built on rules, tools, and ongoing governance. Three kinds of business rules are commonly applied, usually with the help of dedicated tools:

1. Taxonomy Rules

Define and restrict allowable values. For instance, if only three product colors are allowed (Red, Green, Blue), these become the standard, and all other values are rejected.
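A taxonomy rule can be sketched as a check against an allowed set. This minimal Python example assumes the three colors above as the taxonomy; the case and whitespace cleanup before the check is an added assumption, not part of the rule itself.

```python
ALLOWED_COLORS = {"Red", "Green", "Blue"}


def apply_taxonomy(values, allowed=ALLOWED_COLORS):
    """Split values into those inside the taxonomy and those to reject."""
    accepted, rejected = [], []
    for value in values:
        candidate = value.strip().title()  # tolerate case/whitespace noise
        if candidate in allowed:
            accepted.append(candidate)
        else:
            rejected.append(value)  # keep the raw value for review
    return accepted, rejected


accepted, rejected = apply_taxonomy(["red", " Blue ", "Purple", "GREEN"])
# accepted == ["Red", "Blue", "Green"], rejected == ["Purple"]
```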

2. Reshape Rules

Transform data structures to align with the target system. A single dataset may need to be split into multiple tables or reformatted to fit the business use case.
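As an illustration of a reshape rule, the sketch below splits flat order rows into separate customer and order tables. The field names (`order_id`, `customer_id`, `customer_name`, `amount`) are hypothetical, and it assumes that when two rows disagree on a customer's name, the last one seen wins.

```python
from typing import Dict, List, Tuple


def reshape_orders(flat_rows: List[dict]) -> Tuple[List[dict], List[dict]]:
    """Split denormalized order rows into a customers table and an orders table."""
    customers: Dict[str, dict] = {}
    orders: List[dict] = []
    for row in flat_rows:
        cid = row["customer_id"]
        # One customer entry per ID; if names conflict, the last row seen wins.
        customers[cid] = {"customer_id": cid, "name": row["customer_name"]}
        orders.append(
            {"order_id": row["order_id"], "customer_id": cid, "amount": row["amount"]}
        )
    return list(customers.values()), orders


rows = [
    {"order_id": 1, "customer_id": "C1", "customer_name": "Acme", "amount": 250.0},
    {"order_id": 2, "customer_id": "C1", "customer_name": "Acme", "amount": 90.0},
    {"order_id": 3, "customer_id": "C2", "customer_name": "Globex", "amount": 40.0},
]
customers, orders = reshape_orders(rows)
```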

3. Semantic Rules

Ensure the data’s meaning aligns with its intended context. For example, the term “status” may have different interpretations across departments; standardization ensures uniform understanding.
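A semantic rule can be expressed as a mapping keyed by context as well as value. In this hypothetical sketch, "closed" means a won deal to Sales but a resolved ticket to Support; the department names and canonical labels are illustrative assumptions.

```python
# Hypothetical mapping: (department, local status) -> agreed enterprise meaning.
STATUS_SEMANTICS = {
    ("sales", "closed"): "WON",
    ("sales", "lost"): "LOST",
    ("support", "open"): "IN_PROGRESS",
    ("support", "closed"): "RESOLVED",
}


def canonical_status(department: str, status: str) -> str:
    """Translate a department-specific status into its shared meaning."""
    key = (department.strip().lower(), status.strip().lower())
    try:
        return STATUS_SEMANTICS[key]
    except KeyError:
        raise ValueError(
            f"no agreed meaning for status {status!r} in {department!r}"
        ) from None
```

Keying on the department, not just the status value, is what lets the same word carry two different, explicitly documented meanings.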

4. Tools:

Modern Master Data Management (MDM) platforms and data profiling tools automate much of the standardization process. These platforms profile data, apply rules, and streamline integration.

Data Normalization vs. Standardization: Decoding the Data Discipline

In the realm of data management, the terms normalization and standardization often come up. They are sometimes used interchangeably, but they differ in purpose and process. Understanding the distinction is crucial for businesses aiming to work with clean, reliable data. Both techniques improve data quality, but they tackle different challenges: normalization adjusts numerical values to a common scale, whereas standardization ensures that data follows consistent formats and structures. (In machine learning, "standardization" usually refers more narrowly to z-score scaling, one of the techniques covered later in this blog.)

Decoding these concepts helps organizations implement the right strategies to unlock accurate insights and drive smarter decisions.

Difference Between Data Normalization and Data Standardization

| Aspect | Data Normalization | Data Standardization |
|---|---|---|
| Objective | Adjust values to a common scale, usually between 0 and 1 | Enforce uniform format, semantics, and structure across data systems |
| Primary Purpose | Ensures equal weight in machine learning models, avoiding feature domination | Ensures data compatibility, integration readiness, and compliance |
| Use Cases | Machine learning, statistical analysis, predictive modeling | Data integration, enterprise reporting, regulatory auditing |
| Focus Area | Rescaling numerical values for relative comparison | Consistent representation of data fields and formats across systems |
| Example | Rescaling property size, price, and age in a real estate ML model | Converting all date formats to a standard YYYY-MM-DD format across global teams |
| Transformation Type | Numerical range transformation (e.g., min-max, z-score) | Structural, semantic, and syntactic alignment |
| Tools Used | Min-max scaler, Z-score standardizer, NumPy, Scikit-learn | MDM tools, data profiling tools, dictionaries, ETL platforms |
| Impact on Data | Values change but relationships are maintained | Meaning, structure, and clarity are improved without distorting values |
| When Applied | Before feeding data into models for better model performance | During data integration, cleaning, or preprocessing for business readiness |

Why is Standardizing Data Important?

Standardizing data plays a crucial role in improving communication and collaboration both within and between organizations. When everyone follows consistent data standards, teams can easily share, interpret, and use information without confusion or the need for time-consuming reformatting. This uniformity accelerates workflows and significantly reduces the risk of costly errors caused by inconsistent or misaligned data.

Beyond improving teamwork, standardized data is essential for compatibility with automated tools and advanced technologies like machine learning and artificial intelligence. These systems depend on clean, well-structured data to generate accurate, actionable insights, making standardization a foundational step in modern analytics.

Additionally, many industries operate under stringent regulations regarding data accuracy, privacy, and reporting. Maintaining standardized data helps organizations comply with these requirements, simplifying audits and regulatory checks by ensuring information is consistently recorded and managed.

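A related question is whether logistic regression requires standardized data. The algorithm itself does not strictly require it, but standardization often makes training smoother. When the model is fitted with gradient-based optimizers such as gradient descent, features on very different scales stretch the loss surface, so standardizing them usually gives faster and more reliable convergence. Second-order methods such as Newton-Raphson are far less sensitive to feature scale, because the Hessian already accounts for it. Standardization also matters when regularization is used, since the penalty then treats all coefficients on a comparable scale. In short, logistic regression still works on unstandardized data, but standardizing is a sensible default when feature scales vary widely.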

Top Data Standardization Techniques (with Examples)

Data standardization involves applying specific techniques to bring diverse datasets into a consistent, uniform format, improving accuracy and usability. The choice of method depends on the type of data and the intended purpose. Here are some widely used techniques:

1. Z-Score Normalization:

In analytics, standardization is often applied to numerical data such as scores. The Z-score method is a widely used way to normalize values and compare them across different scales.

This method rescales each value using the dataset’s mean and standard deviation. For example, if two tests are scored on different ranges, converting both sets of scores to z-scores makes them directly comparable.

A Z-score standardizes values by showing how many standard deviations a value is from the mean:

\[ Z = \frac{X - \mu}{\sigma} \]

where \(X\) is the individual data point, \(\mu\) is the mean, and \(\sigma\) is the standard deviation.

The resulting z-scores have a mean of 0 and a standard deviation of 1. This method is especially helpful when comparing variables with different units or scales, as it brings everything into a common frame of reference.
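The formula translates directly into code. This minimal sketch uses Python's standard `statistics` module and assumes the population standard deviation (`pstdev`), so the resulting scores have a standard deviation of exactly 1; `statistics.stdev` would give the sample version instead.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Return (x - mean) / std for each value, using the population std."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values identical: there is no spread to measure against.
        raise ValueError("z-scores are undefined when all values are equal")
    return [(x - mu) / sigma for x in values]


scores = [60, 70, 80, 90, 100]
print(z_scores(scores))  # the middle score (80) equals the mean, so its z-score is 0.0
```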

2. Min-Max Scaling:

This technique transforms numerical values to fit within a fixed range, typically 0 to 1. For instance, sales figures ranging from 100 to 10,000 can be scaled so that 100 becomes 0 and 10,000 becomes 1, allowing algorithms sensitive to scale to function effectively.
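Min-max scaling applies \(x' = a + \frac{(x - \min)(b - a)}{\max - \min}\) to map values into a target range \([a, b]\), which is \([0, 1]\) by default. A minimal Python sketch using the sales figures above:

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values so the smallest maps to new_min and the largest to new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("min-max scaling is undefined when all values are equal")
    span = hi - lo
    return [new_min + (x - lo) / span * (new_max - new_min) for x in values]


print(min_max_scale([100, 5050, 10000]))  # [0.0, 0.5, 1.0]
```

Because the minimum and maximum come from the data itself, a single extreme outlier compresses all other values into a narrow band. New values outside the original range also fall outside \([0, 1]\).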

3. One-Hot Encoding:

Categorical variables are converted into binary vectors, where each category is represented by a separate column with 0s and 1s. For example, a “Color” attribute with values “Red,” “Blue,” and “Green” becomes three columns – each indicating presence or absence of that color.
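The color example can be sketched as follows. This minimal version assumes a fixed, ordered category list, so every record gets the same columns in the same order, and unexpected categories raise an error instead of being silently dropped.

```python
def one_hot(values, categories):
    """Encode each value as a 0/1 vector over a fixed, ordered category list."""
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        if value not in index:
            raise ValueError(f"unexpected category: {value!r}")
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return encoded


COLORS = ["Red", "Blue", "Green"]
print(one_hot(["Red", "Green", "Blue", "Green"], COLORS))
# [[1, 0, 0], [0, 0, 1], [0, 1, 0], [0, 0, 1]]
```

In practice, libraries such as pandas (`get_dummies`) and scikit-learn (`OneHotEncoder`) provide the same transformation.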

4. Data Parsing and Formatting:

Dates, phone numbers, and addresses often come in multiple formats. Standardization involves converting all entries into a single format such as YYYY-MM-DD for dates or E.164 for phone numbers to ensure consistency across systems.
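A minimal standard-library sketch of date and phone formatting follows. The list of accepted date formats and the default country code `"1"` are assumptions. Ambiguous dates such as 03/04/2024 are read with the first matching pattern, so the order must reflect what is known about each source. For production phone handling, a dedicated library such as Google's libphonenumber (Python port: `phonenumbers`) is far more robust.

```python
import re
from datetime import datetime

# Assumed input formats, tried in order (day/month before month/day here).
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y", "%d-%b-%Y"]


def to_iso_date(text: str) -> str:
    """Return the date in YYYY-MM-DD (ISO 8601) form."""
    cleaned = text.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"unrecognized date format: {text!r}")


def to_e164(raw: str, default_country_code: str = "1") -> str:
    """Return the phone number in E.164 form: '+', country code, subscriber number."""
    digits = re.sub(r"\D", "", raw)  # drop spaces, dashes, parentheses, '+'
    if raw.strip().startswith("+"):
        normalized = digits  # already carries a country code
    elif len(digits) == 10:
        # Assumption: bare 10-digit numbers belong to the default country.
        normalized = default_country_code + digits
    else:
        raise ValueError(f"cannot infer a country code for {raw!r}")
    if not normalized or len(normalized) > 15:  # E.164 allows at most 15 digits
        raise ValueError(f"not a valid E.164 length: {raw!r}")
    return "+" + normalized
```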

5. Regular Expressions:

Powerful pattern-matching tools like regex help clean and validate data by detecting unwanted characters, correcting formats, or extracting relevant information. For example, regex can enforce that all email addresses follow the same structure.
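For the email example, a sketch might trim and lowercase each address and then check it against a pattern. The pattern here is a deliberate simplification, much looser than full RFC 5322. Lowercasing the whole address is also an assumption: most providers treat the local part case-insensitively, but the standard does not require them to.

```python
import re
from typing import Optional

# Simplified pattern: local part, "@", then a domain ending in "." plus 2+ letters.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def clean_email(raw: str) -> Optional[str]:
    """Trim and lowercase an address, or return None if it does not match."""
    candidate = raw.strip().lower()
    # fullmatch requires the whole string to match, not just a substring.
    if EMAIL_PATTERN.fullmatch(candidate) is None:
        return None
    return candidate
```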

6. Lookup Tables and Dictionaries:

To align variations of terms, organizations use mapping tables. For example, abbreviations like “NY,” “N.Y.,” and “New York” can all be mapped to a single standardized value, preventing duplication and confusion.
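A mapping table can be sketched as a dictionary lookup applied after light cleanup. The table contents and the choice of postal codes as the standard values are hypothetical. Unmapped inputs return `None` so they can be routed for review, not guessed.

```python
import re
from typing import Optional

# Hypothetical mapping table: cleaned variant -> standardized value.
STATE_LOOKUP = {
    "NY": "NY",
    "NEW YORK": "NY",
    "CA": "CA",
    "CALIF": "CA",
    "CALIFORNIA": "CA",
}


def standardize_state(raw: str) -> Optional[str]:
    """Map a free-text state name or abbreviation to its standard code."""
    key = re.sub(r"[^A-Z ]", "", raw.upper())  # drop periods and other punctuation
    key = " ".join(key.split())  # collapse repeated whitespace
    return STATE_LOOKUP.get(key)
```

Cleaning the key first means "N.Y.", "ny", and " new  york " all collapse to entries already in the table, which keeps the table small.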

7. Metadata Tagging:

Adding descriptive metadata to data entries provides additional context and traceability. Tags might include source information, timestamps, or data sensitivity levels, which help maintain data quality and support governance.
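One way to attach such tags is to wrap each record in a small immutable envelope. In this sketch, the sensitivity levels and field names are hypothetical, and the ingestion timestamp is recorded in UTC using ISO 8601.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical sensitivity levels; real ones would come from a governance policy.
SENSITIVITY_LEVELS = {"public", "internal", "confidential", "restricted"}


def _utc_now() -> str:
    """ISO 8601 timestamp in UTC, ending in '+00:00'."""
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class TaggedRecord:
    payload: dict
    source: str  # originating system, e.g. "crm"
    sensitivity: str
    ingested_at: str = field(default_factory=_utc_now)


def tag_record(payload: dict, source: str, sensitivity: str) -> TaggedRecord:
    """Wrap a record with source, sensitivity, and ingestion-time metadata."""
    if sensitivity not in SENSITIVITY_LEVELS:
        raise ValueError(f"unknown sensitivity level: {sensitivity!r}")
    # Copy the payload so later edits to the caller's dict do not alter the record.
    return TaggedRecord(payload=dict(payload), source=source, sensitivity=sensitivity)
```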

Each of these techniques plays a crucial role in cleaning and harmonizing data, enabling businesses to work with accurate, reliable information that drives better decisions.

Use Cases of Data Standardization

Data standardization is a critical process that supports a wide range of business functions across industries. By ensuring data consistency and accuracy, organizations can unlock deeper insights and streamline operations. Here are some key use cases where data standardization proves invaluable:

Utility

Utilities handle vast amounts of data from smart meters, billing systems, and customer service platforms. Standardizing this data ensures accurate consumption tracking, timely billing, and streamlined outage management. It also supports regulatory compliance by maintaining consistent reporting formats.

Logistics

In logistics, data comes from shipping manifests, GPS tracking, warehouse inventories, and delivery schedules. Standardization allows for real-time visibility into supply chains, reduces errors in shipment data, and improves coordination between carriers, suppliers, and customers.

BFSI (Banking, Financial Services, and Insurance)

The BFSI sector processes diverse data such as transaction records, customer profiles, risk assessments, and regulatory filings. Data standardization helps unify formats across disparate systems, enhances fraud detection accuracy, and simplifies compliance reporting, reducing operational risks.

Environment & Energy

Environmental monitoring and energy management rely on sensor data, weather reports, and consumption metrics from various sources. Standardizing this data ensures reliable analysis for forecasting demand, optimizing resource allocation, and supporting sustainability initiatives.

Manufacturing

Manufacturers gather data from production lines, quality control, supply chains, and equipment sensors. Standardized data facilitates real-time monitoring, predictive maintenance, and quality assurance, leading to improved efficiency and reduced downtime.

Retail

Retailers collect customer data, sales transactions, inventory levels, and supplier information from multiple channels. Standardization enables personalized marketing, accurate inventory management, and seamless integration across online and offline platforms, enhancing the overall customer experience.

By adopting data standardization, these industries can overcome data silos, improve operational efficiency, and make more informed decisions, paving the way for innovation and growth in an increasingly data-centric world.

Common Challenges in Standardizing Data

While data standardization is essential for ensuring data quality and consistency, organizations often face several hurdles during its implementation. Understanding these challenges can help in building effective strategies to overcome them:

1. Diverse Data Sources and Formats

Organizations typically collect data from numerous systems, each with its own structure and format. Integrating and standardizing such heterogeneous data requires significant effort to reconcile differences and create a unified view.

2. Inconsistent Data Quality

Data often comes with errors, missing values, or outdated information. Standardizing data with underlying quality issues can propagate mistakes, making it crucial to cleanse and validate data before standardization.

3. Complex Data Relationships

In many cases, data elements are interconnected in complex ways. Ensuring that standardization preserves these relationships without loss of meaning or context adds an extra layer of difficulty.

4. Evolving Business Rules

Business processes and data requirements evolve over time. Maintaining standardized data while adapting to changing rules and definitions demands ongoing governance and flexibility in standardization approaches.

5. Resistance to Change

Standardizing data may require altering established workflows and systems. This can face resistance from stakeholders who are accustomed to legacy processes or skeptical about the benefits of standardization.

6. Scalability and Performance Issues

As data volumes grow, standardization processes must scale with them. Handling large datasets without compromising performance requires robust infrastructure and optimized algorithms.

7. Lack of Clear Standards

In some domains, agreed-upon data standards may be absent or fragmented. Creating consistent standards from scratch or aligning with external frameworks can be time-consuming and complex.

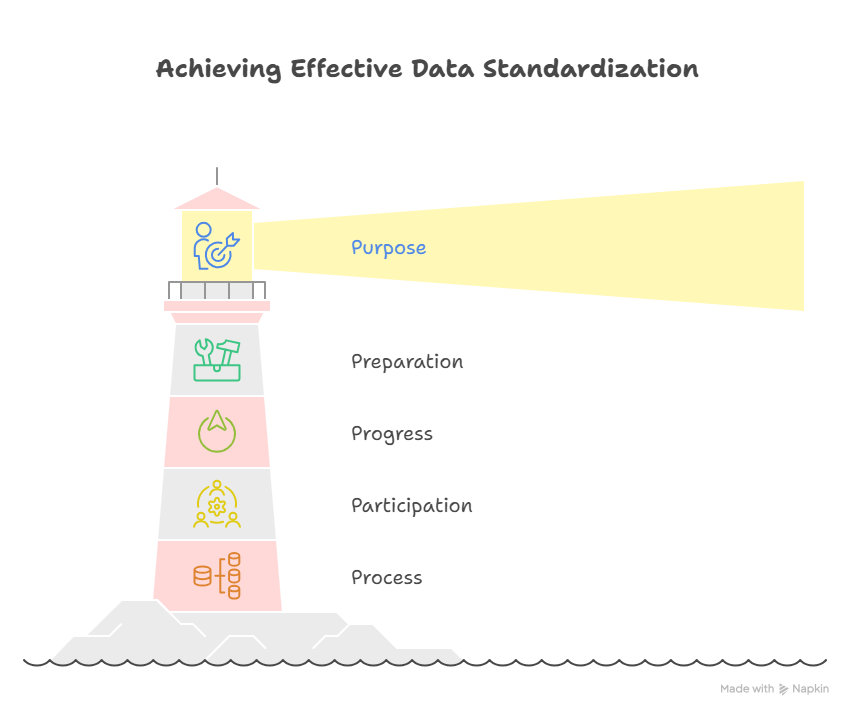

The 5P Framework for Effective Data Standardization

The 5P Framework offers a practical lens to guide organizations through their data standardization journey:

1. Purpose

Start with a clear understanding of why you’re standardizing data. Whether it’s to improve data quality, enable system integration, support compliance, or power analytics, defining the end goal ensures alignment and prioritization. Purpose acts as the foundation that shapes all decisions and measures success.

2. Policies

Establish governance policies that define standards, ownership, and accountability. These should include naming conventions, format rules, validation protocols, and update cycles. Strong data policies prevent inconsistencies from creeping in and create a reliable structure for teams to follow.

3. People

Effective data standardization requires the involvement of the right stakeholders including data stewards, business analysts, IT teams, and end users. Cross-functional collaboration ensures that standards are practical, adopted widely, and supported across departments.

4. Processes

Build repeatable and scalable workflows for implementing and maintaining data standards. This includes processes for data ingestion, transformation, validation, and exception handling. Automating parts of the process can enhance speed and consistency.
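A repeatable workflow can be sketched as an ordered list of steps, with failing records routed to an exception queue instead of halting the run. The step functions and field names below are hypothetical placeholders for real transformation and validation rules.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]
Step = Callable[[Record], Record]


def strip_strings(record: Record) -> Record:
    """Transformation step: trim surrounding whitespace from every string field."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def require_country(record: Record) -> Record:
    """Validation step: reject records with no country value."""
    if not record.get("country"):
        raise ValueError("missing country")
    return record


def run_pipeline(
    records: List[Record], steps: List[Step]
) -> Tuple[List[Record], List[Tuple[Record, str]]]:
    """Apply each step in order; collect clean records and (input, reason) exceptions."""
    clean, exceptions = [], []
    for original in records:
        current = original
        try:
            for step in steps:
                current = step(current)
            clean.append(current)
        except ValueError as err:
            # Exception handling: keep the untouched input plus the reason for review.
            exceptions.append((original, str(err)))
    return clean, exceptions


clean, exceptions = run_pipeline(
    [{"name": " Ada ", "country": "UK"}, {"name": "Bob", "country": "  "}],
    [strip_strings, require_country],
)
```

Keeping the original input in the exception queue lets data stewards see exactly what arrived, not a half-transformed version.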

5. Platforms

Select the right tools and technologies that support your standardization efforts. This includes ETL tools, data catalogs, validation engines, and master data management (MDM) platforms. Platforms should be flexible enough to evolve with changing data and business needs.

Conclusion

From overcoming integration challenges to enabling AI, analytics, and automation, standardized data lays the foundation for smarter decisions and faster growth. As companies strive to turn data into a true competitive advantage, the use cases above show that success begins with structure.

At Quinnox, our AI & Data services are purpose-built to help businesses standardize, govern, and optimize their data with precision. Using modern tools, domain expertise, and AI-driven automation, we transform disjointed data into unified insights, making your data not just usable, but valuable.

FAQs Related to Data Standardization

Why is data standardization important?

It ensures consistency across data sources, reduces errors, improves integration, and enables accurate analysis and informed decision-making.

What is the difference between data standardization and data normalization?

Standardization focuses on uniform format and meaning; normalization adjusts numerical values to a common scale or restructures databases to reduce redundancy.

Does logistic regression require standardized data?

Not necessarily, but standardizing features can improve optimization and convergence speed during model training.

What are the main rules used in data standardization?

Taxonomy rules: Restrict values to predefined sets.

Reshape rules: Adjust data structure for target systems.

Semantic rules: Ensure data aligns with business context and meaning.

Can data standardization be automated?

Yes, modern tools like MDM platforms and ETL pipelines support rule-based and AI-assisted automation to streamline the process.