Artificial Intelligence has moved from experimentation to execution. Enterprises are no longer debating whether to invest in AI, but how to make it deliver business value at scale. Yet despite record spending, most organizations struggle to achieve enterprise-wide adoption. According to McKinsey, fewer than 20% of AI initiatives move beyond pilot projects, with inadequate infrastructure cited as one of the top roadblocks.

The reason is clear: algorithms alone do not drive business outcomes. Models may perform well in test environments but falter in production if the infrastructure cannot handle massive datasets, rapid retraining cycles, or low-latency decision-making. Poorly designed infrastructure leads to spiraling costs, failed deployments, and stalled innovation.

Meanwhile, competitors that build strong AI foundations are seeing measurable advantages—faster time-to-market, reduced risk, improved compliance, and higher ROI. For executives, the question is not whether AI infrastructure matters, but how to choose the right infrastructure that aligns with business priorities today while scaling for tomorrow.

This blog is designed as a comprehensive AI infrastructure guide. We will explore how to evaluate options, compare deployment models, and learn from real-world examples across industries. We’ll also walk through a client case study that shows the business value of strategic investments in infrastructure. Finally, we’ll share a decision-making framework for CTOs and close with practical takeaways.

How to Choose the Right AI Infrastructure?

Choosing AI infrastructure requires a structured approach. It is about much more than hardware or cloud contracts; it involves aligning technical decisions with business goals, regulatory requirements, and future growth plans.

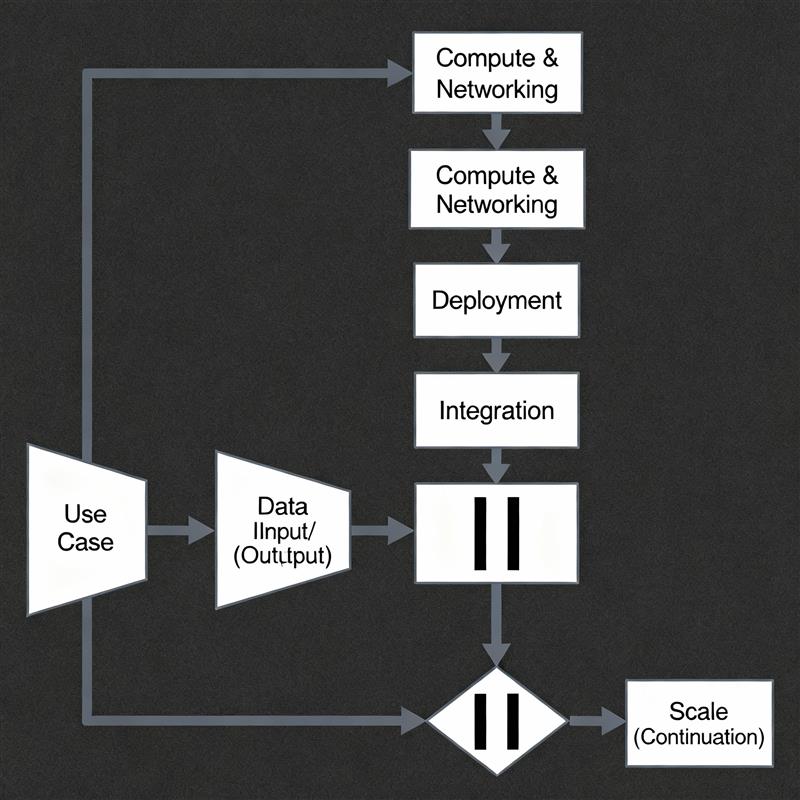

1. Clarify the Use Case

Not all AI workloads are created equal. The infrastructure needed to train a generative AI model differs dramatically from the requirements of running real-time inference in production.

- Large-scale deep learning and LLM training demand clusters of GPUs or TPUs optimized for parallel processing.

- Lightweight inference tasks such as chatbot responses can often run efficiently on CPUs.

- Real-time scenarios like fraud detection or autonomous driving require ultra-low latency to deliver immediate results.

By mapping workload characteristics upfront, enterprises avoid over-investing in expensive compute that sits idle or under-investing in systems that cannot meet demand.
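As a rough illustration, the mapping described above can be written down as a few explicit rules. This is a minimal sketch rather than a sizing tool: the `Workload` fields, the compute class names, and the 50 ms latency cut-off are all assumptions made for the example, and a real assessment would weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    phase: str             # "training" or "inference"
    model_scale: str       # "small" or "large"
    max_latency_ms: float  # latency budget the business can tolerate


# Hypothetical cut-off for "ultra-low latency"; tune it to your own use case.
REALTIME_BUDGET_MS = 50.0


def suggest_compute(w: Workload) -> str:
    """Return a coarse compute class for a workload (illustrative rules only)."""
    if w.phase == "training" and w.model_scale == "large":
        # Large-scale deep learning and LLM training: parallel GPU/TPU clusters.
        return "accelerator-cluster"
    if w.phase == "inference" and w.max_latency_ms <= REALTIME_BUDGET_MS:
        # Real-time scenarios such as fraud detection.
        return "low-latency-serving"
    if w.phase == "inference" and w.model_scale == "small":
        # Lightweight inference such as chatbot responses.
        return "cpu"
    # Everything else: start with one accelerator and measure before scaling.
    return "single-accelerator"


if __name__ == "__main__":
    for w in (
        Workload("llm-pretraining", "training", "large", float("inf")),
        Workload("support-chatbot", "inference", "small", 800.0),
        Workload("fraud-scoring", "inference", "small", 20.0),
    ):
        print(w.name, "->", suggest_compute(w))
```

Writing the rules down, even this crudely, makes it easier to spot workloads that have been assigned expensive compute by default rather than by need.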

2. Evaluate Data Requirements

AI is fueled by data, but the infrastructure must match the scale and sensitivity of the data being processed.

- High-volume enterprises such as e-commerce or IoT-driven industries require scalable storage and high-throughput networks.

- Highly regulated sectors such as banking and healthcare may require local data residency and strict access controls.

- Hybrid data strategies are becoming common, where sensitive data stays on-premises while non-sensitive workloads move to the cloud.

Equally important is efficient management of data. Enterprises that streamline pipelines, maintain clean and curated datasets, and reduce duplication unlock significant performance advantages. Creating a single source of truth for multiple business units reduces redundancy, accelerates training cycles, and improves inference accuracy. Efficient data management is increasingly seen as a prerequisite for enabling AI at scale (source).

3. Select Compute, Networking, and Storage Resources

Modern AI infrastructure must be viewed as a stack of layers that extend beyond compute. Each layer plays a critical role in efficiency:

- Compute Hardware: CPUs for general workloads, GPUs for deep learning, and TPUs or other accelerators for large-scale training. Newer Data Processing Units (DPUs) can offload networking, storage, and security tasks from CPUs/GPUs, freeing them to focus on model training and inference.

- Networking: AI workloads often move vast amounts of data between compute and storage. High-bandwidth, low-latency interconnects like InfiniBand or high-speed Ethernet (200G/400G) prevent bottlenecks during distributed training.

- Storage Architecture: Tiered storage ensures efficiency—fast NVMe/flash for active model training, economical storage for archival data, and vector databases for embeddings and similarity searches (important for retrieval-augmented generation use cases).

By designing with all three layers in mind, enterprises avoid the trap of focusing only on compute power while overlooking data transfer and storage bottlenecks.
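To make the vector database point in the storage layer more concrete, the sketch below shows the core operation such a system performs: ranking stored embeddings by cosine similarity to a query embedding. It is a brute-force toy in plain Python with made-up two-dimensional vectors; production vector databases use approximate indexes and far higher dimensions, and the function names here are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy embeddings; real ones come from an embedding model.
    index = {"doc-a": [1.0, 0.0], "doc-b": [0.0, 1.0], "doc-c": [1.0, 1.0]}
    print(top_k([1.0, 0.0], index, k=2))
```

Because every query touches many stored vectors, this workload is sensitive to both storage latency and network bandwidth, which is why it belongs in the same planning conversation as compute.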

4. Choose Deployment Models

The three dominant models – on-premises, cloud, and hybrid – each have advantages and trade-offs. Enterprises should assess them not only by cost, but also by scalability, compliance, and integration ease.

- On-premises – maximum control, lowest latency, but high capex and long lead times

- Cloud – elastic scalability, pay-as-you-go, but compliance and long-term cost predictability are challenges

- Hybrid – balance workloads strategically, mix compliance with flexibility – though governance adds complexity

Trade-offs: Cost, Scalability, Latency, Compliance

- Cost: On-prem is capital-intensive, cloud shifts costs to operations, hybrid balances both.

- Scalability: Cloud provides near-infinite scalability, on-prem is limited, hybrid enables selective scale.

- Latency: On-prem minimizes latency, cloud can introduce variability, hybrid enables optimization.

- Compliance: On-prem gives maximum control, cloud compliance depends on certifications, hybrid offers flexibility with oversight.
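One way to turn these trade-offs into a discussion aid is a simple weighted scorecard. The sketch below is illustrative only: the 1 to 5 ratings are placeholder assumptions loosely based on the qualitative statements above, not benchmarks, and each organization should replace both the ratings and the weights with its own assessment.

```python
# Placeholder ratings (1 = weak, 5 = strong). These are assumptions for
# illustration, not measured values.
SCORES = {
    "on_prem": {"cost": 2, "scalability": 2, "latency": 5, "compliance": 5},
    "cloud":   {"cost": 3, "scalability": 5, "latency": 3, "compliance": 3},
    "hybrid":  {"cost": 3, "scalability": 4, "latency": 4, "compliance": 4},
}


def rank_models(weights):
    """Rank deployment models by weighted average score, best first."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    ranked = []
    for model, scores in SCORES.items():
        weighted = sum(scores[c] * weights.get(c, 0) for c in scores) / total
        ranked.append((model, round(weighted, 2)))
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


if __name__ == "__main__":
    # A regulated business that cares most about compliance and latency.
    print(rank_models({"cost": 1, "scalability": 1, "latency": 3, "compliance": 5}))
    # A fast-growing business that cares most about scalability.
    print(rank_models({"cost": 2, "scalability": 5, "latency": 1, "compliance": 1}))
```

The value of the exercise lies less in the final number than in forcing stakeholders to agree on the weights.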

5. Plan for Integration

AI infrastructure cannot be a silo. It must integrate with:

- Existing IT ecosystems (ERP, CRM, data lakes).

- APIs and microservices architectures.

- AI and data services for training, testing, and inference pipelines.

Integration ensures that models can be trained, deployed, and monitored seamlessly without disrupting operations.

6. Future-Proof the Infrastructure

AI evolves faster than most technology domains. Enterprises that design infrastructure with modularity and automation in mind will adapt more easily to new frameworks and workloads.

- Modularity: enables swapping components without full rebuilds.

- Automation: ensures continuous retraining, deployment, and monitoring.

- Alignment with business strategy: ensures that infrastructure can support emerging demands like AI and ML for generative applications, digital twins, or multimodal AI.

A Comparison of AI Infrastructure Models

| Model | Pros | Cons | Best For |

|---|---|---|---|

| On-Premises | Maximum control, low latency, full compliance | High upfront cost, long setup, heavy maintenance | Highly regulated industries |

| Cloud | Elastic scale, rapid deployment, OPEX model | Unpredictable costs, provider-dependent compliance | Startups, scale-ups, variable demand |

| Hybrid | Balance cost, scale, compliance | Complex governance & integration | Enterprises with mixed workloads |

Real-World Examples of AI Infrastructure in Action

AI infrastructure decisions are not theoretical. Enterprises across industries are already seeing tangible business results when they align infrastructure with strategic goals.

Here are several case studies that illustrate how organizations are unlocking value through the right AI foundations:

Example #1: Environmental Services: Smarter Waste Management

A North American environmental services company deployed AI-enabled smart cameras in waste collection trucks to analyze whether dumpsters were overloaded. Images captured during collection were processed in the cloud using deep learning pipelines and location tagging.

The system not only brought transparency to billing but also reduced revenue leakage by $2 million annually. By integrating IoT, cloud compute, and automated data pipelines, the company improved driver efficiency, safety, and customer trust.

Example #2: Fragrance & Flavours: Generative AI for Product Innovation

A global fragrance manufacturer faced bottlenecks in manually matching customer briefs to internal formulation libraries.

By building a GenAI-powered recommendation engine on Azure, integrated with its product lifecycle systems, the company boosted response efficiency by 60 to 70 percent. The system not only increased speed but also ensured regulatory compliance by automatically cross-referencing formulations with legal requirements.

This case shows how modular cloud infrastructure enables adoption of generative AI in highly specialized domains.

Example #3: Global Banking: Compliance at Scale

For a leading global bank, regulatory compliance was a manual, error-prone process that consumed thousands of hours each year.

The bank implemented an AI-powered compliance platform built on AWS, using LangChain and SageMaker to automate document ingestion, tagging, and summarization. The result was faster compliance reviews, reduced human error, and improved regulatory agility.

By standardizing on cloud-based infrastructure with scalable pipelines, the bank transformed compliance into a competitive advantage.

Decision-Making Framework for CTOs

Building AI infrastructure isn’t a “one-size-fits-all” exercise. CTOs need a structured approach that balances business impact, technical feasibility, and long-term resilience. Here’s a deeper look at each stage of the framework:

1. Assess Current Maturity

Before making investments, benchmark the organization’s current infrastructure against AI ambitions.

- Key Questions: Can existing systems support model training? Are there bottlenecks in data access, storage, or compute?

- Practical Step: Run a maturity assessment that evaluates hardware capacity, data pipelines, governance processes, and team readiness.

- Why it matters: Without a baseline, it’s easy to overbuild or underinvest. For example, deploying GPU clusters makes little sense if data pipelines are still siloed or inconsistent.

2. Define Cost Models

Total cost of ownership (TCO) must balance capital expenditure (CapEx) for on-prem hardware and operational expenditure (OpEx) for cloud services.

- Considerations: On-prem offers control but locks up capital; cloud offers elasticity but can become unpredictable over time.

- Practical Step: Model costs over a 3–5 year horizon, factoring in growth of workloads, retraining cycles, and storage.

- Why it matters: Many AI pilots stall because infrastructure costs scale faster than expected. A transparent cost model aligns CFO and CTO priorities.
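A first-pass cost model does not need to be elaborate. The sketch below compares cumulative on-prem and cloud spend over a multi-year horizon under two simplifying assumptions: on-prem costs are an upfront CapEx plus a flat annual OpEx, and cloud spend compounds at a fixed annual growth rate as workloads expand. All figures in the usage example are invented placeholders, and the function names are hypothetical.

```python
def on_prem_tco(capex, annual_opex, years):
    """Cumulative on-prem cost: upfront hardware plus flat yearly running cost."""
    return capex + annual_opex * years


def cloud_tco(first_year_spend, annual_growth, years):
    """Cumulative cloud cost when yearly spend compounds at annual_growth."""
    return sum(first_year_spend * (1 + annual_growth) ** y for y in range(years))


def breakeven_year(capex, annual_opex, first_year_spend, annual_growth, horizon=5):
    """First year in which cumulative cloud cost exceeds on-prem, or None."""
    for years in range(1, horizon + 1):
        if cloud_tco(first_year_spend, annual_growth, years) > on_prem_tco(
            capex, annual_opex, years
        ):
            return years
    return None


if __name__ == "__main__":
    # Placeholder figures purely for illustration.
    year = breakeven_year(
        capex=1_000_000,
        annual_opex=200_000,
        first_year_spend=400_000,
        annual_growth=0.30,
    )
    print("Cloud becomes more expensive in year:", year)
```

Even a model this simple surfaces the question that matters to both CFO and CTO: how sensitive is the answer to the assumed growth rate?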

3. Align Data Strategy

Infrastructure is only as effective as the data it supports.

- Governance: Define clear ownership, access rights, and lineage for datasets.

- Compliance: Address residency, privacy (GDPR, HIPAA), and sector-specific mandates early.

- Pipelines: Standardize ingestion, transformation, and storage practices to avoid duplication.

- Why it matters: Clean, governed, and compliant data pipelines reduce rework, lower risk, and improve model accuracy. Without this alignment, AI efforts often collapse under regulatory or operational pressure.

4. Plan for the AI Roadmap

Infrastructure choices must anticipate emerging demands.

- Generative AI: LLMs and multimodal models require high-performance compute and vector databases for RAG.

- Digital Twins: Demand scalable simulation environments and high-bandwidth networking.

- Multimodal AI: Involves combining text, images, audio, and video, pushing storage and compute requirements higher.

- Why it matters: CTOs who design only for today’s pilot use case risk constant rebuilds. Aligning infrastructure with the 3–5 year roadmap ensures longevity and flexibility.

5. Pilot and Iterate

The most successful AI programs start small but scale fast.

- Pilot: Select high-value, low-risk use cases to validate the infrastructure.

- Measure: Track latency, throughput, accuracy, and business KPIs.

- Scale: Once validated, expand the same infrastructure patterns across other workloads.

- Why it matters: This avoids “big bang” failures and allows infrastructure investments to pay back quickly. For example, a bank may begin with fraud detection pilots before scaling to credit scoring and customer personalization.
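The "Measure" step can be made concrete with a small pass/fail check on pilot telemetry. The sketch below computes a nearest-rank 95th-percentile latency and average throughput, then compares them with targets. The thresholds and sample values are assumptions for illustration; accuracy and business KPIs would be tracked alongside them in practice.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def pilot_passes(latencies_ms, requests, window_s, p95_budget_ms, min_rps):
    """True if p95 latency and average throughput both meet their targets."""
    p95 = percentile(latencies_ms, 95)
    throughput = requests / window_s  # average requests per second
    return p95 <= p95_budget_ms and throughput >= min_rps


if __name__ == "__main__":
    # Invented sample data: four latency readings, 400 requests in 10 seconds.
    ok = pilot_passes(
        latencies_ms=[10, 20, 30, 40],
        requests=400,
        window_s=10,
        p95_budget_ms=45,
        min_rps=30,
    )
    print("Pilot meets infrastructure targets:", ok)
```

Agreeing on these targets before the pilot starts keeps the scale-up decision objective.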

Conclusion:

AI infrastructure is more than technology; it is a foundation for business competitiveness. Organizations that invest wisely will accelerate innovation, comply with regulations, and achieve sustainable ROI. Those that delay risk stalled projects and competitive disadvantage.

Whether you are a fast-growing startup looking to scale AI pilots into production, or an established enterprise seeking to modernize infrastructure and improve model performance, the right approach makes all the difference. At Quinnox, our Gen AI and Data-Driven Intelligence services are designed to help organizations build infrastructure that is modular, efficient, and future-ready.

If your organization is ready to turn AI infrastructure into a catalyst for innovation and measurable outcomes, connect with our experts today. Together, we can help you design and implement AI foundations that deliver both agility and competitive advantage.

Reach our data experts and leverage our Quinnox AI (QAI) Studio today to start your transformation journey!

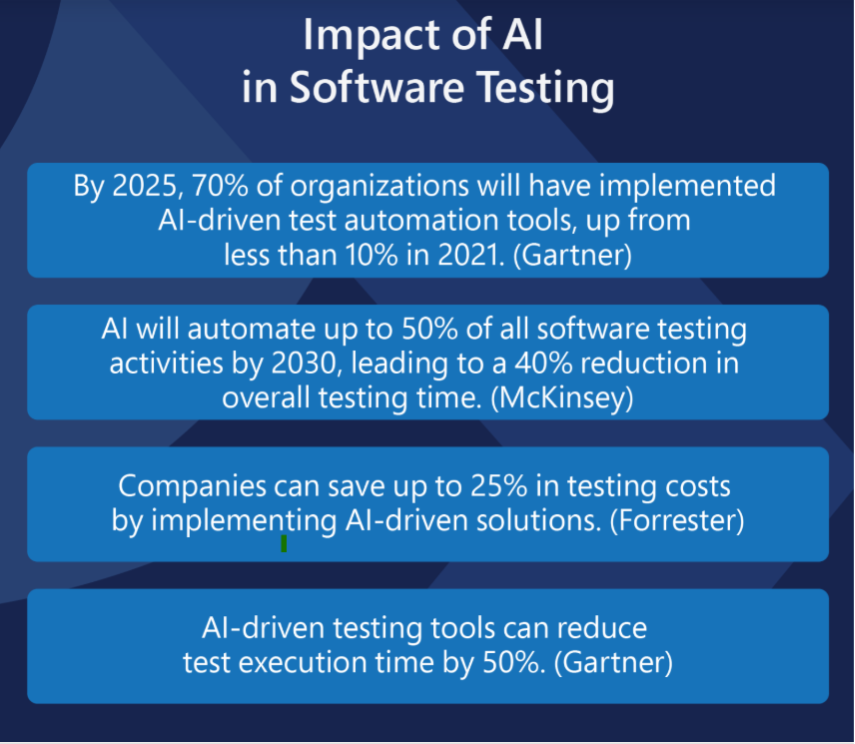

Beyond executing tests, AI agents generate rich data on performance trends, failure patterns, and user interactions. These insights empower development teams to make informed decisions, prioritize fixes effectively, and continuously elevate software quality.

How to Use AI Testing Agents Effectively (Tips and Strategies)

Using AI testing agents effectively means weaving their capabilities into the fabric of your development process with intention and care. When balanced with human insight, these agents can transform testing into a smarter, more agile function – one that not only catches bugs but anticipates them.

Here are some practical approaches to get the most from AI testing agents:

1. Define Clear Objectives and Boundaries

Begin by outlining what success looks like for your testing efforts. AI agents thrive when given specific goals, whether it’s reducing test cycle time, improving coverage, or catching elusive bugs early. Establishing boundaries helps these agents focus on meaningful tasks, avoiding wasted cycles on irrelevant or redundant checks.

2. Integrate AI Agents into Existing Workflows

Rather than replacing established processes outright, AI testing agents should augment them. Seamless integration with current tools and pipelines ensures that AI-generated insights complement human expertise, enabling faster feedback loops without disrupting the team’s rhythm.

3. Continuously Train and Update Agents

The strength of AI lies in learning from data, so feeding agents with recent test results, application updates, and user behavior patterns is essential. This ongoing training enables them to evolve alongside the software, maintaining accuracy and relevance even as the codebase shifts.

4. Combine AI Insights with Human Judgment

AI agents excel at pattern recognition and volume, but they lack contextual nuance. Encourage testers to review AI findings critically, using their experience to validate issues and interpret subtle signs that might indicate deeper problems. This collaboration elevates the quality of defect detection and prioritization.

5. Prioritize Test Scenarios with Business Impact

Not all tests carry equal weight. Use AI to identify high-risk areas, such as features with frequent changes or critical business functions, and prioritize those for deeper scrutiny. This targeted approach maximizes value by aligning testing efforts with what matters most to users and stakeholders.

6. Monitor and Measure AI Performance Regularly

Implement metrics such as defect detection rates, false positives, and time saved to track how well AI agents are performing. Such periodic reviews help uncover blind spots or inefficiencies, allowing continuous refinement of AI strategies.
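These metrics are straightforward to compute once findings have been triaged by a human. The sketch below assumes you can count three things per review period: issues the agent flagged that were real defects, issues it flagged that were not, and real defects it missed. The function names and the sample counts are hypothetical.

```python
def detection_rate(true_positives, missed_defects):
    """Share of real defects that the agent caught."""
    total_defects = true_positives + missed_defects
    return true_positives / total_defects if total_defects else 0.0


def false_positive_share(true_positives, false_positives):
    """Share of the agent's flagged issues that were not real defects."""
    total_flagged = true_positives + false_positives
    return false_positives / total_flagged if total_flagged else 0.0


if __name__ == "__main__":
    # Invented counts for one review period.
    print("Detection rate:", detection_rate(true_positives=45, missed_defects=5))
    print("False positive share:", false_positive_share(true_positives=45, false_positives=15))
```

Tracking both numbers together matters: an agent can raise its detection rate simply by flagging more, at the cost of more noise for the team.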

7. Foster a Culture Open to AI-Driven Change

Successful adoption depends as much on people as on technology. Encourage curiosity and experimentation within your team, creating an environment where testers see AI agents as collaborators rather than competitors. Training and transparent communication are key to building trust.

Common Challenges and How to Overcome Them

AI agents hold immense promise for transforming workflows, yet integrating them effectively is not without obstacles. Organizations frequently encounter challenges that can limit the impact of these intelligent tools if not addressed proactively. Understanding these hurdles and adopting practical strategies to navigate them is essential for unlocking AI’s full potential.

Challenge 1: "The AI Finds Too Many Issues across Too Many Areas"

Comprehensive AI testing can discover hundreds of issues across functional, performance, security, and accessibility domains, overwhelming teams.

Solution: Implement risk-based prioritization. Configure agents to focus first on issues that impact core business functions, security compliance requirements, or legal accessibility obligations.
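A minimal sketch of what risk-based prioritization could look like is shown below. The `Issue` fields and the scoring weights are assumptions chosen for the example, not settings from any particular testing platform: severity is boosted when an issue touches a core business function or a compliance obligation, and the list is then sorted by the resulting score.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    severity: int                # 1 (low) to 5 (critical)
    affects_core_function: bool  # touches a core business function
    compliance_related: bool     # security compliance or legal accessibility


def risk_score(issue: Issue) -> int:
    """Severity plus fixed boosts for business and compliance impact (illustrative weights)."""
    score = issue.severity
    if issue.affects_core_function:
        score += 5
    if issue.compliance_related:
        score += 5
    return score


def prioritize(issues):
    """Return issues ordered from highest to lowest risk score."""
    return sorted(issues, key=risk_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Issue("Tooltip misaligned", 2, False, False),
        Issue("Checkout fails on retry", 4, True, False),
        Issue("Login form missing labels", 3, True, True),
    ]
    for issue in prioritize(backlog):
        print(risk_score(issue), issue.title)
```

With a scheme like this, hundreds of findings collapse into a short list the team can act on first.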

Challenge 2: "Different QA Disciplines Have Conflicting Requirements"

Performance optimizations might affect accessibility, security measures might affect usability, and functional changes might break existing tests.

Solution: Use AI agents’ cross-disciplinary analysis capabilities. Modern agents can identify these conflicts and suggest balanced solutions that maintain quality across all dimensions.

Challenge 3: "Our Team Lacks Expertise to Validate AI Results in All Areas"

QA teams might be strong in functional testing but lack deep knowledge in security assessment or accessibility compliance to validate AI findings.

Solution: Partner with AI agents for education. Many 2025 platforms provide detailed explanations of why issues were flagged, helping teams learn while validating results.

Challenge 4: "Integration with Specialized Tools Is Complex"

A comprehensive strategy for QA often requires multiple specialized tools that don’t integrate well with each other or with AI agents.

Solution: Prioritize AI agents with broad integration capabilities or those that provide built-in functionality across multiple QA disciplines, reducing tool sprawl.

Challenge 5: "Maintaining Quality Standards across All Disciplines Is Overwhelming"

Keeping up with evolving standards in accessibility (WCAG updates), security (new vulnerability types), performance (changing user expectations), and functionality (business requirement changes) is difficult.

Solution: Choose AI agents that automatically update their knowledge bases with current standards and best practices across all QA disciplines.

Future of AI Agents in Software QA

The rapid evolution of software development methodologies, the explosion of data, and the increasing complexity of applications have pushed traditional QA methods to their limits. AI agents are emerging as transformative enablers, poised to reshape how quality is assured—making testing smarter, faster, and more aligned with business goals.

At the forefront of this transformation is Qyrus, our end-to-end AI-powered test automation platform that stands out as a visionary enabler in this evolving space. It combines AI-driven test automation with intelligent analytics, providing a unified solution that bridges the gap between manual testing, automation, and AI-powered insights.

Ready to elevate your testing to the next level? Explore our software testing solutions and experience the future of quality assurance today.

FAQs About AI Infrastructure

Future-proofing requires designing with flexibility, scalability, and automation in mind. Start with modular architectures where compute, networking, and storage can be upgraded independently. Build automation into pipelines so data ingestion, training, testing, and deployment are continuous and require minimal manual intervention. Adopt hybrid and multi-cloud strategies that prevent vendor lock-in and ensure agility as business needs evolve. Finally, align infrastructure with long-term enterprise strategy, ensuring it can support emerging workloads like generative AI, multimodal applications, and real-time analytics.

Examples include centralized AI platforms that unify tools across business units, smart cities using digital twins to model traffic and climate resilience, banks deploying hybrid setups for real-time fraud detection, and hospitals combining GPU clusters with secure data lakes for diagnostics and drug discovery. These illustrate how infrastructure choices vary across industries but always tie back to scalability, compliance, and performance.

By matching workloads to the right environment, organizations can avoid overpaying for idle compute or under-investing in critical capacity. Hybrid models allow sensitive or predictable workloads to stay on-premises while elastic cloud resources handle spikes, balancing cost and performance.

Yes, in certain scenarios. Serverless works well for lightweight inference tasks such as chatbots, recommendation systems, or API-driven predictions, where workloads are event-based and scaling is automatic. However, for training large models or managing data-heavy workloads, serverless alone is insufficient. These require dedicated compute clusters, high-bandwidth networking, and specialized accelerators. The future likely involves a hybrid model, where serverless infrastructure handles inference at scale while dedicated infrastructure supports heavy training and data preparation.

Yes, but at the right scale. SMBs can start with cloud-first or serverless approaches to test ideas quickly without heavy upfront costs. As AI maturity grows, modular and scalable infrastructure can be added to support larger, more complex workloads.

CTOs should evaluate infrastructure through business alignment, compute and networking needs, data management, cost models, scalability, and compliance. Balancing these ensures infrastructure can handle current pilots while supporting enterprise-scale adoption in the future.