Remember when every software release felt like a gamble? Updates often carried the risk of breaking existing functionality, slowing delivery, or frustrating users. Human-driven testing, though invaluable, struggled to keep pace with the speed and complexity of modern applications.

What if you could anticipate issues before they surfaced, automate repetitive checks, and deliver flawless user experiences faster than ever? That’s exactly what Artificial Intelligence (AI) brings to software testing.

AI isn’t replacing human creativity—it’s augmenting it. Think of AI as a tireless partner that can analyze massive datasets, spot hidden patterns, and execute complex tests with precision. The result: fewer bugs, faster releases, and more time for teams to focus on innovation.

In today’s digital-first economy, where a single glitch can trigger financial and reputational damage, reliable software is no longer optional; it’s mission-critical.

Let’s explore how AI is reshaping testing and uncover the top 10+ benefits of AI in software testing.

What Is AI Model Testing?

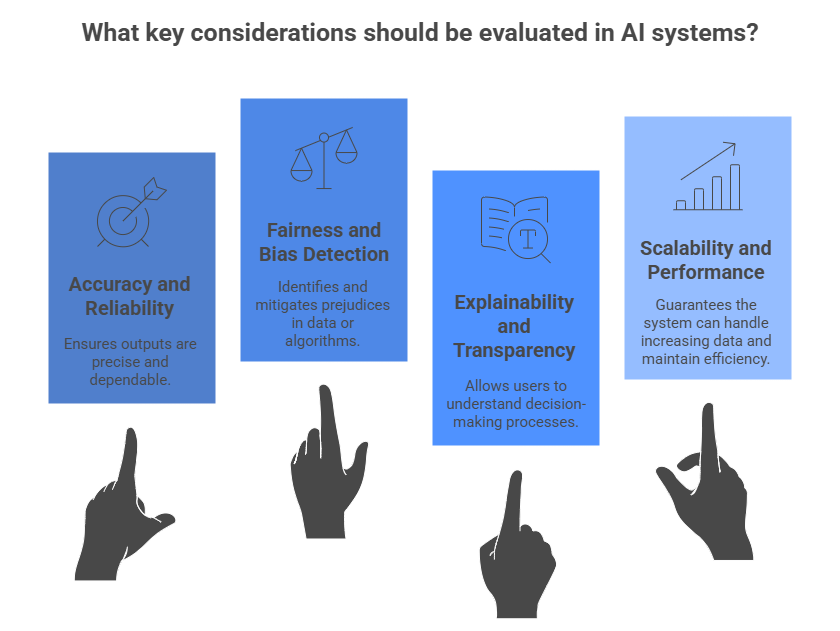

Before we dive into the benefits, let’s clarify what we mean by “AI model testing”. AI model testing goes beyond checking if a feature works. It evaluates the “brain” of an AI system – its algorithms, data inputs, and learning process. This includes ensuring accuracy, fairness, robustness, and reliability.

For example, testing an AI-powered recommendation engine means not just verifying that results display correctly, but also ensuring recommendations are relevant, unbiased, and effective.

Because AI learns from data (rather than following explicit rules), specialized testing is required to catch issues like bias, fragility, and drift. This ensures AI-driven applications remain trustworthy and effective across real-world scenarios.

Types of AI Model Testing

The diverse nature of AI models calls for multiple testing approaches. Just as a carpenter uses different tools for different tasks, a robust AI testing strategy requires a varied toolkit. To make these concepts easier to visualize and present, we can group the key types of AI model testing into three core pillars: Performance & Reliability, Ethics & Trust, and Integration & Security.

1. Performance & Reliability

This pillar focuses on ensuring the AI model functions correctly, efficiently, and consistently under various conditions.

a. Functional Testing

Ensures the model performs its intended tasks correctly. For example, an image classification model should consistently assign the right labels to input images.

b. Performance Testing

Evaluates speed, scalability, and resource efficiency. This testing determines how quickly the model processes data, whether it can handle high request volumes, and if it maintains performance under stress.

c. Accuracy Testing

Measures how well the model’s predictions align with the ground truth. It is typically assessed on an unseen (held-out) dataset using metrics such as precision, recall, and F1-score.
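To make these metrics concrete, here is a minimal sketch in plain Python. It computes precision, recall, and F1-score for a binary classifier from held-out labels. The label lists are toy values and the function name `precision_recall_f1` is illustrative. Libraries such as scikit-learn provide equivalent `precision_score`, `recall_score`, and `f1_score` functions.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1-score for one positive class of a classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Ground-truth labels from a held-out test set vs. model predictions (toy values)
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```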

d. Robustness Testing

Assesses the model’s reliability under unexpected or imperfect conditions—such as noisy data, missing values, or adversarial attacks. This ensures stability in real-world, unpredictable environments.

2. Ethics & Trust

This pillar addresses the critical human and societal aspects of AI, ensuring models are fair, transparent, and accountable.

a. Bias and Fairness Testing

Examines whether the model delivers equitable outcomes across different demographic groups. It identifies and helps mitigate discriminatory patterns that may exist in predictions.

b. Explainability Testing (XAI)

Addresses the “black box” challenge by uncovering why a model makes specific decisions. This is critical for building trust, debugging, and meeting regulatory requirements in sensitive fields like healthcare or finance.

3. Integration & Security

This pillar ensures the AI model can operate securely within a broader system and interact seamlessly with other components.

a. Security Testing

Identifies vulnerabilities that attackers could exploit, including data poisoning or model inversion attacks. Security testing safeguards both the model and its training data.

b. Interoperability Testing

Validates that the AI model integrates seamlessly with other systems, APIs, and platforms, which is crucial for deployment in complex, real-world ecosystems.

Each of these testing types plays a crucial role in building confidence in AI systems and ensuring they deliver on their promise responsibly and effectively.

Benefits of AI in Software Testing

The integration of AI into software testing isn’t just an incremental improvement; it’s a paradigm shift that unlocks a multitude of profound benefits. These advantages translate directly into higher quality software, faster release cycles, reduced costs, and ultimately, a more competitive edge for organizations.

Here are 14 top benefits of leveraging AI in software testing:

1. Enhanced Accuracy and Precision:

AI algorithms can analyze vast datasets and identify subtle patterns that human testers might miss. This leads to more precise bug detection and a higher level of accuracy in test execution, significantly reducing the likelihood of critical defects reaching production.

2. Accelerated Test Cycles:

One of the most significant advantages is the speed at which AI can operate. AI-powered testing tools can execute tests, analyze results, and generate reports far quicker than manual methods, drastically shortening test cycles and enabling faster time-to-market.

3. Improved Test Coverage:

AI can intelligently explore various test paths and scenarios, including edge cases that human testers might overlook. This leads to more comprehensive test coverage, ensuring that a wider range of functionalities and potential vulnerabilities are addressed.

4. Reduced Human Error:

Even the most meticulous human testers can make mistakes due to fatigue, oversight, or the monotony of repetitive tasks. AI-driven automation greatly reduces these errors by executing the same checks the same way every time.

5. Cost Efficiency:

While there’s an initial investment, AI in testing ultimately leads to significant cost savings. By automating repetitive tasks, reducing manual effort, and catching bugs earlier in the development cycle (where they are cheaper to fix), organizations can optimize their testing budget.

6. Early Bug Detection:

AI can be integrated throughout the development pipeline, allowing for continuous testing and the identification of defects at very early stages. Catching bugs early is critical because the cost of fixing a defect rises sharply the later it is discovered.

7. Smarter Test Case Generation:

AI can analyze historical data, code changes, and user behavior to intelligently generate new, effective test cases. This moves beyond predefined scripts and creates more relevant and impactful tests.

8. Predictive Analytics for Quality:

AI can predict potential areas of risk and vulnerability in the software based on past data and code complexity. This allows teams to proactively allocate testing resources to critical areas, preventing issues before they arise.
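A simple way to picture risk-based prioritization is a weighted score over historical signals. The sketch below is a hand-weighted heuristic, not a trained model. The `ModuleStats` fields, the weights, and the module names are all hypothetical. An AI-driven tool would typically learn such weights from past defect data instead of fixing them by hand.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    past_defects: int    # defects traced to this module in earlier releases
    recent_commits: int  # code churn in the current release window
    complexity: int      # e.g. cyclomatic complexity


# Hypothetical weights; a learning-based tool would fit these from history
WEIGHTS = {"past_defects": 0.5, "recent_commits": 0.3, "complexity": 0.2}


def risk_score(module, maxima):
    """Weighted sum of signals, each scaled to [0, 1] by its maximum across modules."""
    score = 0.0
    for field, weight in WEIGHTS.items():
        top = maxima[field]
        score += weight * (getattr(module, field) / top if top else 0.0)
    return score


def prioritize(modules):
    """Return modules ordered from highest to lowest risk score."""
    maxima = {field: max(getattr(m, field) for m in modules) for field in WEIGHTS}
    return sorted(modules, key=lambda m: risk_score(m, maxima), reverse=True)


modules = [
    ModuleStats("checkout", past_defects=12, recent_commits=30, complexity=45),
    ModuleStats("search", past_defects=3, recent_commits=5, complexity=20),
    ModuleStats("profile", past_defects=6, recent_commits=25, complexity=15),
]
print([m.name for m in prioritize(modules)])  # ['checkout', 'profile', 'search']
```

Testing effort then goes first to the modules at the top of the list. Here `checkout` ranks highest because it leads on every signal.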

9. Optimized Test Suite Maintenance:

As software evolves, test suites often become bloated and difficult to maintain. AI can help identify redundant or outdated test cases, recommending optimizations to keep the test suite lean, efficient, and relevant. With solutions like Shift Smart with IQ, teams can accelerate maintenance, cut waste, and stay release-ready at all times.
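Coverage is one common signal for redundancy. If everything a test executes is also executed by another test, that test is a candidate for removal. The sketch below assumes per-test line coverage has already been collected; the test names and `file:line` entries are hypothetical. It flags tests whose coverage is a subset of another test's coverage.

```python
def find_redundant_tests(coverage):
    """Return tests whose covered lines are contained in another test's coverage.

    `coverage` maps test name -> set of covered "file:line" strings.
    Tests with identical coverage keep only the alphabetically first one.
    """
    redundant = set()
    for a, cov_a in coverage.items():
        for b, cov_b in coverage.items():
            if a != b and (cov_a < cov_b or (cov_a == cov_b and a > b)):
                redundant.add(a)
                break
    return redundant


coverage = {
    "test_login_ok": {"auth.py:10", "auth.py:11", "auth.py:12"},
    "test_login_basic": {"auth.py:10", "auth.py:11"},
    "test_login_ok_copy": {"auth.py:10", "auth.py:11", "auth.py:12"},
    "test_logout": {"auth.py:40"},
}
print(sorted(find_redundant_tests(coverage)))  # ['test_login_basic', 'test_login_ok_copy']
```

Identical coverage does not guarantee identical assertions. Flagged tests should therefore be reviewed rather than deleted automatically.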

10. Enhanced Test Data Management:

Generating realistic and comprehensive test data is often a bottleneck. AI can create synthetic test data that mimics real-world scenarios, ensuring robust testing without compromising sensitive user information.
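The snippet below is a minimal, rule-based sketch of synthetic test data. It produces user records with a realistic shape but no real personal data. This is a deliberate simplification: AI-based generators go further by learning the statistical properties of real data, which this code does not do. The field names and value lists are hypothetical. The generator is seeded so that a failing test can be rerun against exactly the same data.

```python
import random
import string


def synthetic_users(n, seed=42):
    """Generate fake user records with a realistic shape but no real personal data."""
    rng = random.Random(seed)  # seeded so the same data set can be regenerated
    first_names = ["Ana", "Ben", "Chen", "Divya", "Emeka", "Fatima"]
    domains = ["example.com", "example.org"]  # reserved domains, never real inboxes
    users = []
    for i in range(n):
        name = rng.choice(first_names)
        users.append({
            "id": i + 1,
            "name": name,
            "email": f"{name.lower()}{rng.randint(100, 999)}@{rng.choice(domains)}",
            "age": rng.randint(18, 90),
            "postcode": "".join(rng.choices(string.digits, k=5)),
        })
    return users


for user in synthetic_users(3):
    print(user)
```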

11. Self-Healing Tests:

Some advanced AI-powered testing tools can automatically adapt to minor UI changes or element locators in the application under test. This “self-healing” capability reduces the need for constant test script maintenance, saving considerable time and effort.
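The core idea behind self-healing locators can be sketched as ordered fallbacks. When the primary locator no longer matches, the test tries alternative attributes captured earlier and reports which one succeeded. In this sketch the page is modeled as a list of dictionaries rather than a real browser DOM. `find_element` is a hypothetical helper, not a Selenium or Playwright API. Commercial tools typically add learned similarity scoring on top of this basic pattern.

```python
def find_element(dom, locators):
    """Return the first element matching any locator, plus the locator that matched.

    `dom` is a simplified page model: a list of dicts of element attributes.
    `locators` is an ordered list of (attribute, value) pairs, primary first.
    """
    for attr, value in locators:
        for element in dom:
            if element.get(attr) == value:
                return element, (attr, value)
    raise LookupError(f"no element matched any of {locators}")


# Page after a UI change: the button's id was renamed from "submit-btn"
dom = [
    {"tag": "input", "id": "email", "name": "email"},
    {"tag": "button", "id": "submit-btn-v2", "data-testid": "login-submit", "text": "Log in"},
]

# Primary locator first, then fallbacks recorded when the test was authored
locators = [("id", "submit-btn"), ("data-testid", "login-submit"), ("text", "Log in")]
element, used = find_element(dom, locators)
if used != locators[0]:
    # A real tool would log this and propose updating the stored primary locator
    print(f"healed: primary locator failed, matched via {used}")
```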

12. Improved Test Reporting and Analysis:

AI can process test results rapidly, identify trends, and generate insightful reports, offering a clear and concise overview of the software’s quality status. This enables faster decision-making and more effective communication within development teams.

13. Accessibility Testing Enhancements:

AI can assist in identifying accessibility barriers in software, ensuring that applications are usable by a wider range of individuals, including those with disabilities.

14. User Experience (UX) Analysis:

Beyond just finding bugs, AI can analyze user interaction patterns and predict areas of friction or confusion in the user experience, leading to more intuitive and user-friendly applications.

By harnessing these benefits, organizations can not only elevate the quality of their software but also streamline their entire development lifecycle, leading to greater innovation and customer satisfaction. The shift towards smarter, AI-driven testing is no longer an option but a strategic imperative for staying ahead in the competitive digital landscape. Platforms like Qyrus, which focus on comprehensive testing solutions, exemplify how these benefits can be integrated into a robust testing strategy.

Framework for AI Model Testing

Implementing AI model testing effectively requires a structured approach. A well-defined framework ensures that all critical aspects of the AI model are thoroughly evaluated, from its initial design to its ongoing operation. Here’s a typical framework for AI model testing:

1. Define Testing Objectives:

- Setting Expectations: Clearly articulate what aspects of the AI model need to be tested (e.g., accuracy, fairness, robustness, performance).

- Establishing Target Metrics and Acceptance Criteria: Identify the target metrics and acceptable thresholds for each objective.

- Assessing Business Impact of Model Failures: Understand the business impact of potential model failures.
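Acceptance criteria are easiest to enforce when they are written down as data and checked automatically. The sketch below uses hypothetical metric names and thresholds. The point is the pattern: explicit minimums and maximums that a release gate can evaluate.

```python
# Hypothetical acceptance criteria agreed with stakeholders before testing starts
ACCEPTANCE_CRITERIA = {
    "accuracy": ("min", 0.90),
    "recall": ("min", 0.85),
    "p95_latency_ms": ("max", 200.0),
    "demographic_parity_diff": ("max", 0.05),
}


def evaluate_release(metrics):
    """Return human-readable failures; an empty list means every criterion is met."""
    failures = []
    for name, (kind, threshold) in ACCEPTANCE_CRITERIA.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif kind == "min" and value < threshold:
            failures.append(f"{name}: {value} < required {threshold}")
        elif kind == "max" and value > threshold:
            failures.append(f"{name}: {value} > allowed {threshold}")
    return failures


measured = {"accuracy": 0.93, "recall": 0.81, "p95_latency_ms": 140.0, "demographic_parity_diff": 0.03}
for failure in evaluate_release(measured):
    print("FAIL", failure)  # FAIL recall: 0.81 < required 0.85
```

The gate returns a list of failures rather than a single boolean. That way a pipeline log shows exactly which criterion blocked the release.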

2. Data Preparation and Validation:

- Training Data Validation: Ensure the quality, representativeness, and cleanliness of the data used to train the AI model. Check for biases, errors, and inconsistencies.

- Test Data Generation: Create independent and diverse test datasets that accurately reflect real-world scenarios and edge cases the model will encounter. This may involve generating synthetic data or using hold-out sets from the original data.
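Several of these training-data checks can be automated with very little code. The sketch below assumes records are plain Python dictionaries with hypothetical field names. It flags missing values, exact duplicates, and a dominant label. Production pipelines usually rely on dedicated data-validation tooling for richer checks.

```python
from collections import Counter


def validate_dataset(rows, label_key, required_keys, max_imbalance=0.8):
    """Run basic quality checks on a list of dict records and return the issues found."""
    if not rows:
        return ["dataset is empty"]
    issues = []
    # 1. Missing (None) values in required fields
    for i, row in enumerate(rows):
        missing = [k for k in required_keys if row.get(k) is None]
        if missing:
            issues.append(f"row {i}: missing {missing}")
    # 2. Exact duplicate records, which inflate metrics and can leak across splits
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(c - 1 for c in counts.values() if c > 1)
    if duplicates:
        issues.append(f"{duplicates} duplicate row(s)")
    # 3. Class imbalance: flag when a single label dominates
    label, count = Counter(row.get(label_key) for row in rows).most_common(1)[0]
    if count / len(rows) > max_imbalance:
        issues.append(f"label {label!r} is {count / len(rows):.0%} of rows")
    return issues


rows = [
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": None, "income": 61000, "label": "approve"},
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": 51, "income": 30000, "label": "deny"},
]
for issue in validate_dataset(rows, "label", ["age", "income", "label"]):
    print(issue)
```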

3. Model Evaluation and Benchmarking:

- Baseline Performance: Establish a baseline for the model’s performance using standard metrics (e.g., accuracy, precision, recall, F1-score for classification; RMSE, MAE for regression).

- Comparative Analysis: Compare the model’s performance against alternative models or human benchmarks to assess its effectiveness.

- Statistical Significance: Use statistical methods to determine if observed performance differences are significant.
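McNemar's test is one common choice for comparing two classifiers on the same test set. It looks only at the cases where the two models disagree about correctness. The sketch below implements the exact (binomial) form with the standard library. The prediction lists are toy values, and statistics packages such as statsmodels offer ready-made implementations.

```python
from math import comb


def mcnemar_exact(y_true, pred_a, pred_b):
    """Two-sided exact McNemar test for two classifiers scored on the same examples.

    b = examples model A gets right and model B gets wrong; c = the reverse.
    Under the null hypothesis of equal error rates, b ~ Binomial(b + c, 0.5).
    """
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    n = b + c
    if n == 0:
        return 1.0  # the models are right and wrong on exactly the same examples
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Toy predictions: model A is right on 18 of 20 examples, model B on 10
y_true = [1] * 20
pred_a = [1] * 18 + [0] * 2
pred_b = [1] * 10 + [0] * 10
print(f"McNemar p-value: {mcnemar_exact(y_true, pred_a, pred_b):.4f}")
```

A small p-value (for example, below 0.05) suggests the difference in error rates is unlikely to be due to chance alone. It says nothing about which errors matter more to the business.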

4. Bias and Fairness Assessment:

- Identify Protected Attributes: Determine demographic or other sensitive attributes that need to be assessed for potential bias.

- Fairness Metrics: Apply specific fairness metrics (e.g., demographic parity, equalized odds) to evaluate if the model’s outcomes are equitable across different groups.

- Bias Mitigation Techniques: Implement strategies to reduce or eliminate identified biases in the model or its training data.
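The two fairness metrics named above can be computed directly from predictions and group labels. In this sketch, demographic parity difference is the largest gap in positive-prediction rate between groups. Equalized odds difference is the larger of the gaps in true-positive and false-positive rates. The data and group names are toy values. The Fairlearn library exposes `demographic_parity_difference` and `equalized_odds_difference` functions for the same purpose.

```python
def _rate(values):
    # Share of positive predictions; 0.0 for an empty slice (e.g. a group with no positives)
    return sum(values) / len(values) if values else 0.0


def _gap(per_group):
    return max(per_group.values()) - min(per_group.values())


def fairness_report(y_true, y_pred, groups):
    """Demographic-parity and equalized-odds differences for binary predictions (1 = positive)."""
    by_group = {}
    for t, p, g in zip(y_true, y_pred, groups):
        by_group.setdefault(g, []).append((t, p))
    selection, tpr, fpr = {}, {}, {}
    for g, pairs in by_group.items():
        selection[g] = _rate([p for _, p in pairs])      # P(pred = 1 | group)
        tpr[g] = _rate([p for t, p in pairs if t == 1])  # P(pred = 1 | y = 1, group)
        fpr[g] = _rate([p for t, p in pairs if t == 0])  # P(pred = 1 | y = 0, group)
    return {
        "demographic_parity_diff": _gap(selection),
        "equalized_odds_diff": max(_gap(tpr), _gap(fpr)),
    }


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(fairness_report(y_true, y_pred, groups))
# {'demographic_parity_diff': 0.5, 'equalized_odds_diff': 0.5}
```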

5. Robustness and Security Testing:

- Adversarial Attacks: Subject the model to carefully crafted adversarial examples to test its resilience against deliberate manipulation.

- Input Perturbation: Introduce noise, missing values, or slight variations to input data to assess the model’s stability and robustness to real-world imperfections.

- Security Vulnerability Scans: Identify potential security weaknesses that could be exploited by malicious actors.
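Input perturbation can be sketched as follows: add small random noise to each input many times and measure how often the prediction stays the same. `toy_model` below is a hypothetical stand-in for a real model. Random Gaussian noise only approximates real-world imperfections. It is not an adversarial attack, which instead searches for worst-case perturbations (for example, with gradient-based methods such as FGSM).

```python
import random


def toy_model(features):
    """Hypothetical stand-in classifier: flags a transaction when a weighted score exceeds 50."""
    amount, hour = features
    return 1 if 0.8 * amount + 0.2 * hour > 50 else 0


def perturbation_stability(model, inputs, noise_std=1.0, trials=20, seed=0):
    """Share of noisy copies whose prediction matches the prediction on the clean input."""
    rng = random.Random(seed)
    stable = total = 0
    for x in inputs:
        baseline = model(x)
        for _ in range(trials):
            noisy = [v + rng.gauss(0, noise_std) for v in x]  # Gaussian noise on every feature
            stable += model(noisy) == baseline
            total += 1
    return stable / total


# Inputs far from the decision boundary should be stable; (60, 10) sits right on it
print(perturbation_stability(toy_model, [(100, 10), (10, 3)]))
print(perturbation_stability(toy_model, [(60, 10)]))
```

Inputs whose predictions flip under tiny amounts of noise are good candidates to add to the test set as edge cases.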

6. Explainability and Interpretability Analysis:

- Feature Importance: Determine which input features contribute most to the model’s decisions.

- Local Explanations: Understand why the model made a specific prediction for an individual instance.

- Model Visualization: Use visualization techniques to understand the model’s internal workings and decision boundaries.
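Permutation importance is one model-agnostic way to estimate feature importance. Shuffle one feature column, re-score the model, and treat the drop in accuracy as that feature's importance. The toy model below deliberately uses only its first feature, so the second feature should score zero. The data and model are assumptions for illustration. scikit-learn provides `sklearn.inspection.permutation_importance`. Local explanations (for example, SHAP or LIME) are separate techniques not shown here.

```python
import random


def accuracy(model, X, y):
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled, one column at a time."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(x):
    # Hypothetical model that only looks at feature 0
    return 1 if x[0] > 0.5 else 0


X = [[i / 10, (i * 7 % 10) / 10] for i in range(10)]
y = [toy_model(x) for x in X]
print(permutation_importance(toy_model, X, y))  # feature 1 scores 0.0
```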

7. Performance and Scalability Testing:

- Latency and Throughput: Measure the model’s response time and the number of requests it can handle per unit of time.

- Resource Utilization: Monitor CPU, memory, and GPU usage to ensure efficient operation.

- Load and Stress Testing: Evaluate the model’s behavior under peak load conditions.
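Latency is usually reported as percentiles rather than as an average, because tail latency is what users notice under load. The sketch below times sequential calls to a hypothetical `fake_predict` function. It reports p50, p95, and p99 latency along with throughput. Sequential timing understates contention, so load and stress tests normally drive many concurrent requests with a dedicated load-testing tool.

```python
import statistics
import time


def benchmark(predict, inputs, warmup=5):
    """Measure per-request latency percentiles (ms) and sequential throughput (req/s)."""
    for x in inputs[:warmup]:
        predict(x)  # warm caches and lazy initialization before measuring
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        predict(x)
        latencies.append((time.perf_counter() - t0) * 1000)
    elapsed = time.perf_counter() - start
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points: cuts[49] ~ p50
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "p99_ms": cuts[98],
        "throughput_rps": len(inputs) / elapsed,
    }


def fake_predict(x):
    # Hypothetical stand-in for a model call; replace with a real inference client
    return sum(v * v for v in x)


inputs = [[float(i), float(i + 1)] for i in range(200)]
print(benchmark(fake_predict, inputs))
```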

8. Deployment and Monitoring (Continuous Testing):

- A/B Testing: Compare the performance of different model versions in a live environment.

- Drift Detection: Continuously monitor the model’s performance and data distribution in production to detect concept drift or data drift, which can degrade model accuracy over time.

- Automated Alerts: Set up alerts for performance degradation or anomalous behavior.
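One widely used drift statistic is the Population Stability Index (PSI). It compares the binned distribution of a feature in production against a reference sample, such as the training data. The sketch below is a plain-Python version under simple assumptions: equal-width bins over the reference range, with out-of-range values clamped to the edge bins. A common rule of thumb treats a PSI above about 0.25 as significant drift, but alert thresholds should be tuned per feature.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a production sample.

    Bin edges come from the reference range; a small floor avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(1000)]  # e.g. a feature's values at training time
production = [v + 5 for v in reference]     # the same feature after a large shift
print(f"PSI vs itself:   {psi(reference, reference):.3f}")
print(f"PSI after shift: {psi(reference, production):.3f}")
```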

This comprehensive framework ensures that AI models are not only functional but also reliable, fair, secure, and performant in real-world applications. It’s an iterative process that continues throughout the model’s lifecycle, emphasizing the importance of continuous vigilance and refinement.

For more insights into how continuous testing and AI intersect, exploring resources like the blog on AI in software testing can be highly beneficial.

Key Challenges in AI Model Testing

AI brings transformative potential to software testing, but unlocking its full value requires overcoming unique hurdles. The very characteristics that make AI powerful (its adaptability, scale, and complexity) also create testing challenges that demand innovative approaches. Recognizing these challenges is the first step to building reliable, trustworthy AI systems.

1. The “Black Box” Problem

Many advanced models, especially deep learning networks, operate with little transparency. Understanding why a model made a decision is often difficult, complicating debugging, validation, and regulatory compliance. This lack of interpretability can erode trust among stakeholders.

2. Data Dependency & Quality Risks

AI’s performance hinges on data—and poor data can cripple outcomes:

- Data Volume → Vast amounts of labeled, high-quality data are required, but acquiring and curating it is costly and time-intensive.

- Data Bias → Biased or incomplete training data leads to unfair or discriminatory results.

- Data Drift → As real-world data evolves, models can degrade unless continuously monitored and retrained.

3. Non-Determinism

Unlike traditional software, AI may not always produce the same output for the same input due to stochastic processes or continuous learning. This unpredictability makes reproducing errors and ensuring consistency more difficult.
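A first step toward reproducible AI tests is controlling randomness explicitly. In the sketch below, `train_toy_model` is a hypothetical stand-in for a stochastic training run. Passing the same seed to a dedicated random generator makes two runs identical, so a failure can be replayed. Real frameworks have their own seeding calls (for example, `numpy.random.default_rng` or `torch.manual_seed`). Some GPU operations can remain nondeterministic even when seeded.

```python
import random


def train_toy_model(data, seed=None):
    """Stand-in for stochastic training: shuffles the data and draws random initial weights."""
    rng = random.Random(seed)  # a dedicated, seedable generator instead of global state
    shuffled = data[:]
    rng.shuffle(shuffled)
    weights = [rng.uniform(-1, 1) for _ in range(3)]
    return shuffled, weights


data = list(range(10))
run1 = train_toy_model(data, seed=1234)
run2 = train_toy_model(data, seed=1234)
assert run1 == run2  # same seed -> identical run, so a failing test can be replayed
```

Recording the seed alongside each test result makes it possible to reproduce a flaky failure later.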

4. Evolving Nature of AI

AI models aren’t static. As they adapt, a test suite valid today may be obsolete tomorrow. This necessitates continuous re-evaluation and adaptive testing strategies.

5. Adversarial Vulnerabilities

AI systems can be tricked by maliciously crafted inputs, leading to incorrect predictions. Guarding against adversarial attacks requires rigorous, ongoing security testing.

6. Ambiguity in “Correct” Outcomes

For tasks like natural language processing or image recognition, defining a single correct answer can be subjective. This makes accuracy assessment and oracle creation more complex than in traditional software.

7. Scalability Challenges

As models and datasets grow, so do the computational demands of testing. Ensuring scalability without prohibitive resource costs is a major barrier.

8. Lack of Standardization

Industry-wide standards for fairness, explainability, and testing tools remain immature. The absence of common benchmarks leads to fragmented approaches and inconsistent evaluations.

9. Integration with DevOps/MLOps

Embedding AI model testing into DevOps and MLOps pipelines requires careful architectural design and specialized tooling—an integration many organizations still struggle to achieve.

Addressing these challenges requires more than technical fixes—it demands advanced expertise, robust methodologies, and a culture of continuous adaptation.

This is where a strategic approach to continuous testing, as highlighted in “Why Organizations Need Software Testing,” becomes even more critical, ensuring that AI-driven systems are reliable and trustworthy from inception to deployment.

Best Practices for Effective AI Model Testing

Navigating the complexities of AI model testing requires a strategic, systematic approach. By following proven best practices, organizations can maximize AI’s benefits while mitigating risks, ensuring the delivery of high-quality, reliable, and ethical AI systems.

1. Adopt a Test-First Mindset

Integrate testing from the very beginning of the AI lifecycle—data collection, model design, and training—rather than treating it as an afterthought.

2. Prioritize Data Quality & Management

- Rigorous Data Validation: Clean, validate, and pre-process datasets.

- Bias Detection & Mitigation: Identify and address biases through careful collection and fairness techniques.

- Data Version Control: Treat data as a first-class asset with versioning for reproducibility and traceability.
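At its simplest, data versioning means recording a fingerprint of the exact data a model was trained or tested on. The sketch below hashes records, assumed to be JSON-serializable dictionaries, with SHA-256 so the hash can be logged next to model metrics. Tools such as DVC manage this at scale, including storage and history.

```python
import hashlib
import json


def dataset_fingerprint(records):
    """Content hash of a dataset: identical records in identical order -> identical hash."""
    digest = hashlib.sha256()
    for record in records:
        # sort_keys makes the hash independent of key order within each record
        digest.update(json.dumps(record, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


train_v1 = [{"id": 1, "text": "great product", "label": 1}, {"id": 2, "text": "broken", "label": 0}]
print(dataset_fingerprint(train_v1))
```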

3. Establish Clear Metrics & Baselines

Define precise metrics for accuracy, performance, fairness, and robustness. Set baseline targets to measure ongoing improvements.

4. Build Diverse & Representative Test Datasets

- Separate Training/Validation/Test Sets: Prevent data leakage and ensure unbiased evaluation.

- Edge Cases & Outliers: Include unusual scenarios to test resilience.

- Real-World Data: Mirror production conditions as closely as possible.
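Data leakage often happens when records from the same entity, such as one user's events, land in both the training and test sets. The sketch below splits by a grouping key so that each entity appears in exactly one split. It then verifies that no key leaks across splits. The `user_id` field and the 70/15/15 ratios are assumptions. scikit-learn's `GroupShuffleSplit` implements a similar group-aware split.

```python
import random


def split_dataset(records, key, ratios=(0.7, 0.15, 0.15), seed=7):
    """Split by a grouping key (e.g. user id) so no entity appears in more than one split."""
    keys = sorted({r[key] for r in records})
    random.Random(seed).shuffle(keys)
    n_train = round(len(keys) * ratios[0])  # round() avoids float truncation surprises
    n_val = round(len(keys) * ratios[1])
    train_keys = set(keys[:n_train])
    val_keys = set(keys[n_train:n_train + n_val])
    splits = {"train": [], "validation": [], "test": []}
    for r in records:
        if r[key] in train_keys:
            splits["train"].append(r)
        elif r[key] in val_keys:
            splits["validation"].append(r)
        else:
            splits["test"].append(r)
    return splits


def check_no_leakage(splits, key):
    """Raise ValueError if any grouping key appears in two different splits."""
    seen = {}
    for name, rows in splits.items():
        for r in rows:
            first = seen.setdefault(r[key], name)
            if first != name:
                raise ValueError(f"{key}={r[key]!r} appears in both {first} and {name}")


records = [{"user_id": u, "event": e} for u in range(20) for e in range(3)]
splits = split_dataset(records, "user_id")
check_no_leakage(splits, "user_id")
print({name: len(rows) for name, rows in splits.items()})
```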

5. Automate Testing Wherever Possible

Use AI-powered testing frameworks to automate repetitive tasks like test execution, data generation, and reporting. This frees testers for exploratory and complex validation.

6. Implement Continuous Testing & Monitoring (MLOps)

- CI/CD Integration: Integrate model tests into CI/CD pipelines for ongoing validation.

- Production Monitoring: Track performance, drift, and bias in real-world use.

- Automated Alerts: Detect anomalies and trigger retraining proactively.

7. Focus on Explainability & Interpretability (XAI)

- Understand Model Decisions: Apply explainability techniques to see what drives the model’s predictions.

- Validate Explanations: Check that explanations are reliable and consistent.

8. Conduct Robustness & Adversarial Testing

Stress-test models against noisy inputs, subtle perturbations, and adversarial attacks to safeguard performance under unpredictable conditions.

9. Foster Cross-Functional Collaboration

Bring together data scientists, AI engineers, testers, and domain experts to ensure well-rounded validation from multiple perspectives.

10. Regularly Review and Adapt

Continuously refine strategies and tools to align with the rapidly evolving AI landscape. Staying current ensures long-term effectiveness.

Conclusion

AI is reshaping software testing, from intelligent test generation and faster execution to proactive bug detection and continuous monitoring. The results: higher accuracy, fewer errors, faster release cycles, and stronger trust in AI-driven outcomes.

Yet this transformation also brings new challenges, such as black-box models, data dependencies, and evolving algorithms. The solution lies in a structured testing framework and a disciplined approach that prioritizes data quality, leverages AI-powered automation, embeds continuous monitoring, and drives collaboration.

The integration of AI into software testing empowers organizations to shift from reactive problem-solving to proactive quality assurance, building systems that are trustworthy, resilient, and future-ready.

Ready to start your AI-driven transformation? Learn how intelligent automation can revolutionize your software quality and accelerate your digital journey. Explore our AI and ML solutions now!

FAQ About AI Model Testing

How is AI model testing different from traditional software testing?

Traditional software testing primarily focuses on verifying explicit rules and functional requirements of programmed code. AI model testing, on the other hand, evaluates the performance, accuracy, fairness, robustness, and learning behavior of models that learn from data, often without explicitly programmed rules. It delves into the model’s intelligence and its ability to generalize.

Why is data quality so important in AI model testing?

AI models learn from data. If the training data is of poor quality, biased, incomplete, or unrepresentative, the AI model will learn those flaws and produce inaccurate, unfair, or unreliable results. High-quality, diverse, and clean data is foundational for building and testing effective AI models.

Will AI replace human testers?

No, AI is highly unlikely to entirely replace human testers. Instead, AI acts as a powerful augmentation tool. It automates repetitive tasks, identifies patterns, and handles large-scale data analysis, freeing human testers to focus on more complex, exploratory, creative, and critical thinking tasks that require intuition, ethical judgment, and deep domain knowledge.

What metrics are commonly used to evaluate AI models?

Common metrics include accuracy, precision, recall, and F1-score (for classification models); Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE) (for regression models); and various fairness metrics (e.g., demographic parity, equalized odds) to assess bias.

What is model drift, and why does it matter in AI testing?

Model drift occurs when real-world data changes over time in ways that degrade a deployed AI model’s performance. It covers data drift, where the statistical properties of the input features change, and concept drift, where the relationship between the input features and the target variable changes. Drift matters in AI testing because it requires continuous monitoring of models in production, and retraining when significant drift is detected, to maintain accuracy and reliability.

How can AI help generate test cases?

AI can analyze historical test data, code changes, user behavior, and application logs to intelligently suggest or generate new, effective test cases. It can identify patterns that indicate areas of high risk or missed coverage, thus optimizing the test suite and making it more efficient.

What is Explainable AI (XAI), and why is it important for testing?

Explainable AI (XAI) refers to methods and techniques that allow humans to understand the output of AI models. It’s important for testing because it helps unravel the “black box” nature of complex AI models, making it possible to understand why a model made a particular decision. This is crucial for debugging, identifying biases, building trust, and meeting regulatory compliance, especially in critical applications.