According to Gartner, the adoption of multimodal AI technologies is projected to grow exponentially, with an estimated 60% of enterprises implementing multimodal interfaces by 2026.

Multimodal AI represents a paradigm shift in human-machine interaction. Whether through voice-enabled assistants, chatbots, or visual recognition systems, businesses can offer customers a choice of interaction modalities that suit their needs, enhancing engagement and satisfaction. This versatility in communication channels allows for seamless interactions, personalized responses, and real-time assistance, leading to improved customer experiences and loyalty.

As businesses embrace the transformative potential of Multimodal AI, the introduction of OpenAI’s GPT-4o heralds a new era of intelligent systems capable of holistic understanding and dynamic interactions. Unlike traditional models restricted to text, GPT-4o seamlessly integrates text, vision, and audio. This allows it to understand and generate human-like language, process and create images, and comprehend and produce audio – all in one unified system.

What is OpenAI's GPT-4o?

GPT-4o, where the “o” stands for “Omni,” is a multimodal model with text, visual, and audio input and output capabilities. According to OpenAI, it generates text twice as fast as its predecessor, GPT-4 Turbo (GPT-4T), and is 50% cheaper to use through the API. In the public announcement, the company also stated that GPT-4o, the latest model in its GPT-4 family, can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

Although the launch demo focused on GPT-4o’s visual and audio capabilities, the release blog explains how far its capabilities go beyond those of earlier GPT-4 releases. Unlike its predecessors, which relied on a pipeline of separate models for voice interactions, GPT-4o is a single model trained end to end across text, vision, and audio. It accepts any combination of text, audio, image, and video as input and generates any combination of text, audio, and image output. This native multimodality broadens GPT-4o’s understanding of the world.

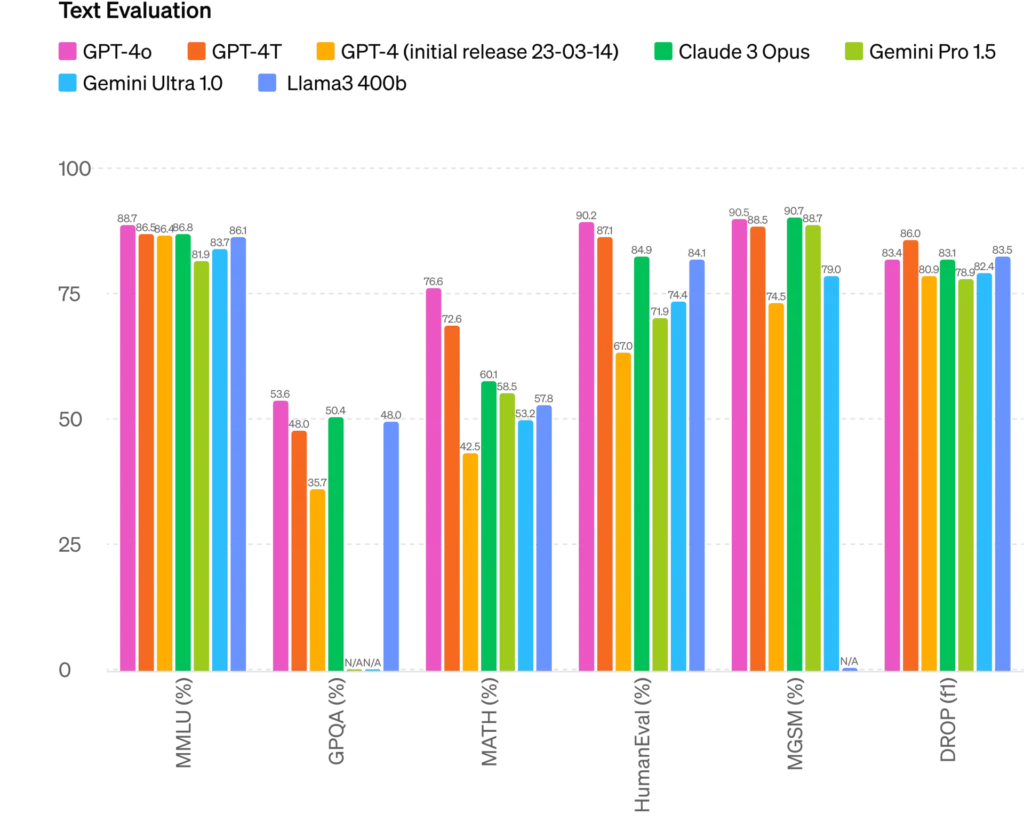

According to self-released text evaluation benchmark results released by OpenAI GPT-4o projected slightly improved or similar scores compared to other LMMs like Anthropic’s Claude 3 Opus, previous GPT-4 iterations, Meta’s Llama3, and Google’s Gemini. The below figure illustrates the same:

Image source- https://openai.com/index/hello-gpt-4o/

Note, however, that when these benchmarks were released, Meta had not finished training the 400B variant of Llama 3, so the Llama 3 comparison reflects a version of that model that was still in training.

Demystifying the Power of GPT-4o: A Technical Glimpse

Transformer Architecture:

GPT-4o is built on the Transformer architecture, a deep learning design well suited to processing sequential data. This lets it model relationships between different elements of an input, including elements that come from different modalities.

Self-Attention Mechanism:

The Transformer architecture relies on a “self-attention mechanism”: for every element of the input, the model computes weights over all the other elements, which lets it focus on the parts of the input most relevant to the task.
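OpenAI has not published GPT-4o’s internal architecture, so the following is only a generic illustration of single-head scaled dot-product self-attention, the textbook Transformer building block, and not GPT-4o’s actual implementation. The toy input vectors and identity weight matrices are assumptions chosen purely to keep the arithmetic easy to follow.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over the rows of x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # scores[i][j]: how strongly element i should attend to element j.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v), weights


# Toy input: three elements (e.g. tokens) with 2-dimensional embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, attn = self_attention(x, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of `attn` sums to 1 and shows how much one element “focuses” on every other element; real models learn the weight matrices and run many such heads in parallel.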

Pre-training on Massive Datasets:

GPT-4o benefits from being pre-trained on vast amounts of text, image, and audio data. This equips the model with the ability to recognize patterns and relationships within and across modalities.

The Multimodal Revolution: A Universe of Possibilities that GPT-4o Offers to Businesses

Enhanced Customer Service:

Customer service chatbots can analyze screenshots or photos you share to pinpoint the issue, leading to a more streamlined and personalized experience.
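As a hedged sketch of how such a support chatbot might pass a customer’s screenshot to GPT-4o, the function below only builds a request body in the shape used by OpenAI’s Chat Completions API (a text part plus an `image_url` part carrying a base64 data URL). The function name, system prompt, and placeholder image bytes are illustrative assumptions; actually sending the request would require the official `openai` package and an API key, which are left out so the example runs offline.

```python
import base64
import json
from typing import Any, Dict


def build_screenshot_request(question: str, image_bytes: bytes,
                             mime_type: str = "image/png") -> Dict[str, Any]:
    """Build a hypothetical Chat Completions request pairing a question with a screenshot."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "system",
                # Illustrative instructions; a real support workflow would tune these.
                "content": "You are a customer-support assistant. "
                           "Identify the problem shown in the user's screenshot.",
            },
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    # The image is sent inline as a base64 data URL.
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
                ],
            },
        ],
    }


# Placeholder bytes stand in for a real screenshot file.
request = build_screenshot_request("Why does my checkout page show this error?", b"\x89PNG...")
print(json.dumps(request, indent=2)[:300])

# With the official SDK, the same body could then be sent as:
#   from openai import OpenAI
#   reply = OpenAI().chat.completions.create(**request)
```

Keeping payload construction separate from the network call makes the request easy to log, test, and redact before any customer image leaves the organization.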

Advanced Content Creation:

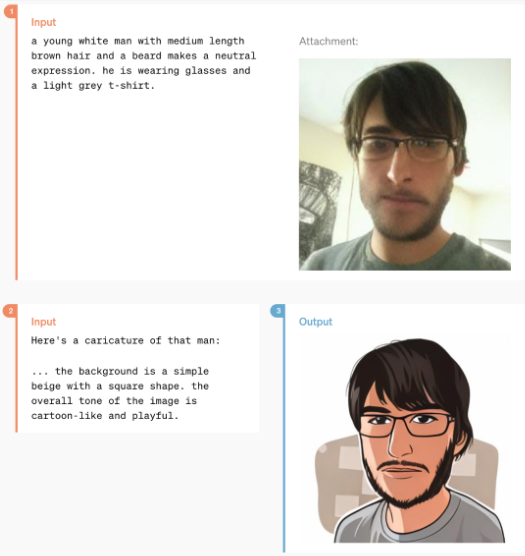

Provide a text description of a new product and have GPT-4o automatically generate an engaging video script, product image, and even a catchy jingle! For instance, the image generation capabilities of GPT-4o are robust, as it is capable of generating images based on a single reference and precise textual descriptions of visual content as depicted below:

Image source- https://openai.com/index/hello-gpt-4o/

Revolutionizing Education:

Imagine learning a new language through an immersive experience with GPT-4o translating spoken audio into text, generating accompanying visuals, and providing interactive exercises.

Supercharged Accessibility:

Multimodal capabilities can bridge the gap for individuals with disabilities. Text-to-speech functionality combined with image recognition can enhance accessibility for visually impaired users, while speech recognition coupled with text generation can empower those with speech limitations.

Boosting Scientific Discovery:

GPT-4o’s ability to analyze complex data sets, including scientific diagrams and audio recordings of experiments, can accelerate scientific research by unearthing hidden patterns and connections.

Coding Assistant on Steroids:

GPT-4o’s capabilities extend beyond just text and visuals. Imagine a coding assistant that not only understands your code but can also listen to your explanations in natural language and translate them into optimized code. This would revolutionize the way programmers work, saving them time and effort.

Transformative Impact of GPT-4o’s Multimodal AI Capabilities

GPT-4o’s ability to understand and interpret multiple data modalities empowers businesses to gain deeper insights into customer preferences, customer sentiment, and market trends, facilitating more informed decision-making and targeted strategies.

The transformative impact extends to improving customer experiences through tailored interactions, enhanced personalization, and predictive recommendations based on a comprehensive understanding of user behavior across various media formats. GPT-4o’s ability to process information across modalities promises to revolutionize various aspects of our lives:

1. Enhanced Human-Computer Interaction:

Gartner predicts that by the year 2025, approximately 50% of user interactions with digital technology will occur via voice commands. This shift towards voice-driven interactions reflects the growing prevalence and acceptance of voice assistants, smart speakers, and speech-enabled devices in everyday life.

Embracing multimodal AI facilitates more intuitive and natural interactions with AI systems, allowing users to engage through spoken commands, text inputs, and visual cues in a dynamic interaction environment that enhances usability, fosters accessibility, and redefines the boundaries of human-machine collaboration in the digital age.

2. Personalized Experiences:

Multimodal AI enables personalized user experiences by tailoring interactions to each user. By understanding and responding to customer queries, feedback, and requests with human-like fluency and context awareness, GPT-4o can deliver recommendations, support, and solutions that match individual preferences and needs. This level of personalization fosters stronger relationships, boosts customer satisfaction, and cultivates loyalty through a seamless, customized experience for each customer.

Imagine a scenario where a user is browsing an online fashion store powered by Multimodal AI. As the user scrolls through different clothing items, the AI system analyzes not only the text descriptions but also the images associated with each product.

Through this multimodal analysis, the AI can understand the user’s preferences more deeply. For instance, if the user consistently hovers over vibrant and casual outfits in the images, the AI can infer that the user prefers trendy and colorful clothing styles.

Based on this analysis, the AI can then personalize the user’s shopping experience by recommending similar items in line with their preferences.
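The hover-based inference in this scenario can be sketched as a simple weighted tag count. This assumes a hypothetical catalog in which each product has already been tagged with style attributes (for example, by running a multimodal model over the product images) and a log of how long the user hovered over each item. The product IDs, tags, and dwell times are made up for illustration; a production recommender would be considerably more sophisticated.

```python
from collections import Counter
from typing import Dict, List, Set


def infer_preferences(hover_ms: Dict[str, int], catalog: Dict[str, Set[str]]) -> Counter:
    """Score each style tag by the total hover time spent on products carrying it."""
    prefs: Counter = Counter()
    for product_id, dwell in hover_ms.items():
        for tag in catalog.get(product_id, set()):
            prefs[tag] += dwell
    return prefs


def recommend(hover_ms: Dict[str, int], catalog: Dict[str, Set[str]], k: int = 2) -> List[str]:
    """Rank products the user has not looked at yet by how well their tags match."""
    prefs = infer_preferences(hover_ms, catalog)
    candidates = [pid for pid in catalog if pid not in hover_ms]
    # Higher tag score first; product ID breaks ties deterministically.
    ranked = sorted(candidates, key=lambda pid: (-sum(prefs[t] for t in catalog[pid]), pid))
    return ranked[:k]


# Hypothetical tags, e.g. extracted from product images by a multimodal model.
catalog = {
    "tee-neon": {"vibrant", "casual"},
    "hoodie-orange": {"vibrant", "casual"},
    "blazer-grey": {"muted", "formal"},
    "shirt-yellow": {"vibrant", "casual"},
    "trousers-navy": {"muted", "formal"},
    "dress-floral": {"vibrant", "formal"},
}
# Hypothetical hover log in milliseconds.
hover_ms = {"tee-neon": 4000, "hoodie-orange": 3000, "blazer-grey": 500}
print(recommend(hover_ms, catalog))
```

Because the user lingered on vibrant, casual items, unseen products sharing those tags rank highest.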

3. Improved Efficiency and Productivity:

Multimodal AI systems like GPT-4o can automate a wide array of manual tasks across different departments within an organization. This automation streamlines workflows, reduces human intervention in repetitive processes, and accelerates the completion of routine activities. From generating content like marketing materials and product descriptions to analyzing customer feedback across various channels, Multimodal AI automates time-consuming tasks, freeing up valuable resources for employees to focus on more strategic initiatives.

For instance, teams could use voice commands to generate comprehensive reports that combine text, charts, and images, saving time and boosting operational efficiency. Similarly, by leveraging multimodal models such as GPT-4o, digital marketers can automate the creation of product descriptions: the model analyzes images of new arrivals and produces engaging, SEO-optimized text, reducing manual content-creation effort while keeping tone and style consistent across all product listings.

The Roadmap Ahead: Ensuring Responsible Use of Multimodal AI for a Positive Future

GPT-4o’s broader impact is expected to be significant, given its advanced capabilities and wide range of potential applications. Alongside this potential, however, it carries risks related to disinformation, cybercrime, and misuse. Striking a balance between innovation and responsible regulation will be crucial in shaping its impact on society.

To ensure that multimodal AI like GPT-4o leads to positive outcomes and fosters a thriving ecosystem, stakeholders at large must take proactive measures to mitigate risks and promote ethical, responsible use of this technology.

Steps Enterprises Should Take

Ethical AI Governance:

Businesses using GPT-4o must establish robust governance frameworks that prioritize ethical AI practices, transparency, and accountability. This includes conducting impact assessments, ensuring data privacy and security, and upholding fairness and inclusivity in AI applications.

User Empowerment and Education:

Companies should empower users with awareness and education on interacting with AI systems responsibly. Clear communication on AI capabilities, limitations, and the potential for misinformation can help users make informed decisions and engage with AI technologies mindfully.

Continuous Monitoring and Oversight:

Regular monitoring of AI systems, performance metrics, and feedback mechanisms is essential to detect and address biases, errors, or malicious activity in real time. Businesses should implement mechanisms for oversight, auditability, and corrective action to maintain integrity and trust in AI applications.

Steps Government Should Take

Regulatory Frameworks:

Governments play a crucial role in setting regulatory standards and guidelines for the ethical development and deployment of AI technologies. Establishing clear legal frameworks, data protection laws, and accountability mechanisms can ensure that AI systems like GPT-4o are used responsibly and in compliance with legal and ethical norms.

Collaborative Partnerships:

Collaboration between governments, industry stakeholders, academia, and civil society is vital to address the multifaceted challenges posed by AI technology. Joint initiatives, task forces, and research programs can facilitate knowledge sharing, best practices, and risk mitigation strategies to safeguard against misuse of AI.

Public Awareness Campaigns:

Governments should invest in public awareness campaigns to educate citizens about the benefits, risks, and ethical considerations associated with AI technologies. Promoting digital literacy, critical thinking skills, and responsible AI use can empower individuals to navigate the evolving landscape of multimodal AI technologies effectively.

By adopting a proactive and collaborative approach to ensure responsible use of multimodal AI like GPT-4o, businesses and governments can harness the transformative potential of AI technology while mitigating risks and creating a sustainable ecosystem for innovation and growth. Embracing ethical AI principles, transparency, and user empowerment is essential to unlock the full benefits of AI technologies and secure a positive future where AI serves as a force for good in society.

Meanwhile, if your organization is keen to transform its AI strategy and embrace the future possibilities of multimodal AI, you can reach out to our experts to get started.

Discover how Quinnox can elevate your business with cutting-edge AI/ML solutions that drive growth, efficiency, and innovation. Contact us today!

Authored by Vineet Kumar, Chirag Bhanushali & Chetan Parmar